Author: Gabriel Rüeck

Colored Network Diagrams (Part 2)

Executive Summary

This blog post is the continuation of the blog post Colored Network Diagrams (Part 1). In this blog post, I describe some improvements and enhanced capabilities of the scripts that have been presented in Part 1 already. The new set of scripts can now additionally color network graphs (PERT charts) according to:

- … remaining durations

- … remaining work

- … criticality based on remaining duration

Before reading this blog post, you need to read the blog post Colored Network Diagrams (Part 1) first to fully understand the context.

Preconditions

In order to use the approach described here, you should:

- … have access to a Linux machine or account

- … have a MySQL or MariaDB database server set up, running, and have access to it

- … have the package graphviz [2] installed

- … have some basic knowledge of how to operate in a Linux environment and some basic understanding of shell scripts

Description and Usage

In the previous blog post Colored Network Diagrams (Part 1) we looked at a method on how to create colored network graphs (PERT charts) based on the duration, the work, the slack, and the criticality of the individual tasks. We also looked at how a network graph looks like when some of the tasks have already been completed (tasks in green color). We will now enhance the script sets in order to process remaining duration and remaining work. In addition, this information shall also be displayed for each task. For this, the scripts create_project_db.sql, msp2mysql.sh, and mysql2dot.sh require modifications so that they can process the additional information. I decided to name the new scripts with a “-v2” suffix so that they can be distinguished from the ones in the previous blog post.

Let us first look at the script create_project_db-v2.sql which sets up the database [1]. Compared to the previous version, it now sets up some new fields which have been highlighted in red color below. For the sake of readability, only parts of the script have been displayed here.

...

task_duration INT UNSIGNED DEFAULT 0,\

task_work INT UNSIGNED DEFAULT 0,\

free_slack INT DEFAULT 0,\

total_slack INT DEFAULT 0,\

finish_slack INT DEFAULT 0,\

rem_duration INT UNSIGNED DEFAULT 0,\

rem_work INT UNSIGNED DEFAULT 0,\

`%_complete`

In addition to the fields rem_duration and rem_work, we also prepare the database for 3 out of the 4 slack types that tasks have (free_slack, total_slack, finish_slack), in order to be able to execute some experiments in future releases. Furthermore, we can now capture the link_lag between two linked tasks; this information will not be used now, but in a future version of the script set.

Step 1: Parsing the Project Plan

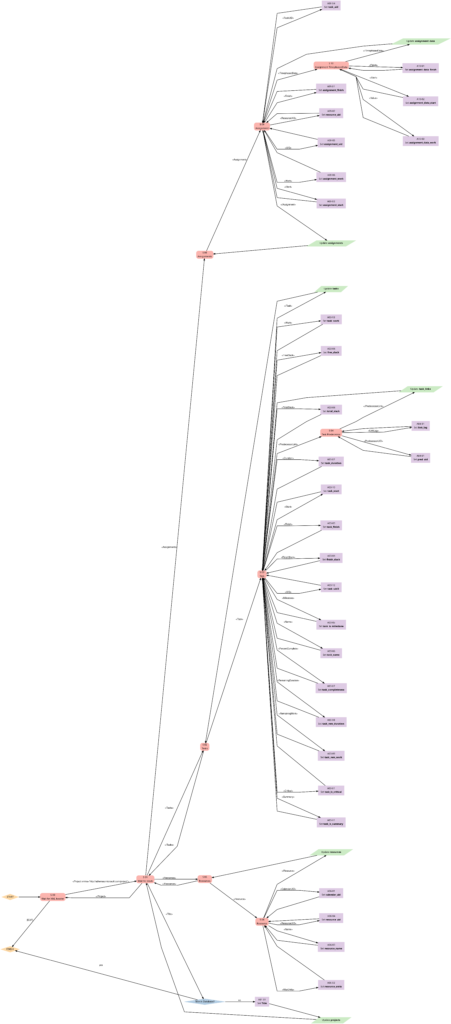

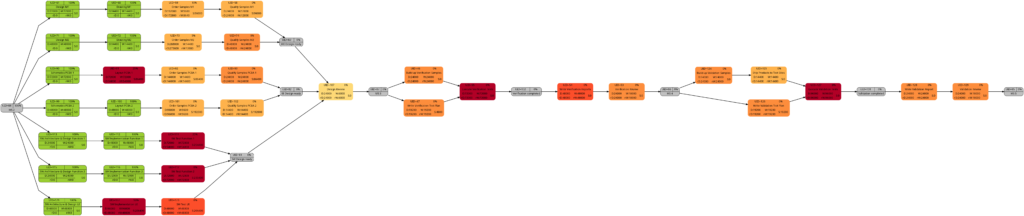

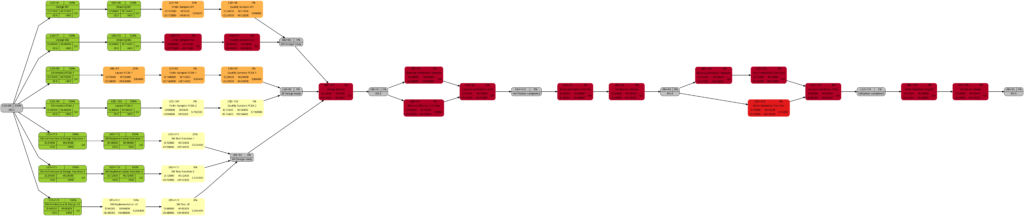

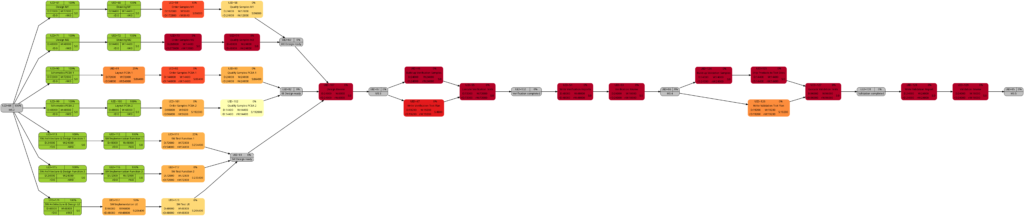

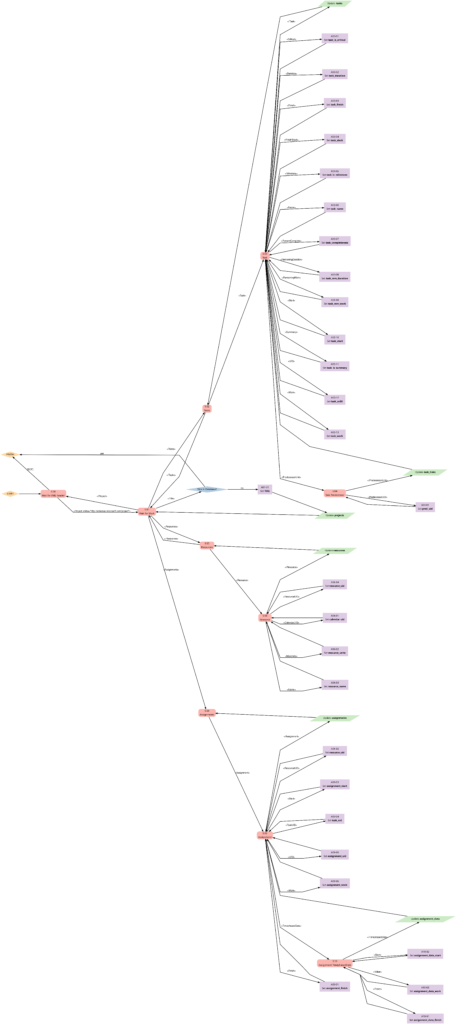

The script msp2mysql-v2.sh parses the Microsoft® Project plan in XML format. It contains a finite-state machine (see graph below), and the transitions between the states are XML tags (opening ones and closing ones).

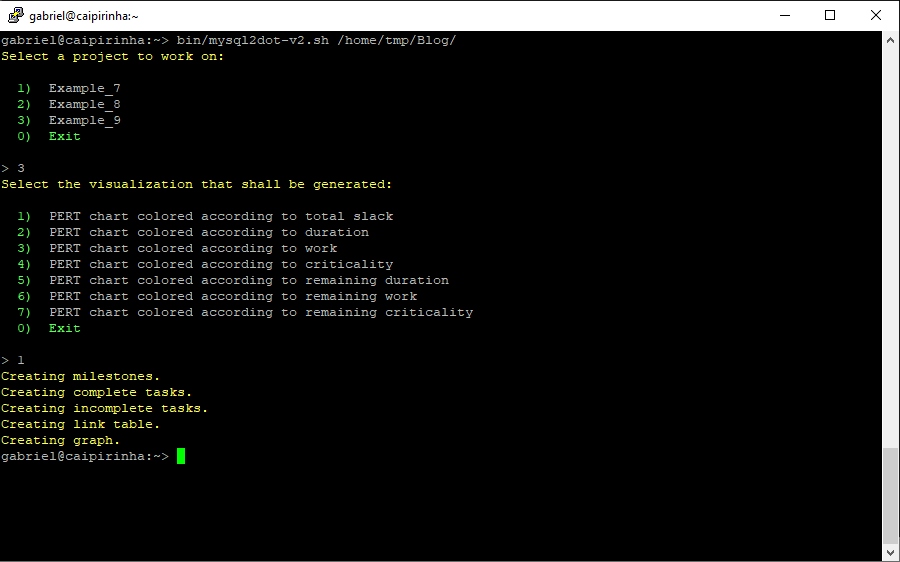

Step 2: Generating a Graph

Now we can extract meaningful data from the database and transform this into a colored network graph; this is done with the bash script mysql2dot-v2.sh. mysql2dot-v2.sh can create colored network graphs according to:

- … the task duration

- … the work allocated to a task

- … the total slack of a task

- … the criticality of a task

- … the remaining task duration

- … the remaining work allocated to a task

- … the remaining criticality of a task

mysql2dot-v2.sh relies on a working installation of the graphviz package, and in fact, it is graphviz and its collection of tools that create the graph, while mysql2dot-v2.sh creates a script for graphviz in the dot language. Let us look close to some examples:

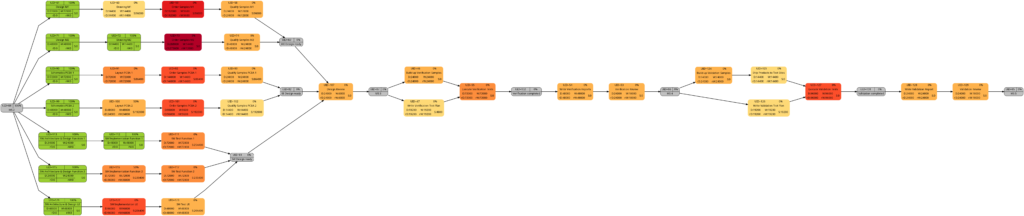

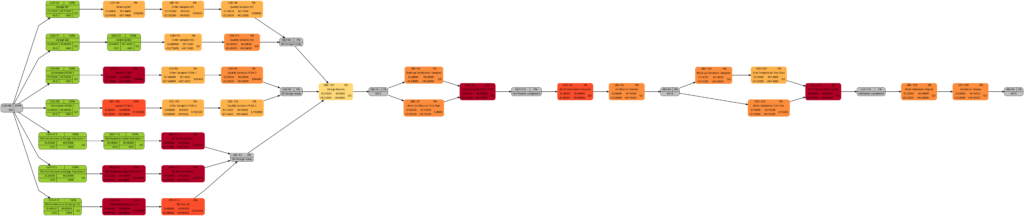

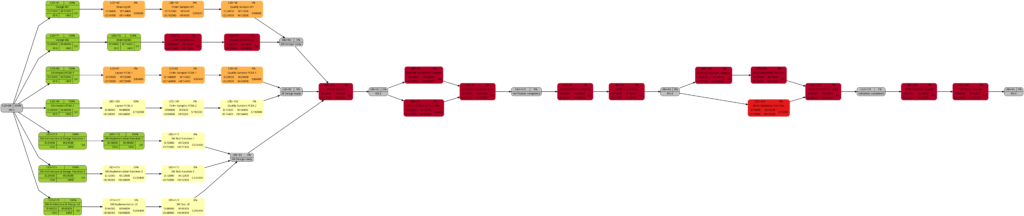

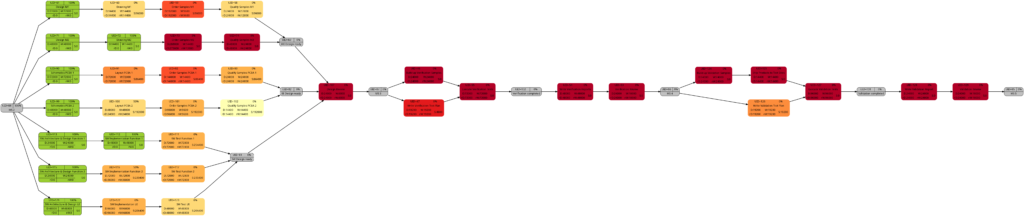

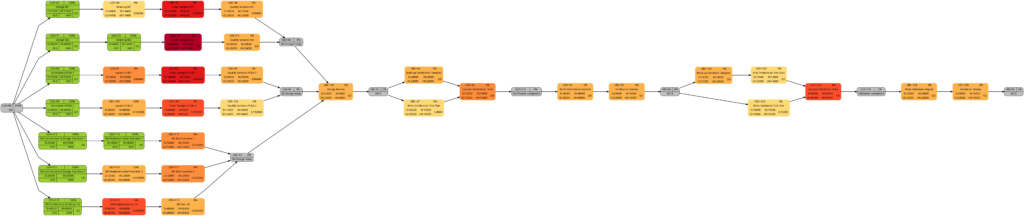

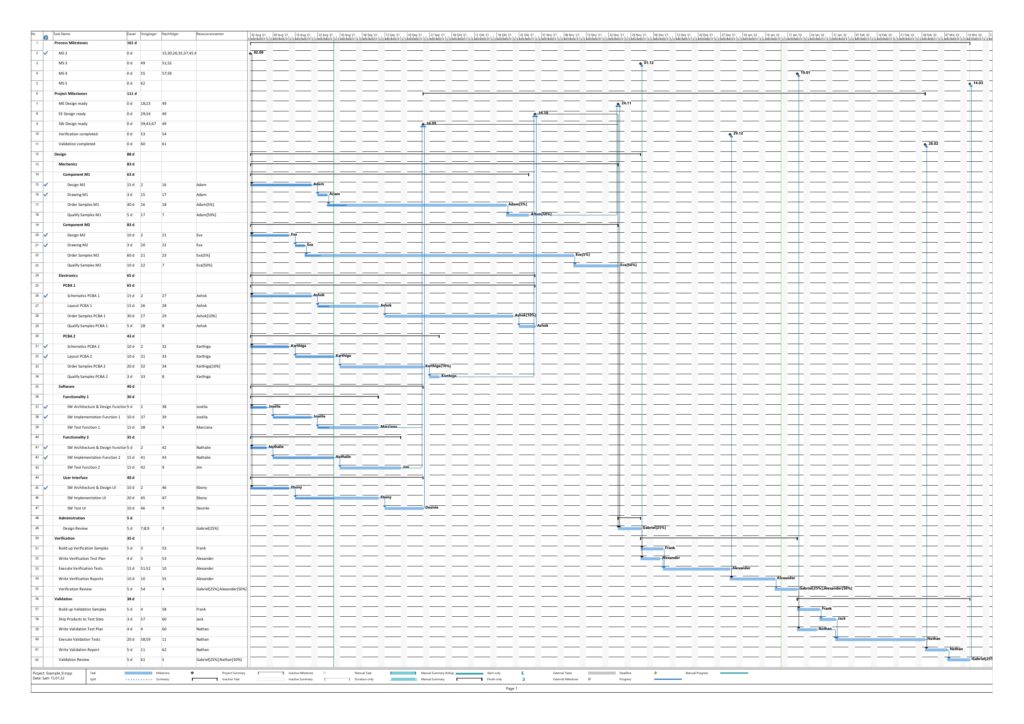

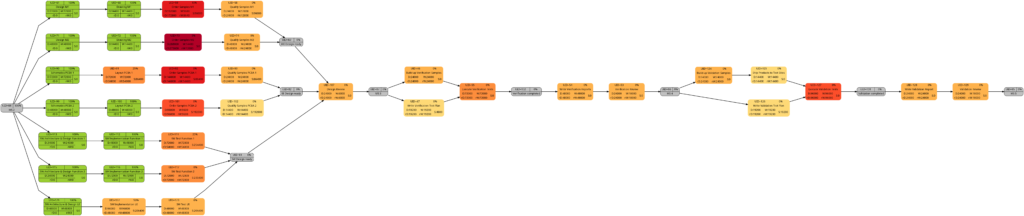

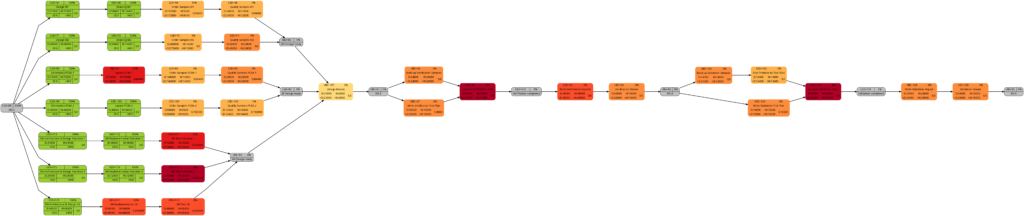

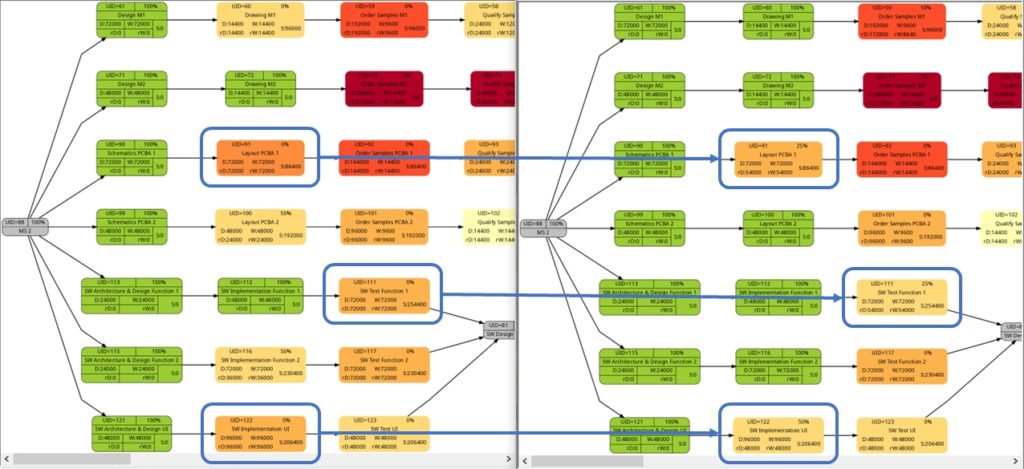

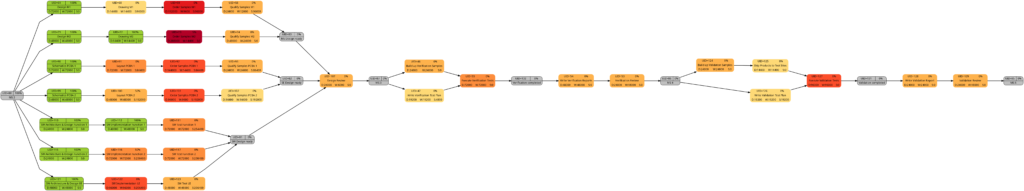

Example 8: A real-world example

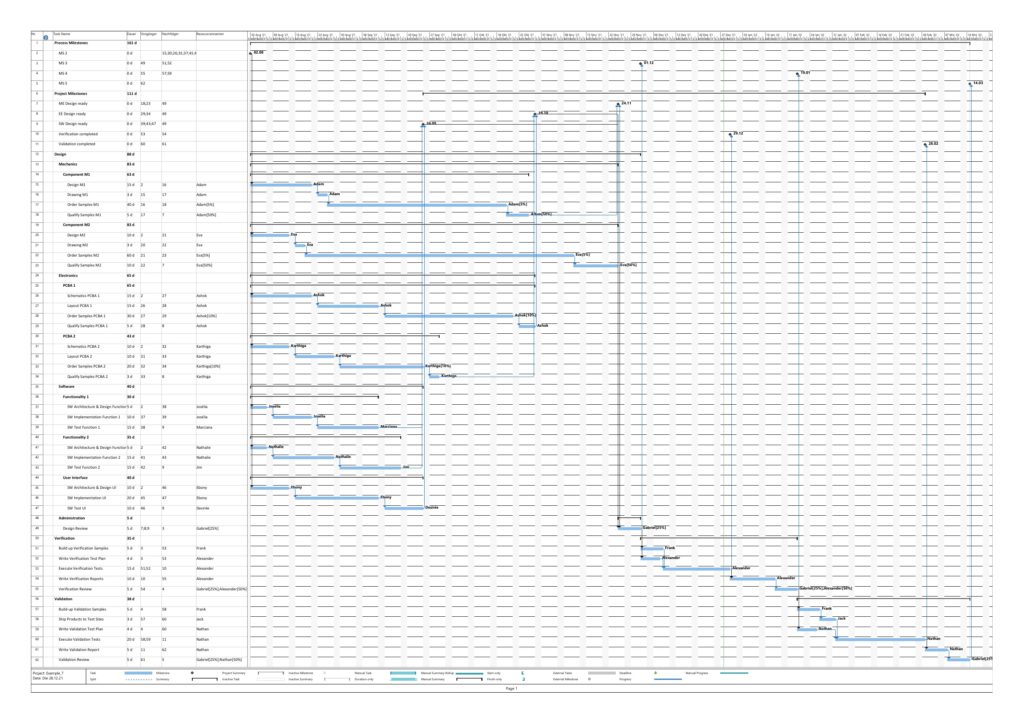

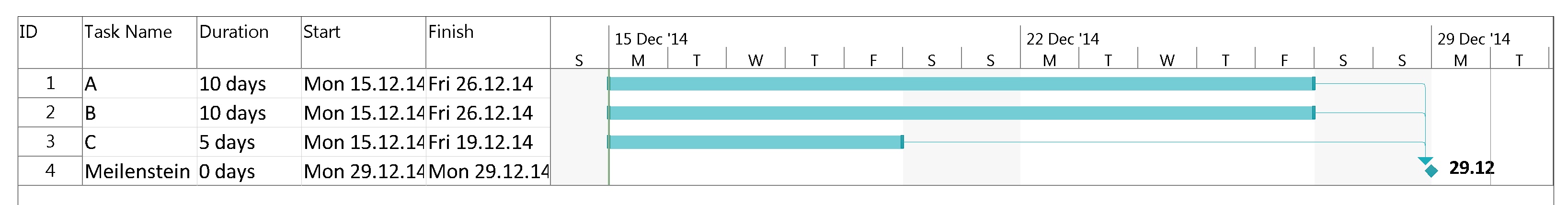

Example 8 was already mentioned in the blog post Colored Network Diagrams (Part 1). It is a fictious simplified project plan with development, verification, and validation activities where some tasks have already been completed. The green vertical line in the plan shows the status date.

Tasks that have been completed 100% are colored in green color across all network graphs indicating that these tasks do not require any more attention in the remaining project. While the network graphs had already been shown in the blog post Colored Network Diagrams (Part 1), they shall nevertheless ben shown again as the new script mysql2dot-v2.sh shows additional information (remaining duration, remaining work) for each task. For completed tasks, those values as well as total task slack are 0, of course.

The new script mysql2dot-v2.sh offers 3 additional graphs (menu choices 5…7):

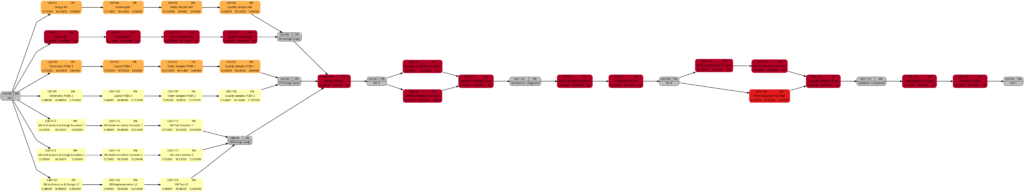

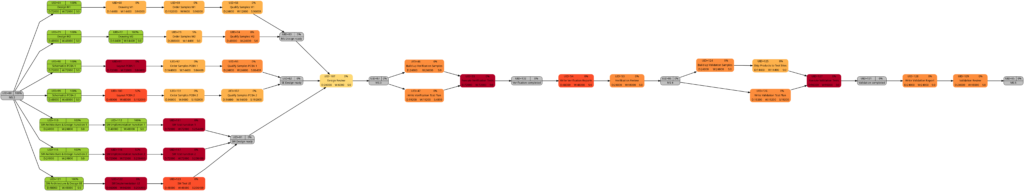

Let us have a closer look at these graphs and compare some of them:

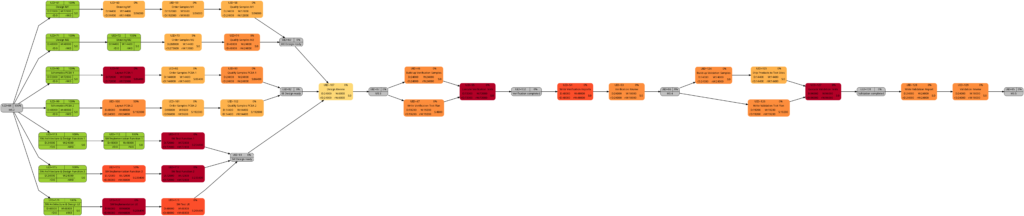

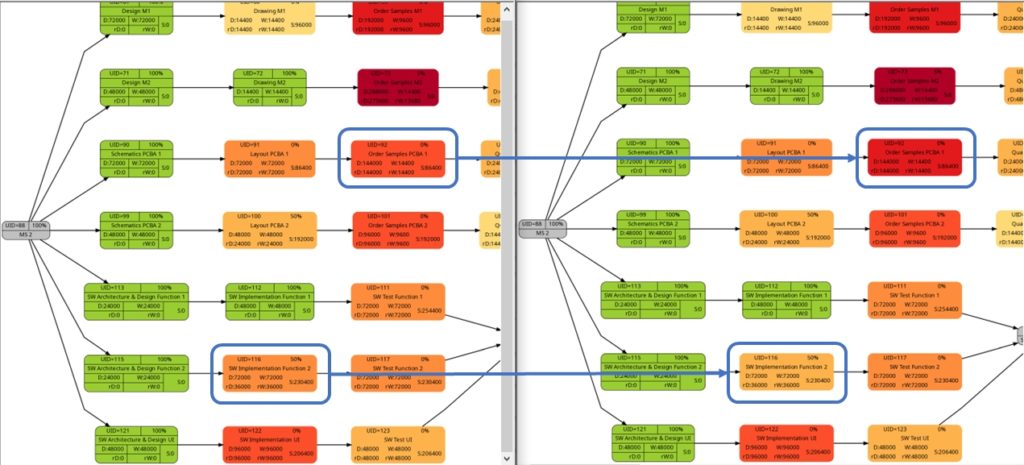

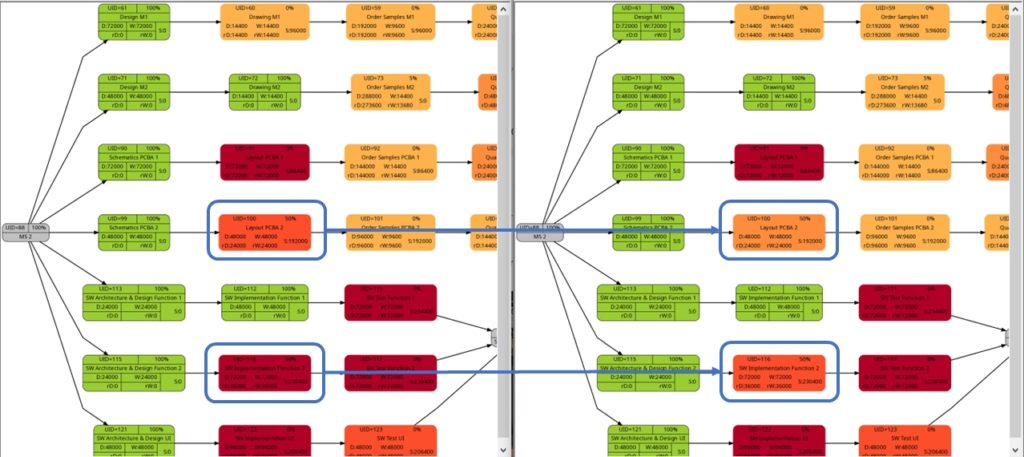

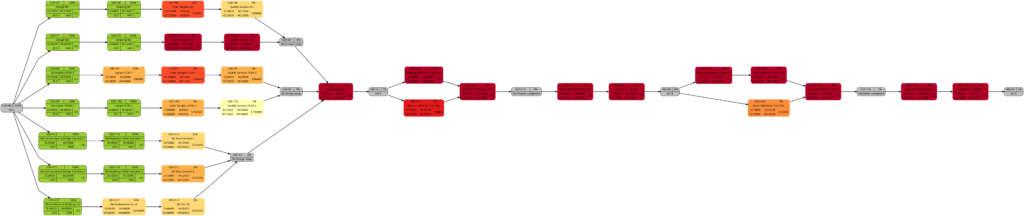

The comparison of the graphs above show two tasks whose colors have changed in different directions of color shades:

- The task with UID=116 changes to a lighter color shade. This is because the left graph takes the duration into account (D=72000), and the right graph takes the remaining duration into account (rD=36000). As the remaining duration is less than the duration, we can expect that change in the color shade.

- The task with UID=92 changes to a darker color shade although the values for the duration (D=14400) and the remaining duration (rD=14400) are equal. Why is that?

The explanation is that the spread between the color shades (the variable ${color_step} in the script mysql2dot-v2.sh) is computed based on the maximum remaining duration in the graph on the right side, different from the maximum duration in the graph on the left side. Consequently, if there is a task with a very long duration in the left graph and this task has a significantly lower remaining duration, this might occur in a smaller spread (a smaller value of ${color_step}) when the network graph for the remaining duration is computed.

I am personally open to the discussion of whether a different spread as seen in example (2) makes sense or not. For the moment, I decided to proceed like that. But there are valid arguments for both approaches:

- Leaving the spread equal between both graphs makes them directly comparable 1:1. This might be important for users who regularly compare the network graph colored according to task duration with the one colored according to remaining task duration.

- Computing the spread from scratch for each graph sets a clear focus on the tasks which should be observed now because they have a long remaining duration, irrespective of whether the tasks once in the past had a longer or shorter duration.

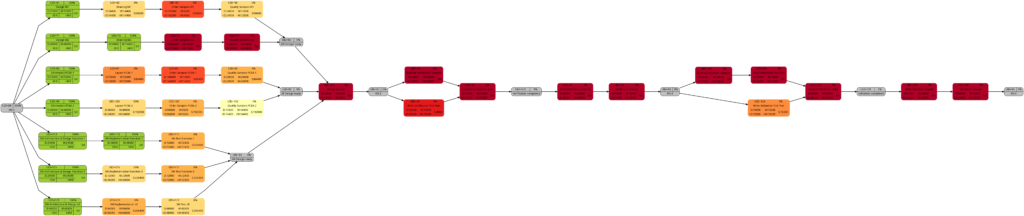

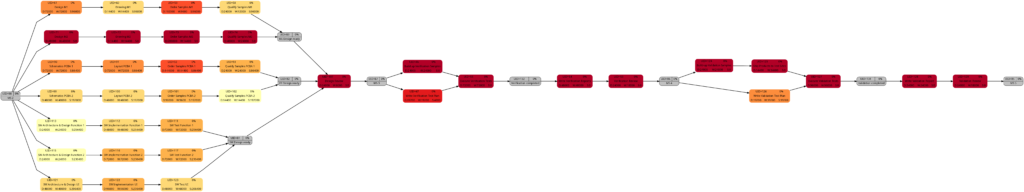

The shift of color shades in the graph above seems logical and corresponds to the case (1) of the previous example. For both the tasks with UID=100 and UID=116, the value of the remaining task work is half the value of the task work, and therefore, in the network graph for the remaining task work, both tasks feature lighter color shades as they are “less problematic”.

As already explained in the blog post Colored Network Diagrams (Part 1), criticality is computed based on the information of task slack and task duration. Whereas the script mysql2dot.sh used the task finish slack for this calculations, I decided to change to task total slack in the newer script mysql2dot-v2.sh; that seemed to be more adequate although in my examples, both values have been the same for all involved tasks.

In both versions of the scripts, logarithmic calculations are undertaken, and their outcome is different from the calculations based on the square root for the network graphs according to (remaining) task durations. As a result, we only observe a color shift in the task with UID=116. As the remaining task duration is half of the task duration, this task is less critical (hence, a lighter color shade) on the right side of the image above.

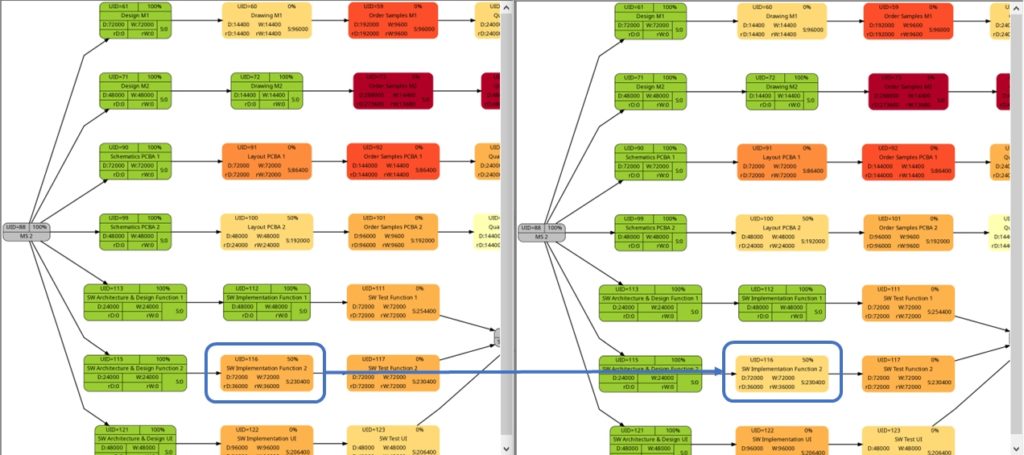

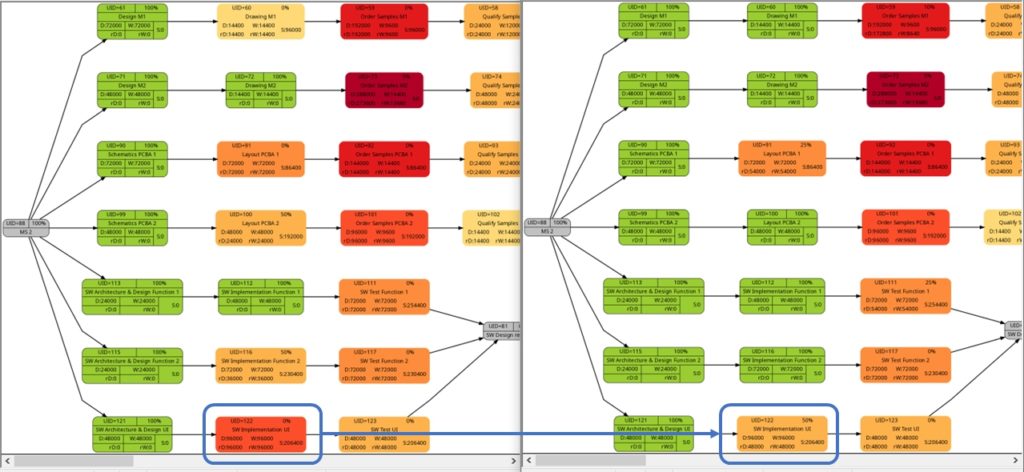

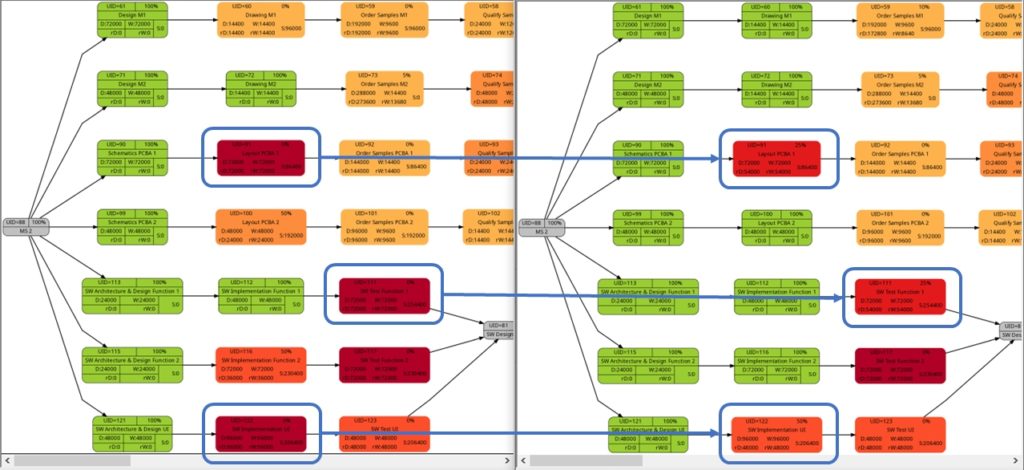

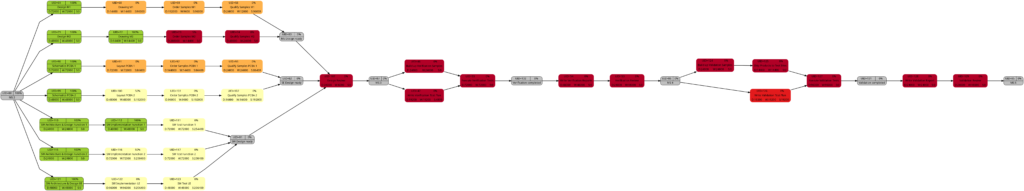

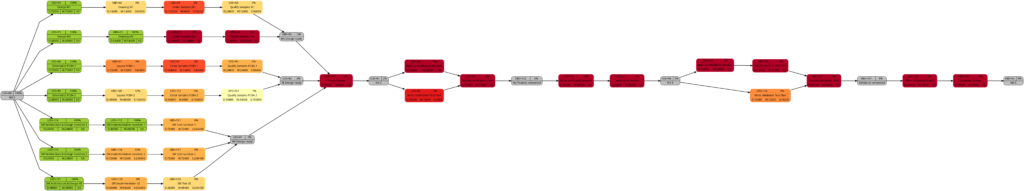

Example 9: Same project plan (more in the future)

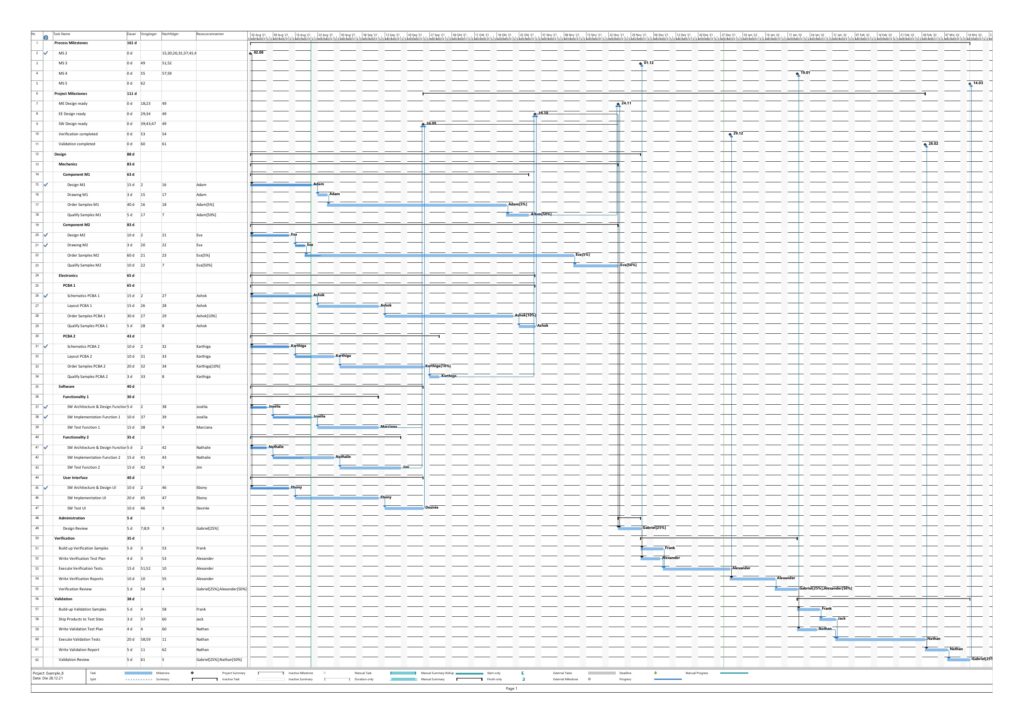

Jumping to the future, more tasks of the same project plan have been completed either entirely or partially. Again, the green vertical line in the plan shows the status date.

The resulting graphs are:

We will now look at some of the graphs and compare them with the equivalent graphs in Example 8. Apart from tasks being colored in green color once they have been completed, there are only differences in color shades of tasks belonging to the network graphs of:

- remaining task duration

- remaining task work

- remaining task criticality

as completed tasks have their remaining values set to 0 and therefore diminish the set of tasks that are considered for the calculation. In contrast to this, there are no changes in the network graphs of:

- task duration

- task work

as the respective values do not change for completed tasks. We could, however, in theory experience changes in the network graphs of:

- task slack

- task criticality

as complete tasks have their slack values set to 0 and therefore diminish the set of tasks that are considered for the calculation. This was not the case in our examples, though. It should also be mentioned that there might be changes in the color shade for a task where the duration or work is increased due to a revised assessment of the project manager from one status date to the other.

As mentioned above, the changes in the color shade of the highlighted tasks in the comparisons above are due to the fact that either the remaining duration or the remaining work of the respective tasks change between the earlier and the later status date.

Conclusion

The scripts create_project_db-v2.sql, msp2mysql-v2.sh and mysql2dot-v2.sh and the examples provided above show how, using the powerful graphviz package, traditional project plans created with Microsoft® Project can be visualized in a set of graphs that can help a project manager to focus on the right tasks. Compared to the set of scripts in the blog post Colored Network Diagrams (Part 1), the improved set of scripts additionally allow the examination of the task duration, task value, and task criticality based on remaining values of the tasks, and so can answer the question “How does the project look now?” more adequately.

Outlook

In the near future, I plan to rewrite the script mysql2dot-v2.sh into PHP because I want to incorporate an algorithm which I developed back in 2010 that can show the first x critical paths of the project using a recursive PHP function and several large multi-dimensional arrays.

Sources

Files

- create_project_db-v2.sql sets up the database in MySQL or MariaDB. The script works with the user gabriel who assumes to have access to the database server without password; you might have to adapt the script to your environment and needs.

- msp2mysql-v2.sh reads a Microsoft® Project plan in XML, parses it and writes the data into the MariaDB database.

- mysql2dot-v2.sh reads from the MariaDB database and creates a script for dot, a tool of the graphviz suite.

- Example_8-v2.zip contains all files with respect to Example 8 (created with the current set of scripts).

- Example_9-v2.zip contains all files with respect to Example 9.

Disclaimer

- The program code and the examples are for demonstration purposes only.

- The program code shall not be used in production environments without further modifications.

- The program code has not been optimized for speed (It’s a bash script anyway, so do not expect miracles.).

- The program code has not been written with cybersecurity aspects in mind.

- While the program code has been tested, it might still contain errors.

- Only a subset of all possibilities in [1] has been used, and the code does not claim to adhere completely to [1].

Colored Network Diagrams (Part 1)

Executive Summary

This blog post offers a method to create colored network graphs (PERT charts) from project plans that are created with Microsoft® Project. Color schemes similar to the ones used in physical maps visualize those tasks which:

- … have long durations

- … have a high amount work

- … have little slack

- … have a high criticality

The blog post shall also encourage readers to think outside of the possibilities that established project management software offers and to define for yourself which tasks are critical or relevant for you and how this information shall be determined.

Preconditions

In order to use the approach described here, you should:

- … have access to a Linux machine or account

- … have a MySQL or MariaDB database server set up, running, and have access to it

- … have the package graphviz installed

- … have some basic knowledge of how to operate in a Linux environment and some basic understanding of shell scripts

Description and Usage

The base for the colored network graphs is a plan in Microsoft® Project which needs to be stored as XML file. The XML file is readable with a text editor (e.g., UltraEdit) and follows the syntax in [1]. The XML file is then parsed, the relevant information is captured and is stored in a database (Step 1). Then, selected information is retrieved from the database and written to a script in dot language which subsequently is transformed into a graph using the package graphviz (Step 2). Storing the data in a database rather than in variables allows us to use all the computational methods which the database server offers in order to select, aggregate, and combine data from the project plan. It also allows us to implement Step 2 in PHP so that graphs can be generated and displayed within a web site; this could be beneficial for a web-based project information system.

Step 0: Setting up the Database

At the beginning, a database needs to be set up in the database server. For this, you will probably need administrative access to the database server. The following SQL script sets up the database and grants access rights to the user gabriel. Of course, you need to adapt this to your needs on the system you work on.

# Delete existing databases

REVOKE ALL ON projects.* FROM 'gabriel';

DROP DATABASE projects;

# Create a new database

CREATE DATABASE projects;

GRANT ALL ON projects.* TO 'gabriel';

USE projects;

CREATE TABLE projects (proj_uid SMALLINT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY,\

proj_name VARCHAR(80)) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE tasks (proj_uid SMALLINT UNSIGNED NOT NULL,\

task_uid SMALLINT UNSIGNED NOT NULL,\

task_name VARCHAR(150),\

is_summary_task BOOLEAN DEFAULT FALSE NOT NULL,\

is_milestone BOOLEAN DEFAULT FALSE NOT NULL,\

is_critical BOOLEAN DEFAULT FALSE NOT NULL,\

task_start DATETIME NOT NULL,\

task_finish DATETIME NOT NULL,\

task_duration INT UNSIGNED DEFAULT 0,\

task_work INT UNSIGNED DEFAULT 0,\

task_slack INT DEFAULT 0,\

rem_duration INT UNSIGNED DEFAULT 0,\

rem_work INT UNSIGNED DEFAULT 0,\

`%_complete` SMALLINT UNSIGNED DEFAULT 0,\

PRIMARY KEY (proj_uid, task_uid)) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE task_links (proj_uid SMALLINT UNSIGNED NOT NULL,\

predecessor SMALLINT UNSIGNED NOT NULL,\

successor SMALLINT UNSIGNED NOT NULL) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE resources (proj_uid SMALLINT UNSIGNED NOT NULL,\

resource_uid SMALLINT UNSIGNED NOT NULL,\

calendar_uid SMALLINT UNSIGNED NOT NULL,\

resource_units DECIMAL(3,2) DEFAULT 1.00,\

resource_name VARCHAR(100),\

PRIMARY KEY (proj_uid, resource_uid)) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE assignments (proj_uid SMALLINT UNSIGNED NOT NULL,\

task_uid SMALLINT UNSIGNED NOT NULL,\

resource_uid SMALLINT UNSIGNED NOT NULL,\

assignment_uid SMALLINT UNSIGNED NOT NULL,\

assignment_start DATETIME NOT NULL,\

assignment_finish DATETIME NOT NULL,\

assignment_work INT UNSIGNED DEFAULT 0,\

PRIMARY KEY (proj_uid, task_uid, resource_uid)) ENGINE=MyISAM DEFAULT CHARSET=utf8;

CREATE TABLE assignment_data (proj_uid SMALLINT UNSIGNED NOT NULL,\

assignment_uid SMALLINT UNSIGNED NOT NULL,\

assignment_start DATETIME NOT NULL,\

assignment_finish DATETIME NOT NULL,\

assignment_work INT UNSIGNED DEFAULT 0) ENGINE=MyISAM DEFAULT CHARSET=utf8;

This SQL script sets up a database with several tables. The database can hold several project plans, but they all must have distinctive names. This SQL script only catches a subset of all information which is part of a Microsoft® Project plan in XML format; it does not capture calendar data, for example, nor do the data types in the SQL script necessarily match the ones from the specification in [1]. But it is good enough to start with colored network graphs.

This only needs to be executed one time. Once the database has been set up properly, we only have to execute Step 1 and Step 2.

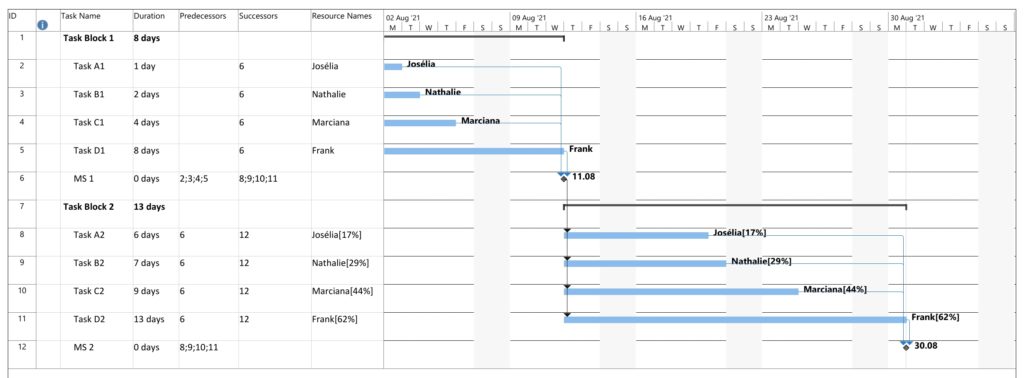

Step 1: Parsing the Project Plan

The first real step is to parse the Microsoft® Project plan in XML format; this is done with the bash script msp2mysql.sh. This program contains a finite-state machine (see graph below), and the transitions between the states are XML tags (opening ones and closing ones). This approach helps to keep the code base small and easily maintainable. Furthermore, the code base can be enlarged if more XML tags or additional details of the project plan shall be considered.

I concede there are more elegant and faster ways to parse XML data, but I believe that msp2mysql.sh can be understood well and helps to foster an understanding of what needs to be done in Step 1.

There is one important limitation in the program that needs to be mentioned: The function iso2msp () transforms a duration in ISO 8601 format to a duration described by multiples of 6 s (6 s are an old Microsoft® Project “unit” of a time duration, Microsoft® Project would traditionally only calculate with 0.1 min of resolution). However, as you can see in [2], durations cannot only be measured in seconds, minutes, and hours, but also in days, months, etc. A duration of 1 month is a different amount of days, depending on the month we are looking at. This is something that has not been considered in this program; in fact, it can only transform durations given in hours, minutes and seconds to multiples of 6 s. Fortunately, this captures most durations used by Microsoft® Project, even durations that span over multiple days (which are mostly still given in hours in the Microsoft® Project XML file).

While msp2mysql.sh parses the Microsoft® Project plan in XML format, it typically runs through these steps:

- It extracts the name of the project plan and checks if there is already a project plan with this name in the database. If that is the case, the program terminates. If that is not the case, the project name is stored in the table projects, and a new proj_uid is allocated.

- It extracts details of the tasks and stored them in the tables tasks and task_links.

- It extracts the resource names and stores their details in the table resources.

- It extracts assignments (of resources to tasks) and stores them in the tables assignments and assignment_data.

msp2mysql.sh is called with the Microsoft® Project plan in XML format as argument, like this:

./msp2mysql.sh /home/tmp/Example_1.xmlStep 2: Generating a Graph

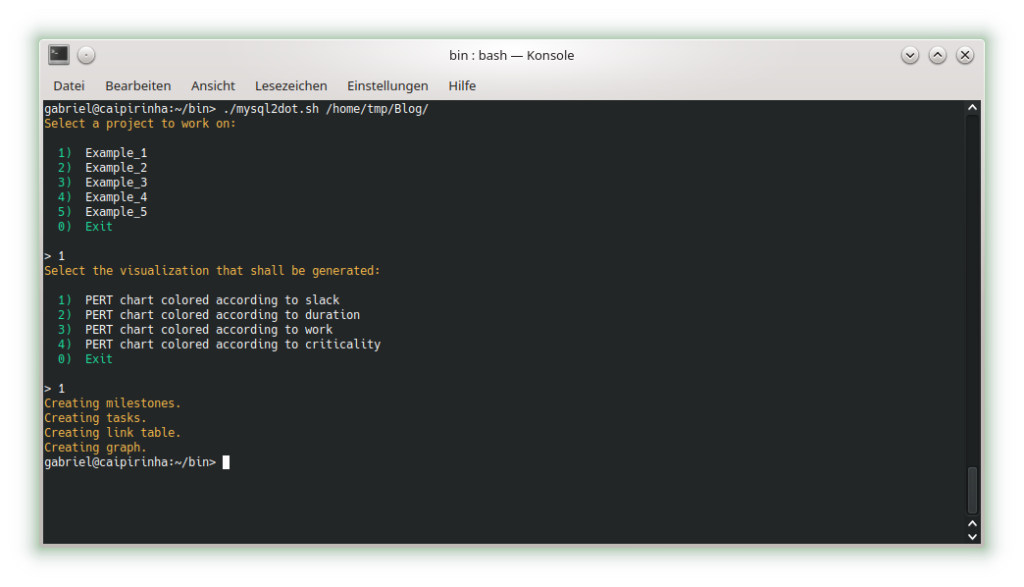

The second step is to extract meaningful data from the database and transform this into a colored network graph; this is done with the bash script mysql2dot.sh. mysql2dot.sh can create colored network graphs according to:

- … the task duration

- … the work allocated to a task

- … the task slack

- … the criticality of a task

mysql2dot.sh relies on a working installation of the graphviz package, and in fact, it is graphviz and its collection of tools that create the graph, while mysql2dot.sh creates a script for graphviz in the dot language. Let us look close to some aspects:

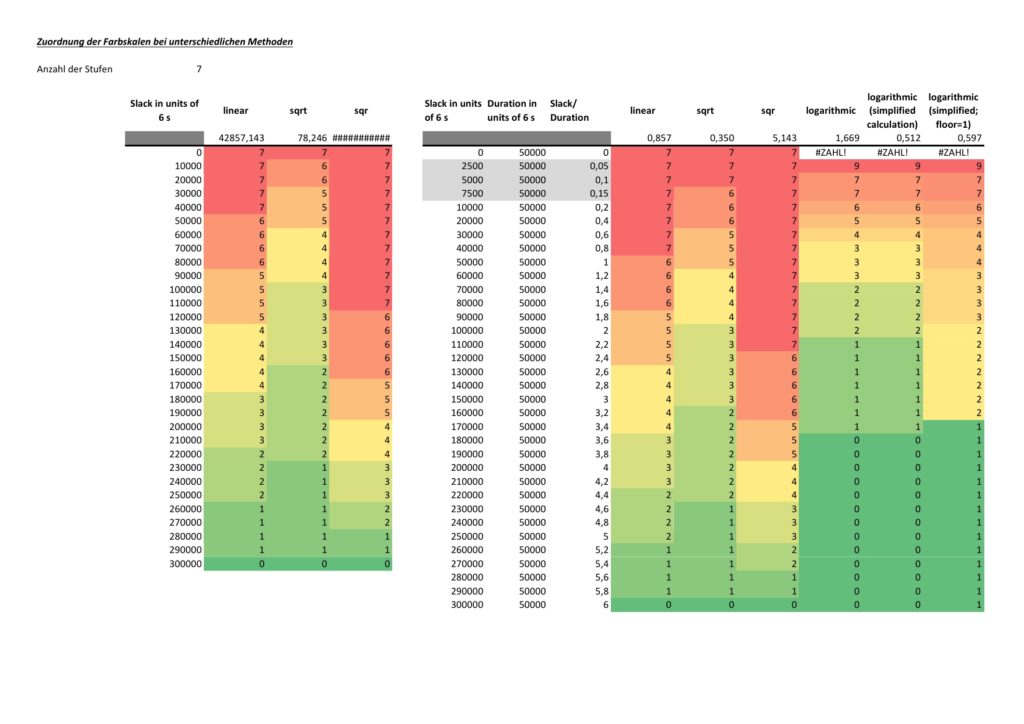

mysql2dot.sh uses an uneven scale of colors, and the smallest scale should be three or more colors. The constant NUM_COLSTEPS sets the number of colors, in our case 7 colors. The constant COLSCHEME defines the graphviz color scheme that shall be used, in our case this is “ylorrd7”. [3] shows some really beautiful color schemes. The ColorBrewer schemes are well suited for our kind of purpose (or for physical maps); however, the license conditions ([4]) must be observed.

mysql2dot.sh is invoked with the name of a directory as argument. The generated script(s) in dot language as well as the generated graph(s) will be stored in that directory. A typical call looks like this:

./mysql2dot.sh /home/tmp/When started, mysql2dot.sh retrieves a list of all projects from the database and displays them to the user who then has to select a project or choose the option Exit. When a project plan has been selected, the user has to choose the graph that shall be generated. Subsequently, the program starts to generate a script in dot language and invokes the program dot (a tool of the graphviz suite) in order to compute the network graph.

Different project plans typically have different ranges of durations, work or slack for all of their tasks. mysql2dot.sh therefore takes into account the maximum duration, the maximum work, or the maximum slack it can find in the whole project. Initially, I used a linear scale to distribute the colors over the whole range from [0; max_value], but after looking at some real project plans, I personally found that too many tasks would then be colored in darker colors and I thought that this would rather distract a project manager from focusing on the few tasks that really need to be monitored closely. Trying out linear, square and square root approaches, I finally decided for the square root approach which uses the darker colors only when the slack is very small, the workload is very high, or the duration is very long. Consequently, the focus of the project manager is guided to the few tasks that really deserve special attention. However, this does not mean that this approach is the only and correct way to do it. Feel free to experience with different scaling methods to tune the outcome to your preferences.

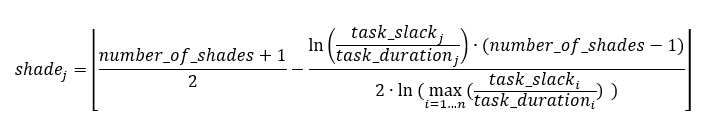

The network graph colored according to task criticality follows a different approach. Task criticality is derived from the ration of task slack and task duration. Tasks which have a low ratio of task slack / task duration are defined as more critical than tasks where this ratio is higher. I also experienced with the square root approach mentioned above, but that seemed to result in different criticalities depending on the maximum ratio of task slack / task duration which seemed unsatisfactory to me. Finally, I opted for a logarithmic approach which puts the ration task slack / task duration = 1,0 to the middle value of NUM_COLSTEPS. So in the current case, a ratio of task slack / task duration = 1,0 (means: task slack = task_duration) would result in a value of 4.0. If NUM_COLSTEPS was 9 rather than 7, the result would be 5.0. This can be reached by the formula:

where:

- n is the number of tasks that are neither a milestone nor a summary task and that have a non-zero duration

- j is the task for which we determine the level of shade

- number_of_shades is the number of different shades you want to have in the graph; this should be an impair number and it should be 3 at least

- shadej is the numerical value of the shade for task j

- task_slackj is the task slack of task j

- task_durationj is the duration of task j

Examples

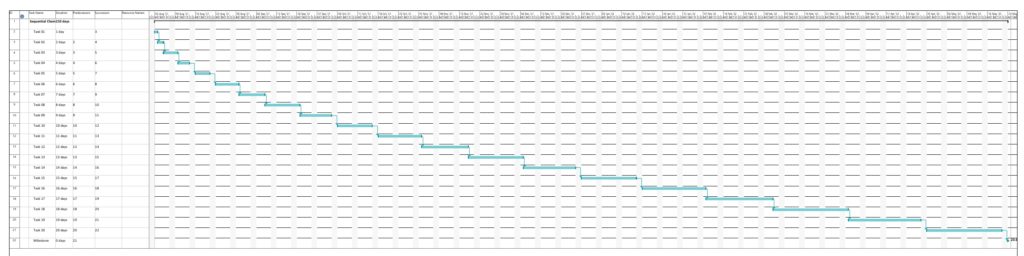

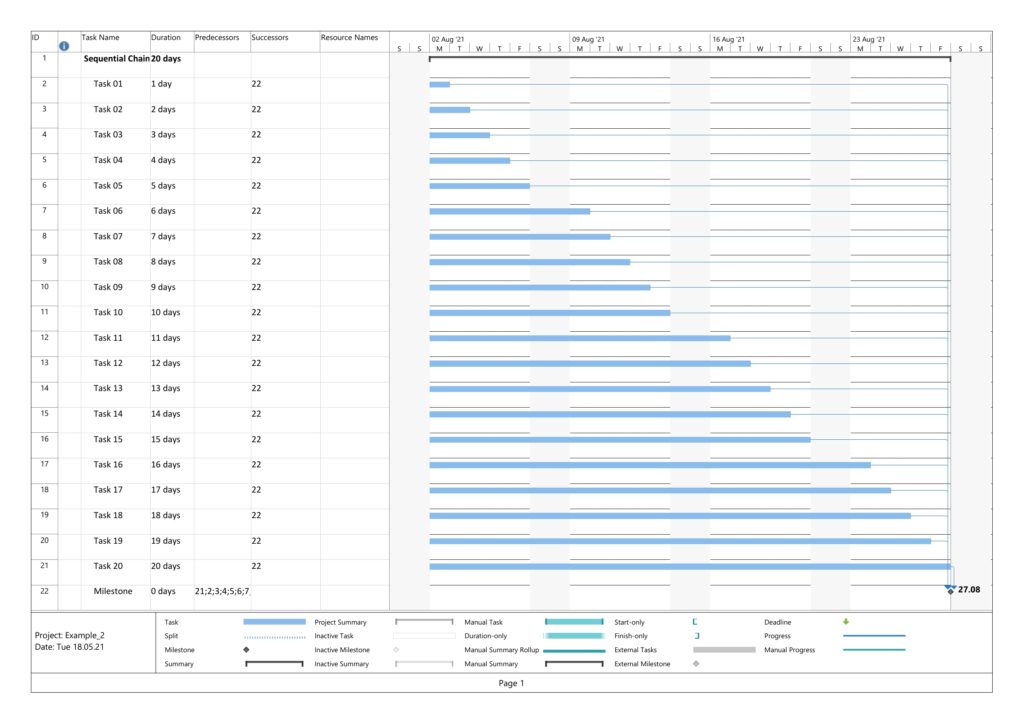

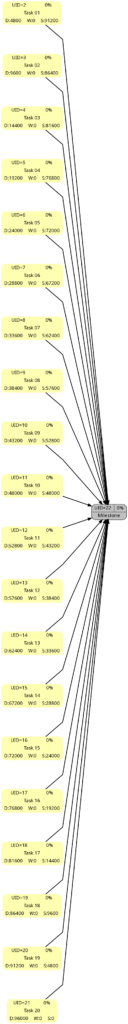

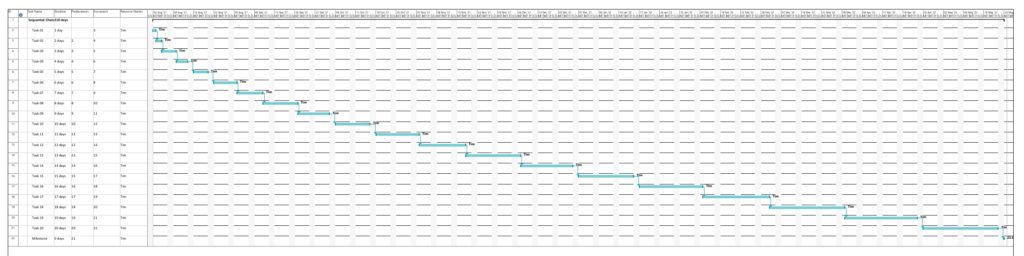

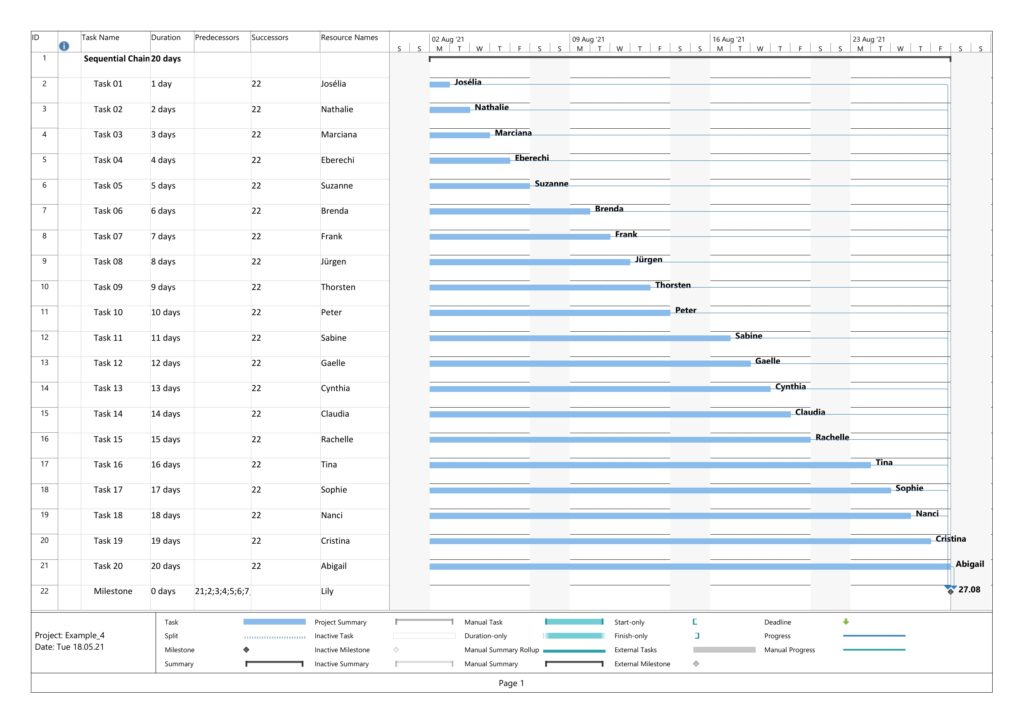

Example 1: Sequential Task Chain

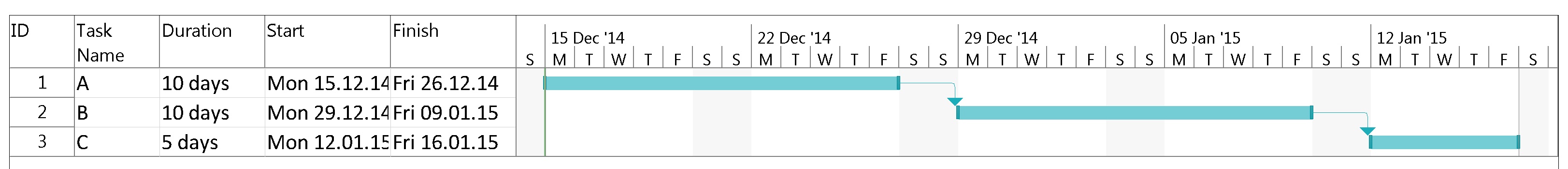

This example is a sequential task chain with 20 tasks; each task is +1d longer than the previous task.

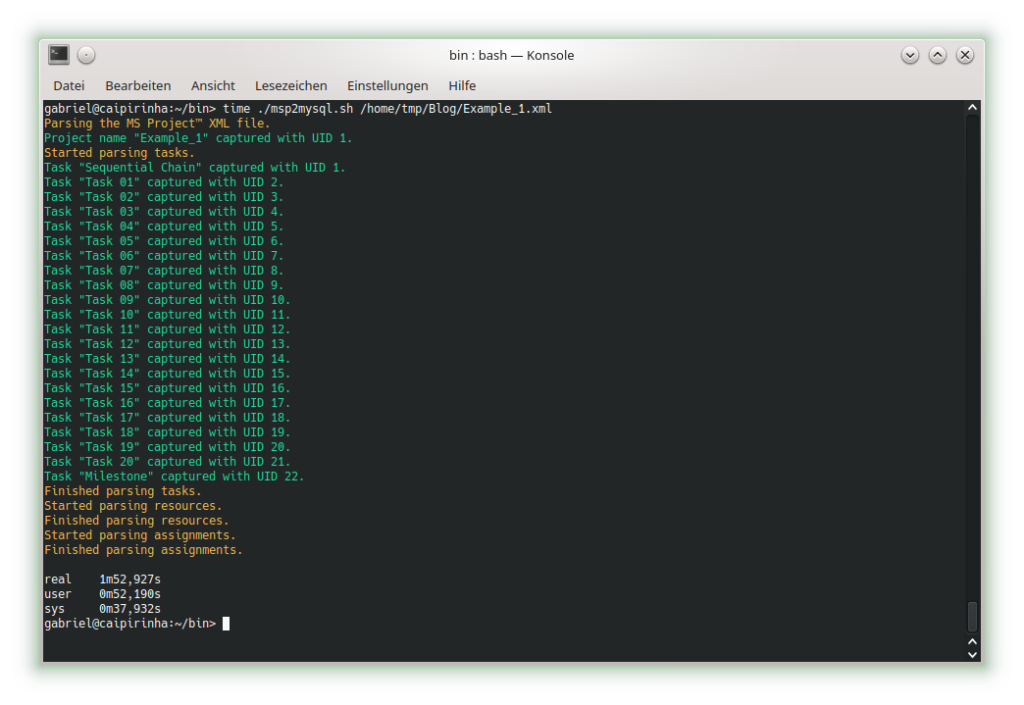

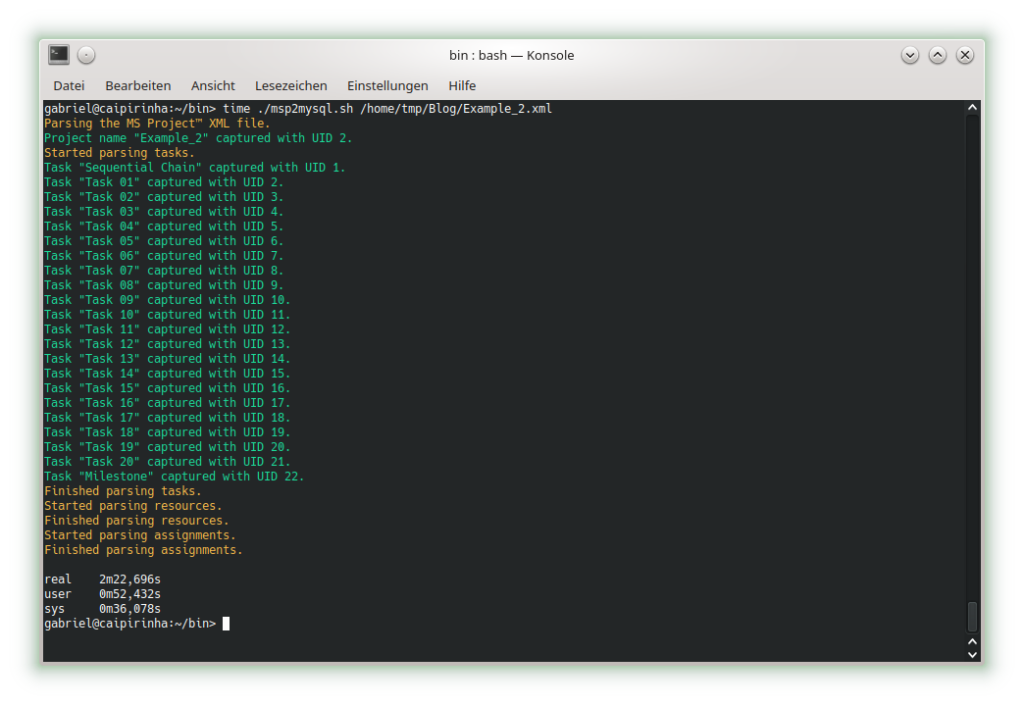

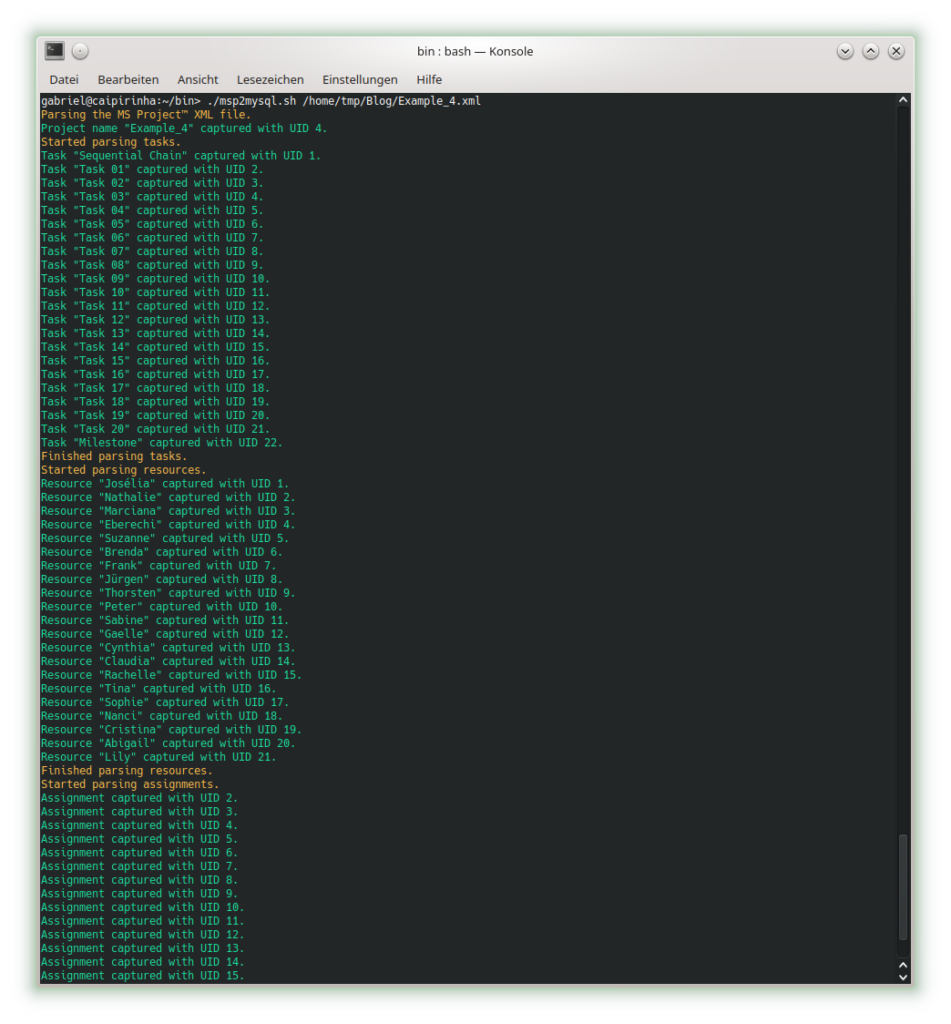

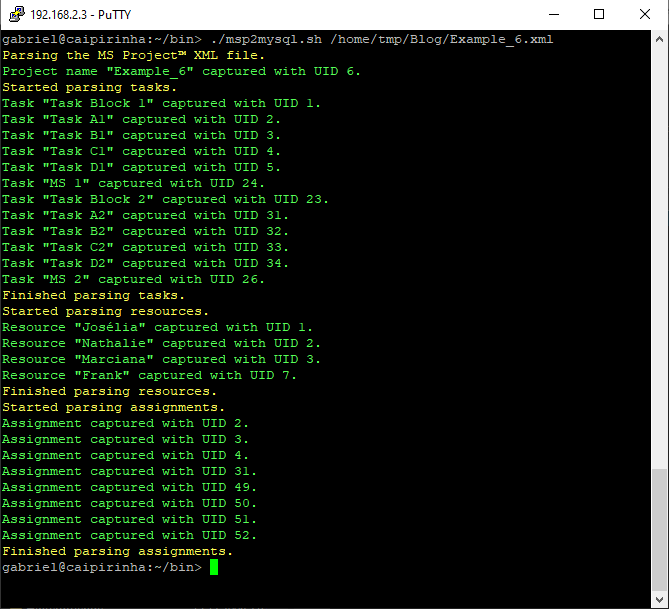

In this example, no resources have been assigned yet. As all tasks are in sequence, there is obviously no slack in any task, and all tasks are in the critical path. Parsing this project plan with msp2mysql.sh leads to:

We can already see that the shall script takes a long time for this easy project plan. For a productive environment, one would have to use C code rather than a shell script, but for educational purposes, the shell script is easier to change, understand and also to debug. Subsequently, we start creating the graphs with mysql2dot.sh, here is an example on how this looks like:

Let us look at the 4 graphs which can be generated:

The result is what could be expected. Tasks with a shorter duration are colored in a lighter color, tasks with a longer duration consequently in a darker color. While the task duration increases in a linear fashion from left to right, the graph colors tasks with a longer duration more in the “dangerous” area, that is, in a darker color.

As no resource has been attributed, no work has been registered for any of the tasks. Therefore, all tasks are equally shown in a light color.

As none of the tasks has any slack, obviously all tasks are in the critical path. Hence all of them are colored in dark color.

Similar to the graph above, all tasks are critical, and progress must be carefully monitored; hence, all tasks are colored in dark color.

Example 2: Parallel Task Chain

This example has the same tasks as Example 1, but all of them in parallel. The longest task therefore determines the overall project runtime.

In this example, too, no resources have been assigned yet. Parsing this project plan with msp2mysql.sh leads to:

The 4 graphs from this example look very different from Example 1:

The color of the tasks is the same as in Example 1; however, all tasks are in parallel.

The color of the tasks is the same as in Example 1 as we still have no work assigned to any of the tasks; however, all tasks are in parallel.

As to slack, the picture is different. Only one task (task #20) does not have any slack, all other tasks have more or less slack, and task #01 has the most slack.

Here, we can see that both tasks #19 and tasks #20 are considered to be critical. More tasks receive a darker color as compared to the graph considering slack alone.

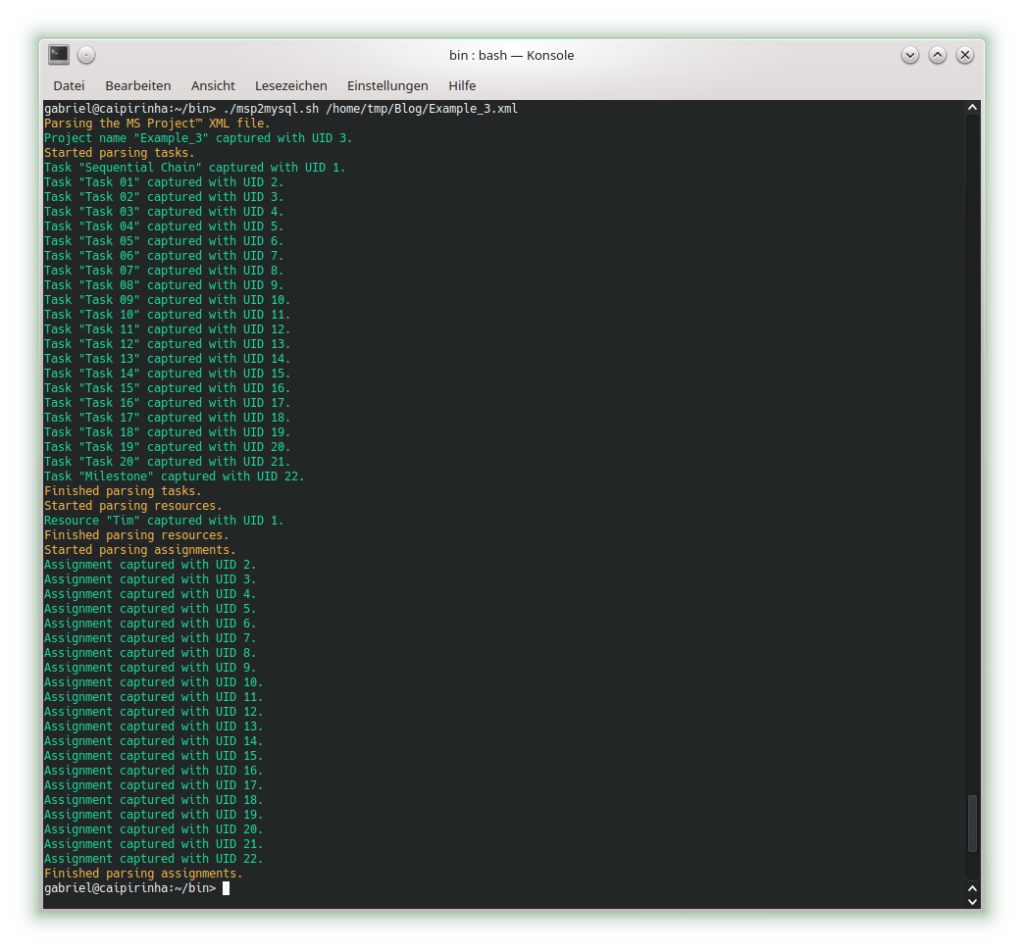

Example 3: Sequential Task Chain with Resource

This example has the same tasks as Example 1, but we assign a resource to each task (the same in this example, but this OK as all tasks are sequential, hence no overload of the resource). Consequently, work is assigned to each task, and we can expect changes in the network graph referring to task work.

Parsing this project plan with msp2mysql.sh leads to:

We can see that now (different from Example 1), resources and assignments are captured, too. Let us look at the 4 graphs from this example:

Nothing has changed compared to Example 1 as the durations are the same.

This graph now experiences the same coloring as the network graph according to task duration. That is understandable given the fact that we have a full-time resource working 100% of the time for the full task duration, hence work and duration have the same numeric values for each respective task.

Nothing has changed compared to Example 1 as still none of the tasks has any slack.

Nothing has changed compared to Example 1 as still none of the tasks has any slack. Consequently, all of them are critical.

Example 4: Parallel Task Chain with Resources

This example has the same tasks as Example 2, but we assign a resource to each task. As all tasks are parallel, we assign a new resource to each task so that there is no resource overload.

Parsing this project plan with msp2mysql.sh leads to:

We can see that now (different from Examples 2), resources and assignments are captured, too. Let us look at the 4 graphs from this example:

Nothing has changed compared to Example 2 as the durations are the same.

This graph now experiences the same coloring as the network graph according to task duration. That is understandable given the fact that we have a full-time resource working 100% of the time for the full task duration, hence work and duration have the same numeric values for each respective task.

Nothing has changed compared to Example 3; the slack of each task has remained the same.

Nothing has changed compared to Example 3; the criticality of the tasks remains the same.

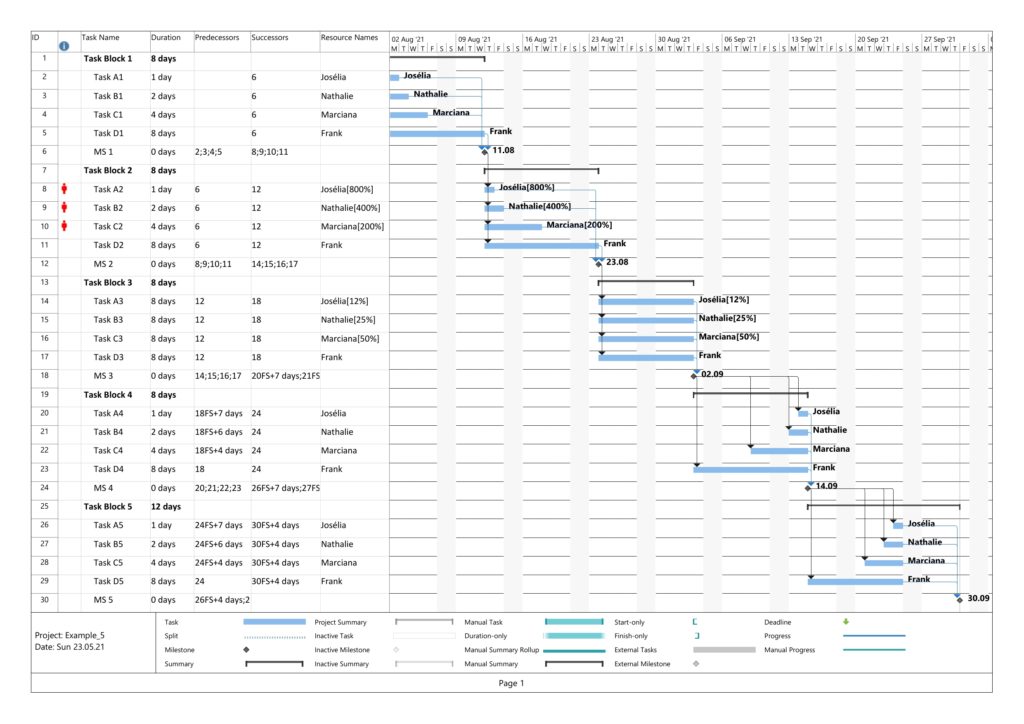

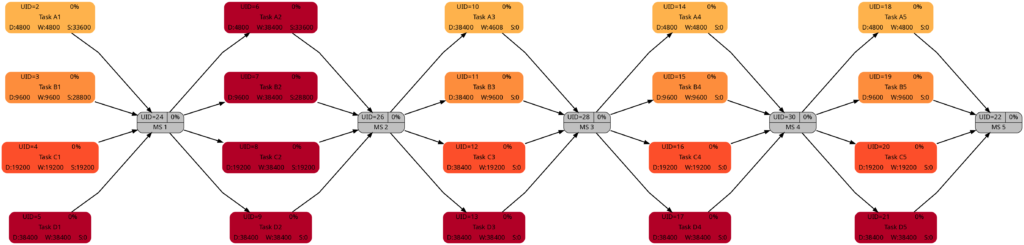

Example 5: Sequential Blocks with Parallel Tasks and with Resources

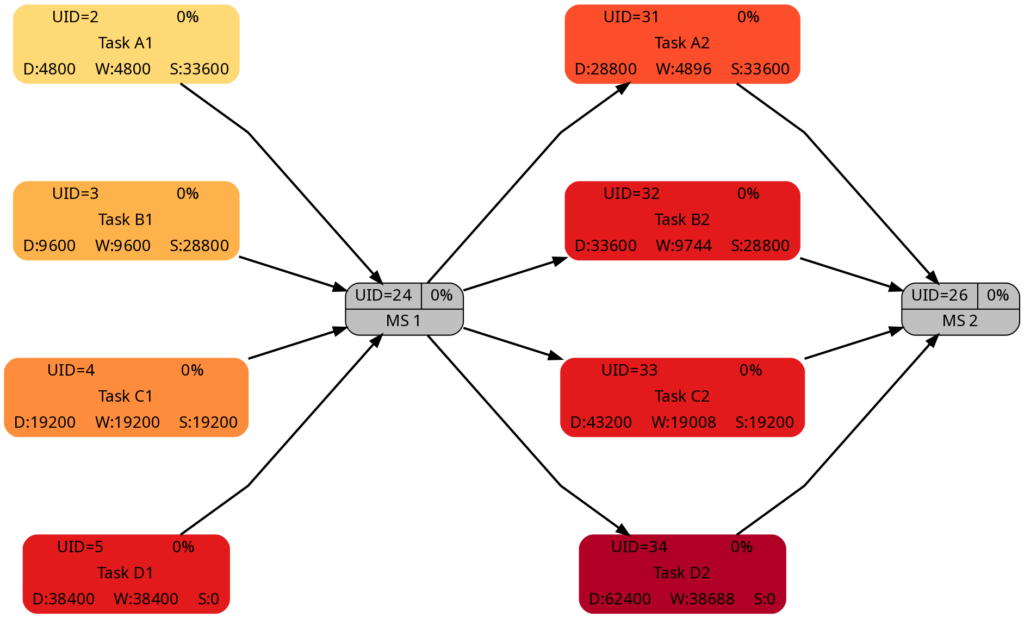

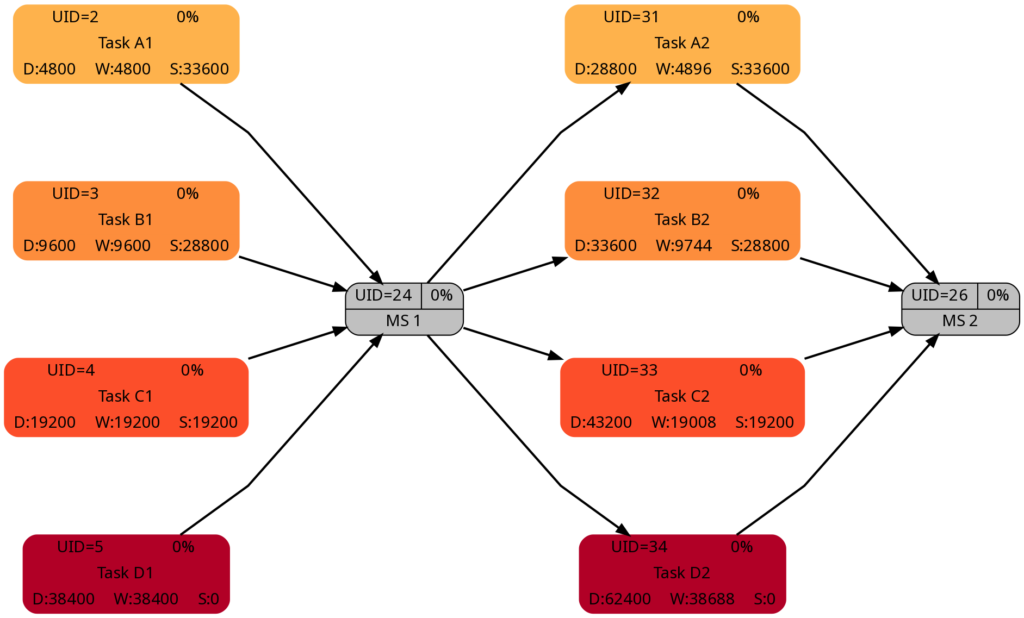

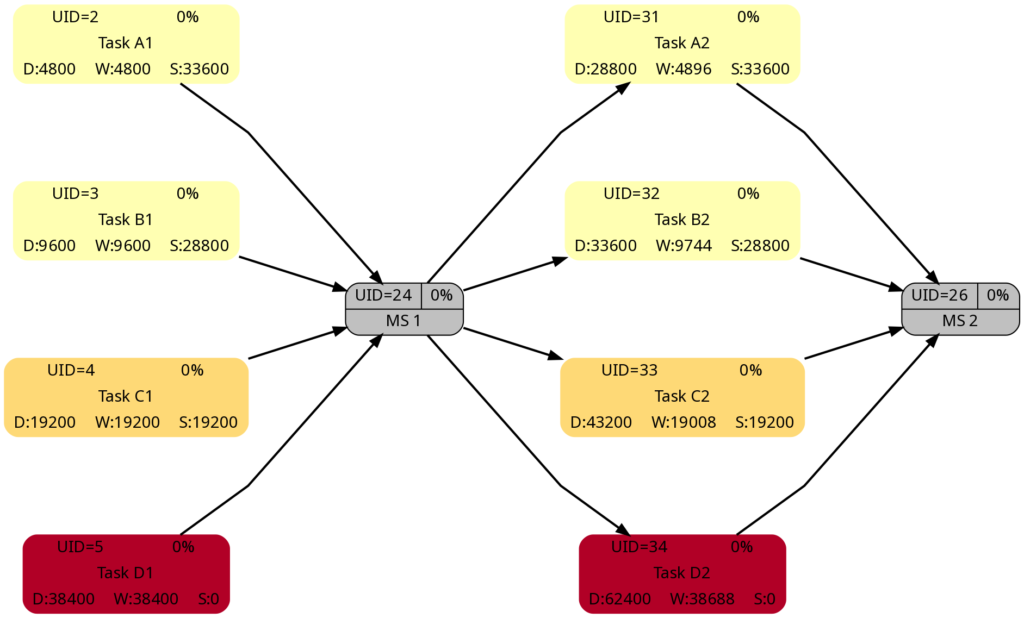

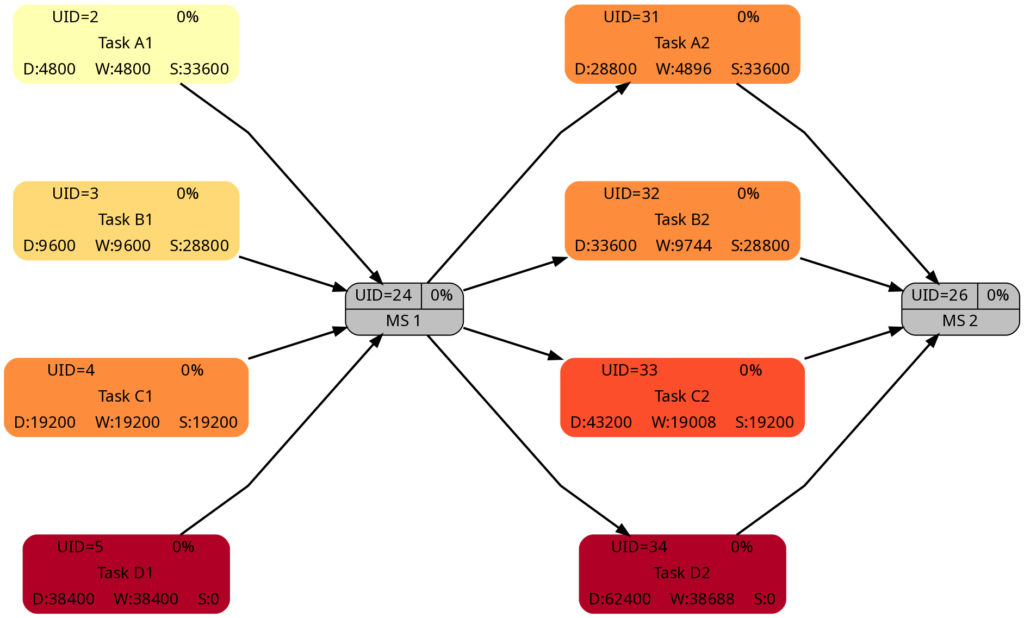

This example is the most interesting one as it combines different aspects within one project plan. We have 5 milestones, and 4 parallel tasks before each milestone. All tasks have resources assigned.

Task Block 1 contains 4 tasks of different durations, and as each task has a 100% resource allocated, also of different work. Due to the different durations, the 4 tasks also have different slack, with the longest task having zero slack.

Task Block 2 is like Task Block 1, but the work is the same for each task; this has been achieved by an over-allocation of the respective resources for 3 out of the 4 tasks.

Task Block 3 consists of 4 parallel tasks with the same duration, however with a work allocation similar to Task Block 1. None of the tasks therefore has slack, but the work for each task is different.

Task Block 4 is like Task Block 1 in terms of task duration and task work. However, the tasks have been arranged in a way so that none of the tasks has slack.

Task Block 5 is like Task Block 4, but each task has (the same amount of) lag time between the task end and the subsequent milestone. None of the tasks has any slack.

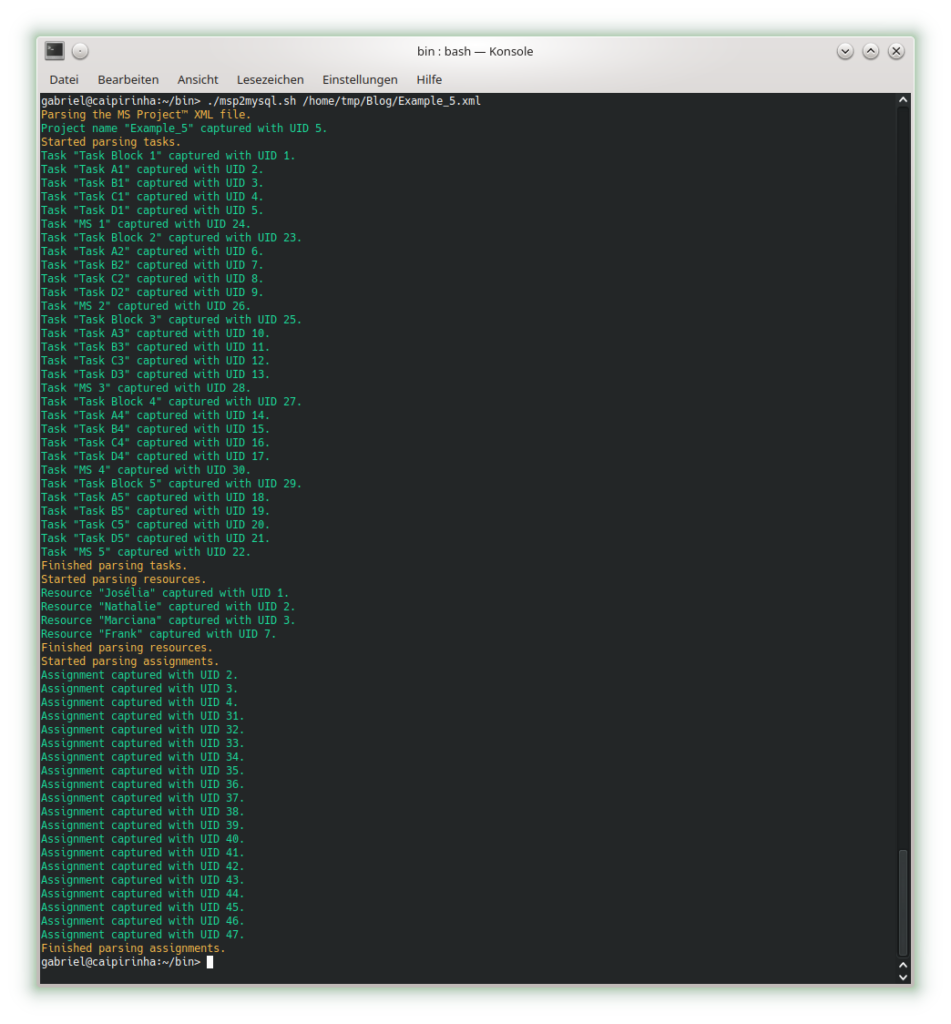

Parsing this project plan with msp2mysql.sh leads to:

Let us look at the 4 graphs from this example:

The colors in Task Block 1, 2, 4 and 5 are the same. Only in Task Block 3 where all tasks have the same (long) duration, all tasks have a dark color.

The colors in Task Block 1, 3, 4, and 5 are the same. Only in Task Block 2 where all tasks have the same amount of work (which is also the highest work a task has in this project plan), all tasks have a dark color.

The colors in Task Block 1 and 2 are the same. The tasks in Task Blocks 3, 4, and 5 are all dark as none of these tasks has any slack.

The network graph according to task criticality is similar to the one according to slack, but we can see that in Task Blocks 1 and 2 tasks get a darker color “earlier” as in the network graph according to criticality.

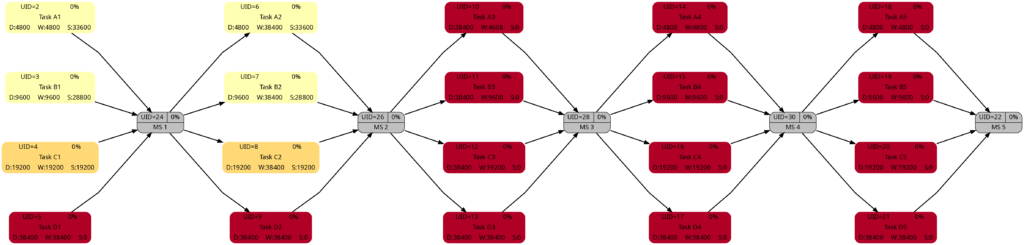

Example 6: Distinguishing Slack and Criticality

So far, we have not seen much difference between the network graphs according to slack and the one according to criticality. In this example, we have two task blocks with 4 tasks each where the slack is the same in the corresponding tasks in each task block. However, the duration is different between both task blocks. Work per task is almost the same, I tried to match the work despite the longer duration of the tasks in Task Block 2 as good as possible.

Parsing this project plan with msp2mysql.sh leads to:

Let us look at the 4 graphs from this example:

The duration of the tasks in Task Block 2 is +5d higher as in Task Block 1. Therefore, it is not surprising that the tasks in Task Block 2 are colored in darker colors.

As mentioned above, I tried to keep the amount of the work the same for corresponding tasks in Task Block 1 and 2 (which can also be seen in the numeric values for the work), and consequently, the colors of corresponding tasks match.

The slack of corresponding tasks in Task Block 1 and 2 is the same as the numeric values how. And so are the colors.

Despite the same slack of corresponding tasks in Task Block 1 and 2, the algorithm rates the criticality of the tasks in Task Block 2 higher as in Task Block 1. This is because the computation of “criticality” is based on the ratio of slack and duration. Out of two tasks with the same slack, the one with a longer duration is rated more critical as relative deviations in the duration have a higher probability to consume the free slack.

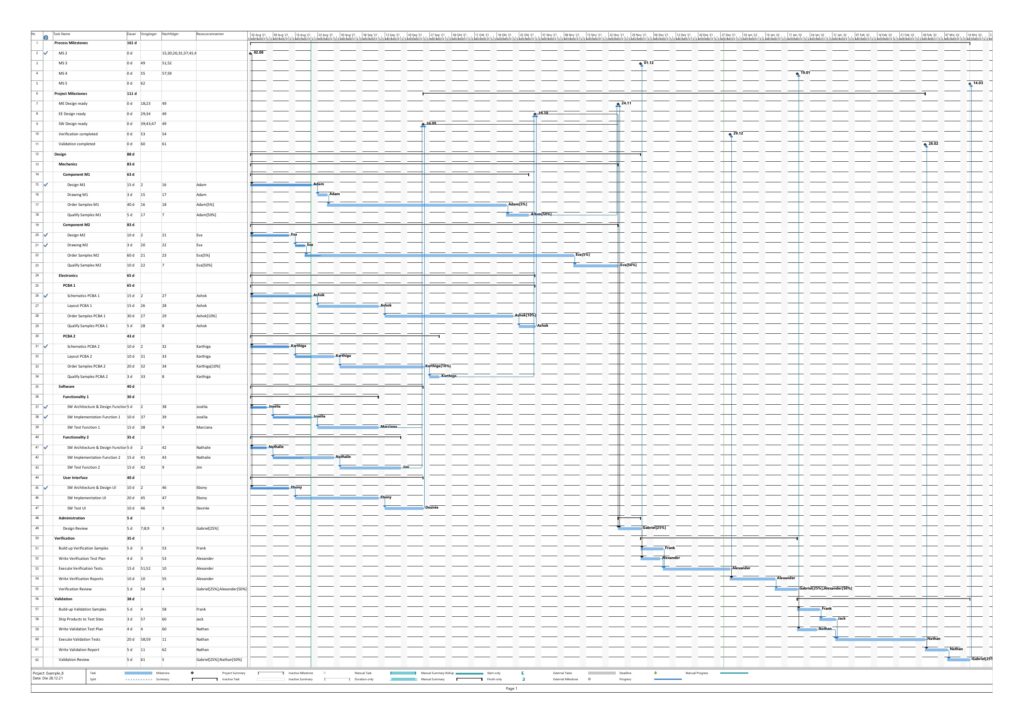

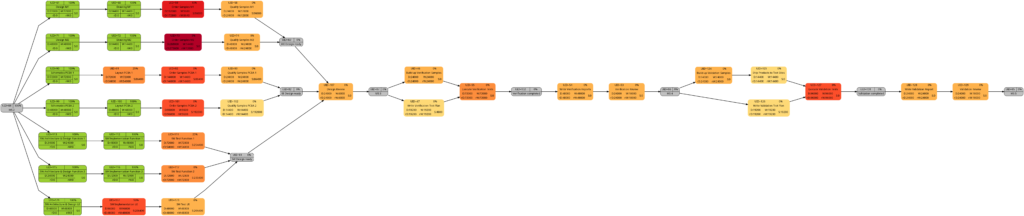

Example 7: A real-world example

Let us now look at a real-world example, a simplified project plan of a development project consisting of mechanical parts, PCBAs and software, including some intermediate milestones. As it is the case in reality, some tasks have only a partial allocation of resources, that means, that the numerical values for the allocated work are less than for the allocated duration. This will be reflected in different shadings of colors in the network graphs task duration and task duration.

The resulting graphs are:

As mentioned above already, we can see the differences in the shading of the graphs according to task duration and task work, the reason being an allocation of resources different to 100%. However, in contrast to an initial expectation, the different shadings occur in large parts of the graph rather than only on a few tasks. This is because the shadings depend on the maximum and the minimum values of task duration or task work, and these values are unique to each type of graph. Well visible is also the critical path in dark red color in the network graph colored according to task criticality.

Example 8: A real-world example with complete tasks

Once a project has been started, sooner or later, the first tasks will be completed, and in this example, we assume that we have run three weeks into the project (green vertical line), and all tasks have been executed according to schedule (this is an example from an ideal world 😀). Consequently, our plan looks like this:

Tasks that have been completed 100% are colored in green color across all network graphs indicating that these tasks do not require any more attention in the remaining project:

The color shadings of the incomplete tasks remain as they were in Example 7. This is because the script mysql2dot.sh determines the step size of the shadings (and thus the resulting shadings) of the individual tasks based on the maximum and minimum value of task duration, task work, task slack, or task criticality for the whole project, independent whether some tasks have been completed or not.

Conclusion

The scripts create_project_db.sql, msp2mysql.sh and mysql2dot.sh and the examples provided above show how, using the powerful graphviz package, traditional project plans created with Microsoft® Project can be visualized in a set pf graphs that can help a project manager to focus on the right tasks. For the ease of understanding, the scripts run in bash so that everyone can easily modify, enlarge, or change the scripts according to the own demands. Users who want to deploy the scripts on productive systems should take speed of execution and cybersecurity (or the lack of both in the provided example scripts) into account.

Outlook

The provided scripts can be enlarged in their scope or improved in numerous ways, and I encourage everyone to tailor them to your own needs. Some examples for improvement are:

- Improve the parsing of Microsoft® Project XML files by:

- …using a real XML parser rather than bash commands

- …enlarging the scope of the parser

- Create additional graphs.

- Consider tasks that have been completed partially.

- Experiment with different definitions of criticality.

- Explore resource usage and how to display it graphically (load, usage, key resource, etc.).

- Improve the function iso2msp () which so far is only a very simplistic implementation and is not yet able to process values like “days”, “weeks”, “months”.

Sources

- [1] = Introduction to Project XML Data

- [2] = ISO 8601 duration format

- [3] = Graphviz Color Names

- [4] = ColorBrewer License

Files

- create_project_db.sql sets up a database in MySQL or MariaDB. The script works with the user gabriel who assumes to have access to the database server without password; you might have to adapt the script to your environment and needs.

- msp2mysql.sh reads a Microsoft® Project plan in XML, parses it and writes the data into the MariaDB database.

- mysql2dot.sh reads from the MariaDB database and creates a script for dot, a tool of the graphviz suite.

- PERT-Farbskalen.xlsx is a work sheet where different approaches for color distributions are tested and where you can play around to find the “right” algorithm for yourself.

- Example_1.zip contains all files with respect to Example 1.

- Example_2.zip contains all files with respect to Example 2.

- Example_3.zip contains all files with respect to Example 3.

- Example_4.zip contains all files with respect to Example 4.

- Example_5.zip contains all files with respect to Example 5.

- Example_6.zip contains all files with respect to Example 6.

- Example_7.zip contains all files with respect to Example 7.

- Example_8.zip contains all files with respect to Example 8.

Disclaimer

- The program code and the examples are for demonstration purposes only.

- The program shall not be used in production environments.

- While the program code has been tested, it might still contain errors.

- The program code has not been optimized for speed (It’s a bash script anyway, so do not expect miracles.).

- The program code has not been written with cybersecurity aspects in mind.

- Only a subset of all possibilities in [1] has been used, and the code does not claim to adhere completely to [1].

BMW 330e (PHEV) Learnings

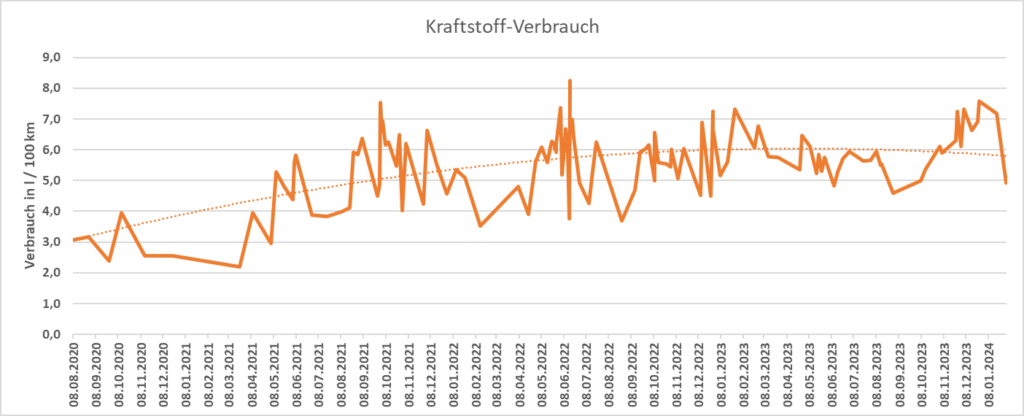

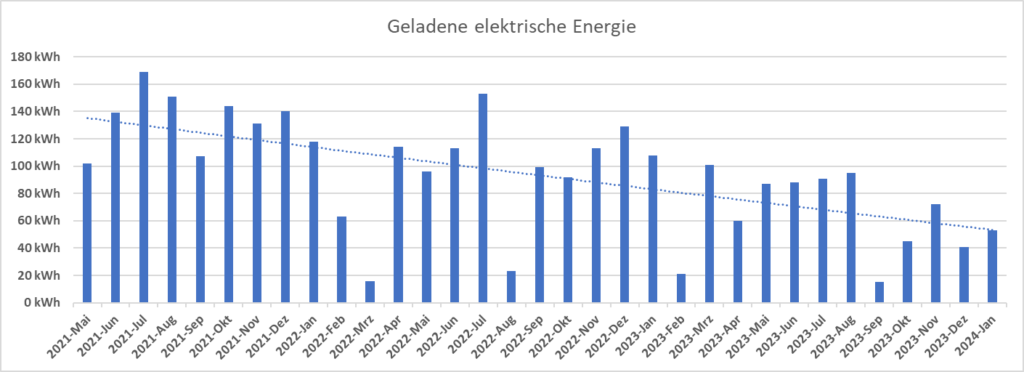

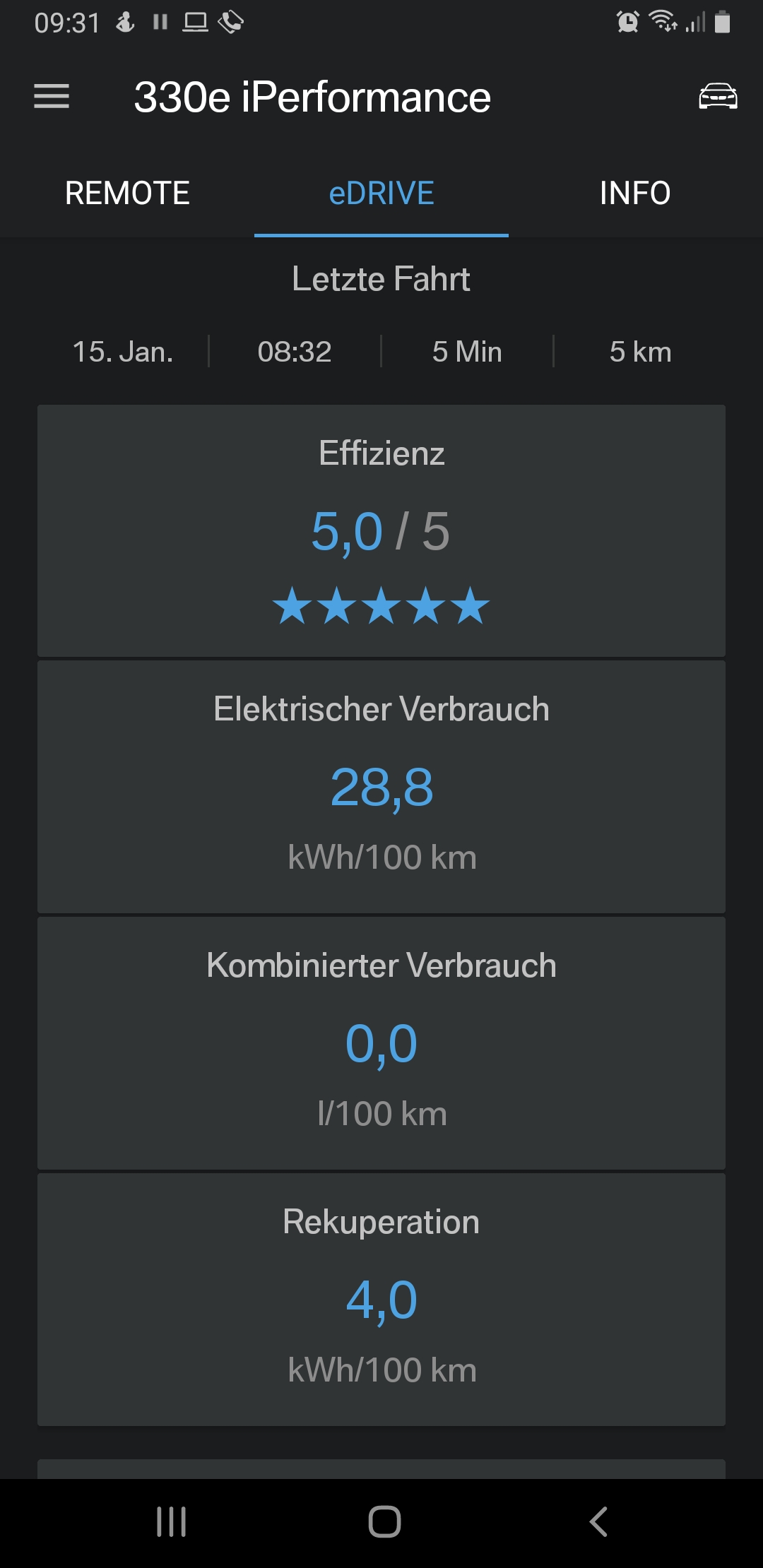

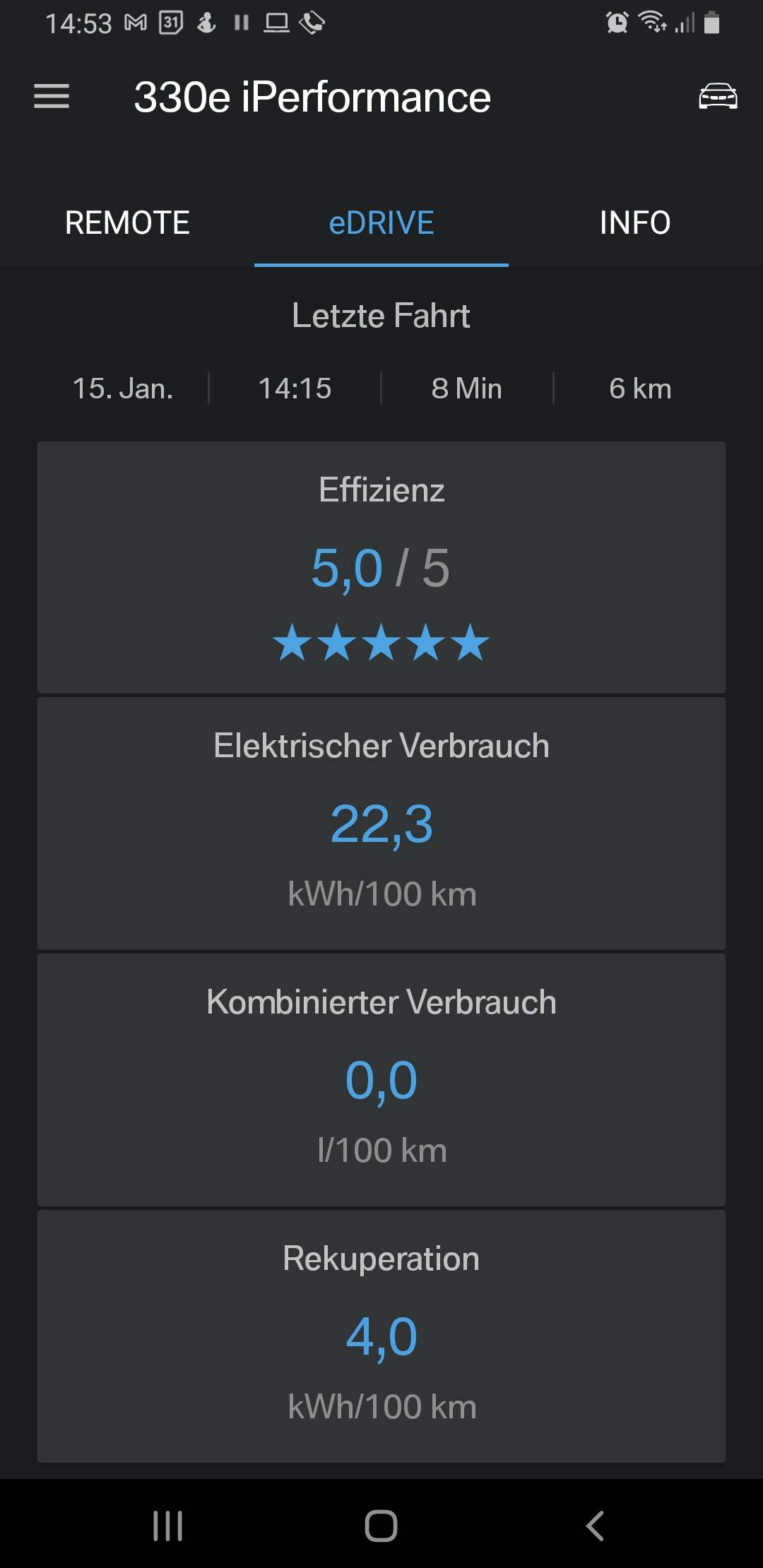

Since 23-Jun-2020, I have used my hybrid electric car, a BMW 330e iPerformance (F30 platform), built in 2016. I bought it from the BMW branch office in Kirkel, and I have gained quite some experience with this model since then. While some learnings may be unique to this type of car, I believe that a great deal of my experience is also applicable to other plug-in hybrid vehicles (PHEV). My experience is not applicable to electric-only cars (battery electric vehicles aka BEV).

These are the points that I would like to hightlight to someone interested in buying or using a PHEV:

General Points

Pure electric Mode (MAX eDRIVE)

- I use the purely electric mode (MAX eDRIVE) when I know that I can achieve my whole trip with the electric charge of the battery (e.g., shopping in the nearby city). When I already know that this will not be possible or when I do not yet know the extension of my whole trip, I leave the car in the default mode AUTO eDRIVE.

- I can reach a realistic maximum range of 24 km under very good conditions (no need to brake too often, summer, flat terrain). When temperatures fall, this range decreases to 15 km, and below +3°C, MAX eDRIVE does not really make sense, as the combustion engine will kick in as soon as I would accelerate only modestly.

- I get a real consumption between 0,20 kWh/km (summer time, little start-and-stop) and 0,32 kWh/km (winter time, a lot of start-and-stop). Pre-heating the car in winter would bring the consumption closer to the summer time values, but pre-heating also has a cost to it. Below are some examples of electricity consumption at various conditions.

- Driving with the electric machine in the BMW 330e is different than driving a BEV. In a BEV, you usually do not have a gearbox, and there is a fixed transmission ratio between the rotation of the electric machine and the axis. In the BMW 330e however, the electric machine is located before the gearbox, and in fact it cycles through a range of gears. In fact, even before you start to roll, the electric machine rotates with a certain idle rotation and is phased in via the clutch of the automated gearbox (ZF GA8P75HZ [1], [2]). Using the smartphone app from [3] and the ODB2 adapter from [4], I undertook some measurements in the modes Sport, Comfort, and Eco, and I found the following results:

- The switching from one gear to the next one does not seem to be related to the mode. While the “feeling” of the Sport mode is definitively more agile than the one of the Eco mode, this does not translate in earlier to later switches to the next gear when using the purely electric mode.

- During acceleration, the switch from one gear to the next higher gear seems to happen around 3000 rpm of the electric machine.

- At no load (releasing the accelerator pedal), switching down to lower gears seems to happen around these rotations:

- From 6→5: 1800 rpm

- From 5→4: 1600 rpm

- From 4→3: 1400 rpm

- From 3→2: 1300 rpm

- From 2→1: 800 rpm

Examples of electricity and fuel consumptions

Public Charging

- While things have become better, public charging can still be some kind of adventure where you can have unpleasant surprises in some cases. However, I can state that things have become much better than in the early days.

- Tariffs can vary greatly, from reasonable to outrageously expensive. One must check the tariffs at the charging station just before each recharge again, as they can change from one day to the other. Even then, this does not mean that you will also be charged the cost that is advised in the app.

- With the consumption values given above, I have set my personal limit at 0.45 €/kWh. If the tariff is higher than that, I am equally or better off if I use the combustion engine.

- Most operators will charge you additionally if your car stays longer than 3h…4h at one charging point. So be aware to stay within this duration. A few operators however have the nasty habit to charge you 0.10 €/min from the first minute on, in addition to the electricity cost. On the other side, they are a few operators that do not charge you such a penalty during the night; so you can safely start charging the car over night.

- In a large German city (Cologne), I have observed the following issue: As parking is free of charge while you are charging the car and as the parking fee on other locations is expensive, there seem to be people that connect their car on Friday evening and leave it connected at the charging point until Sunday evening. While they have to pay a penalty for exceeding the time limit (many operaters start to charge penalties after 4h), the penalty is often capped at around 12 € and therefore still much cheapter than paying for the parking fee that would otherwise have to be paid. Therefore, too, it might be difficult to find a free charging station in the center of the city.

Links

Financial Risk and Opportunity Calculation

Executive Summary

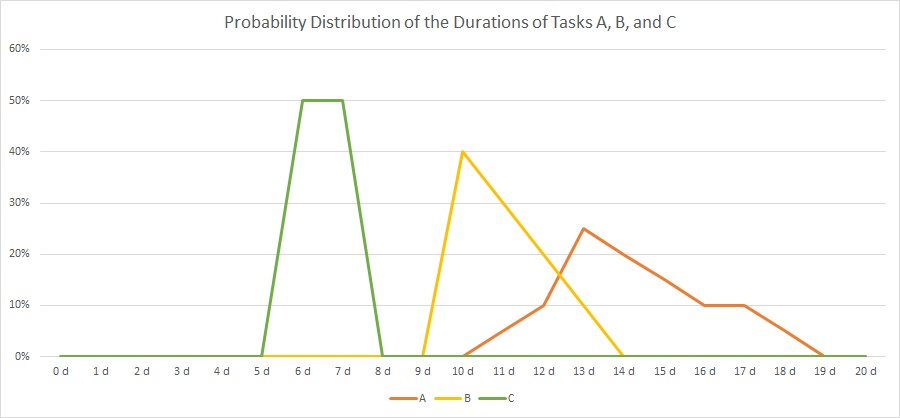

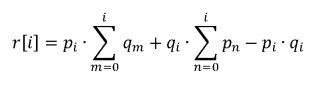

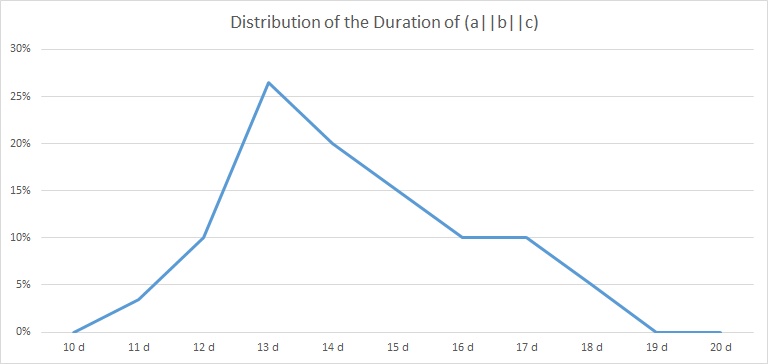

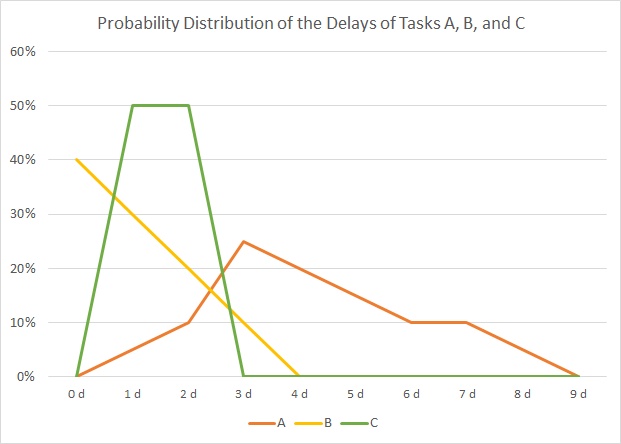

This blog post offers a method to evaluate n-ary project risks and opportunities from a probabilistic viewpoint. The result of the algorithm that will be discussed, allows to answer questions like:

- What is the probability that the overall damage resulting from incurred risks and opportunities exceeds the value x?

- What is the probability that the overall damage resulting from incurred risks and opportunities exceeds the expected value (computed as ∑(pi * vi) for all risks and opportunities)?

- How much management reserve should I assume if the management reserve shall be sufficient with a probability of y%?

The blog post shall also encourage all affected staff to consider more realistic n-ary risks and opportunities rather than the typically used binary risks and opportunities in the corporate world.

This blog post builds on the previous blog post Financial Risk Calculation and improves the algorithm by:

- removing some constraints and errors of the C code

- enabling the code to process risks and opportunities together

Introduction

In the last decades, it has become increasingly popular to not only consider project risks, but also opportunities that may come along with projects. [1] and [2] show aspects of the underlying theory, for example.

In order to accommodate that trend (and also in order to use the algorithm I developed earlier in my professional work), it became necessary to overhaul the algorithm so that it can be used with larger input vectors of risks as well as with opportunities. The current version of the algorithm (faltung4.c) does that.

Examples

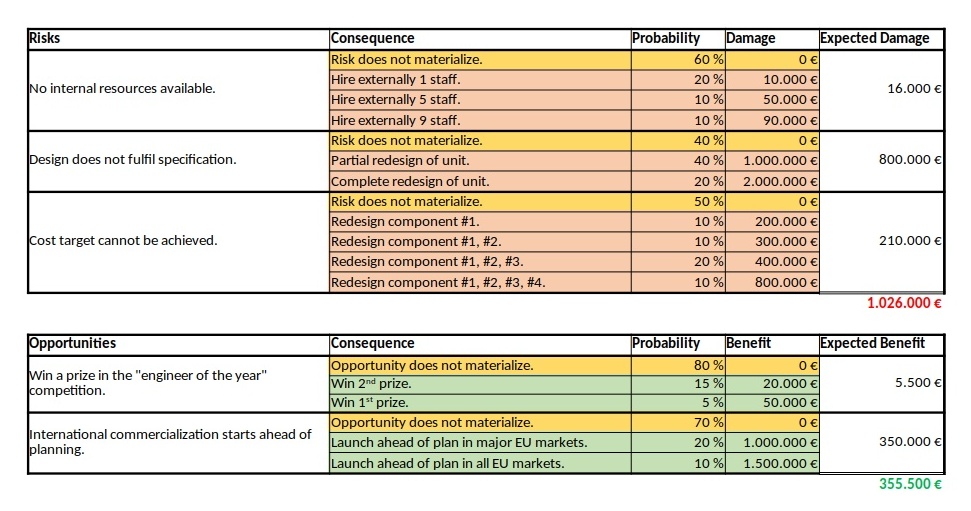

Example 1: Typical Risks and Opportunities

In the first example, we will consider some typical risks and opportunities that might occur in a project. The source data for this example is available for download in the file Risks_Opportunities.xlsx.

We can see a ternary, a quaternary, and a quinary risk and two ternary opportunities. This is already different from the typical corporate environment in which risks and opportunities are still mostly perceived as binary options (“will not happen” / “will happen”), probably because of a lack of methods to process any other form. At that point I would like to encourage everyone in a corporate environment to take a moment and think whether binary risks only are really still contemporary in the complex business world of today. I believe that they are not.

As already described in the previous blog post Financial Risk Calculation, these values have to copied to a structured format in order to be processed with the convolution algorithm. For the risks and the opportunities shown in the table above, that would turn out to be:

# Distribution of the Monetary Risks and Opportunities

# Risks have positive values indicating the damage values.

# Opportunities have negative values indicating the benefit values.

# START

0 0.60

10000 0.20

50000 0.10

90000 0.10

# STOP

# START

0 0.40

1000000 0.40

2000000 0.20

# STOP

# START

0 0.50

200000 0.10

300000 0.10

400000 0.20

800000 0.10

# STOP

# START

0 0.80

-20000 0.15

-50000 0.05

# STOP

# START

0 0.70

-1000000 0.20

-1500000 0.10

# STOPNote that risks do have positive (damage) values whereas opportunities have negative (benefit) values. That is inherently programmed into the algorithm, but of course, could be changed in the C code (for those who like it the opposite way).

After processing the input with:

cat risks_opportunities_input.dat | ./faltung4.exe > risks_opportunities_output.datthe result is stored in the file risks_opportunities_output.dat which is lengthy and therefore not shown in full here on this page. However, let’s discuss about some important result values at the edge of the spectrum:

# Convolution Batch Processing Utility (only for demonstration purposes)

# The resulting probability density vector is of size: 292

# START

-1550000 0.0006000000506639

...

2890000 0.0011200000476837

# STOP

# The probability values are:

-1550000 0.0006000000506639

-1540000 0.0008000000625849

...

0 0.2721000205919151

...

2870000 0.9988800632822523

2890000 1.0000000633299360The most negative outcome corresponds to the possibility that no risk occurs, but that all opportunities realize with their best value. In our case this value is 1,550,000 €, and from the corresponding line in the density vector we see that the probability of this occurring is only 0.06%.

The most positive outcome corresponds to the possibility that no opportunity occurs and that all risks realize with their worst value. On our case, this is 2,890,000 €, and the probability of this to occur is 0.11%. In between those extreme values, there are 290 more possible outcomes (292 in total) for a benefit or damage.

Note that the probability density vector is enclosed in the tags #START and #STOP so that it can directly be used as input for another run of the algorithm.

The second part of the output contains the probability vector which (of course) also has 292 in this example. The probability vector is the integration of the probability density vector and sums up all probabilities. The first possible outcome (most negative value) therefore starts with the same probability value, but the last possible outcome (most positive value) has the probability 1. In our case, the probability is slightly higher as 1, and this is due to the fact that float or double variables in C do not have infinite resolution. In fact, already when converting a number with decimals to a float or double value, you most probably encounter imprecisions (unless the value is a multiple or fraction of 2).

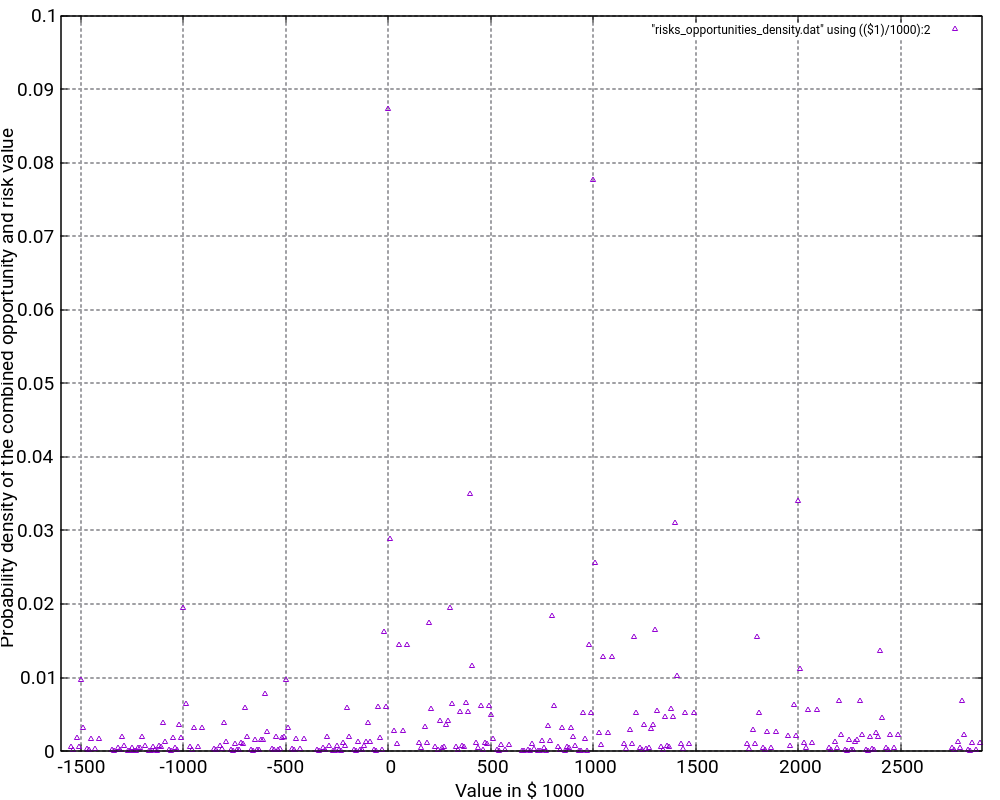

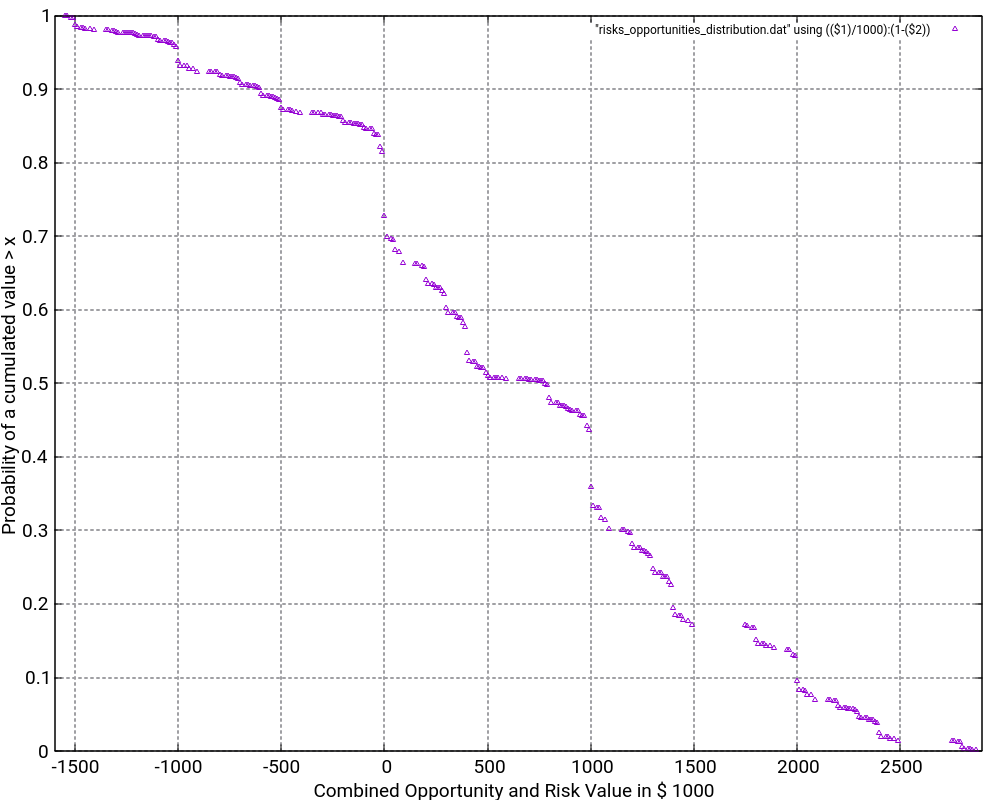

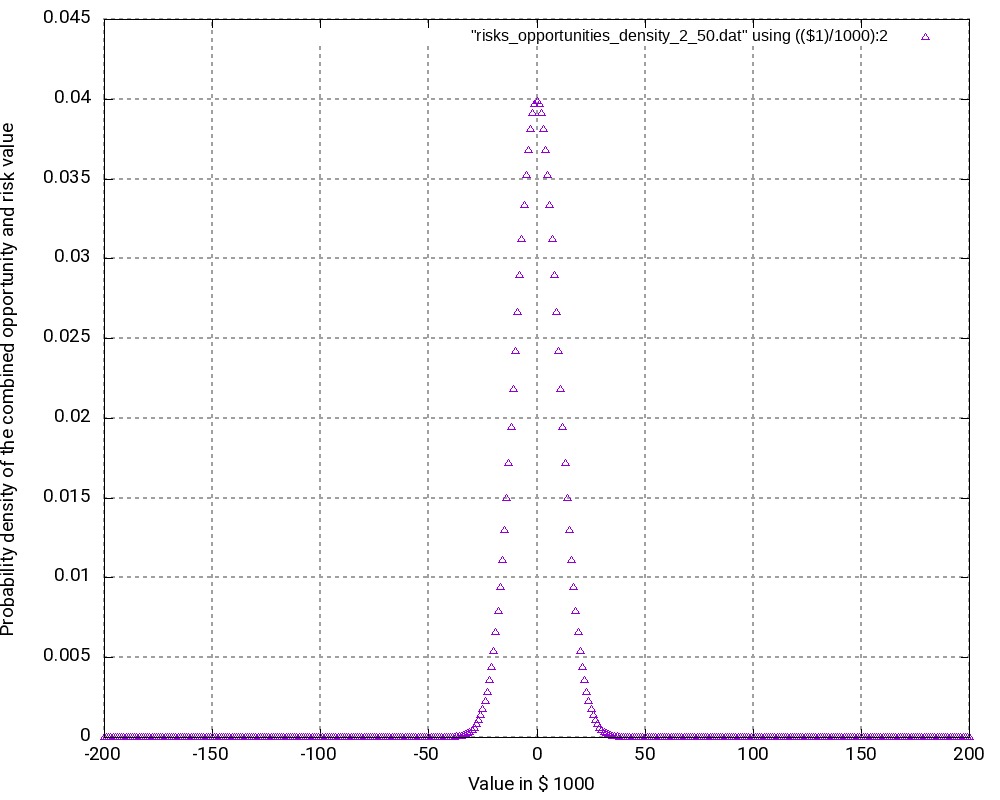

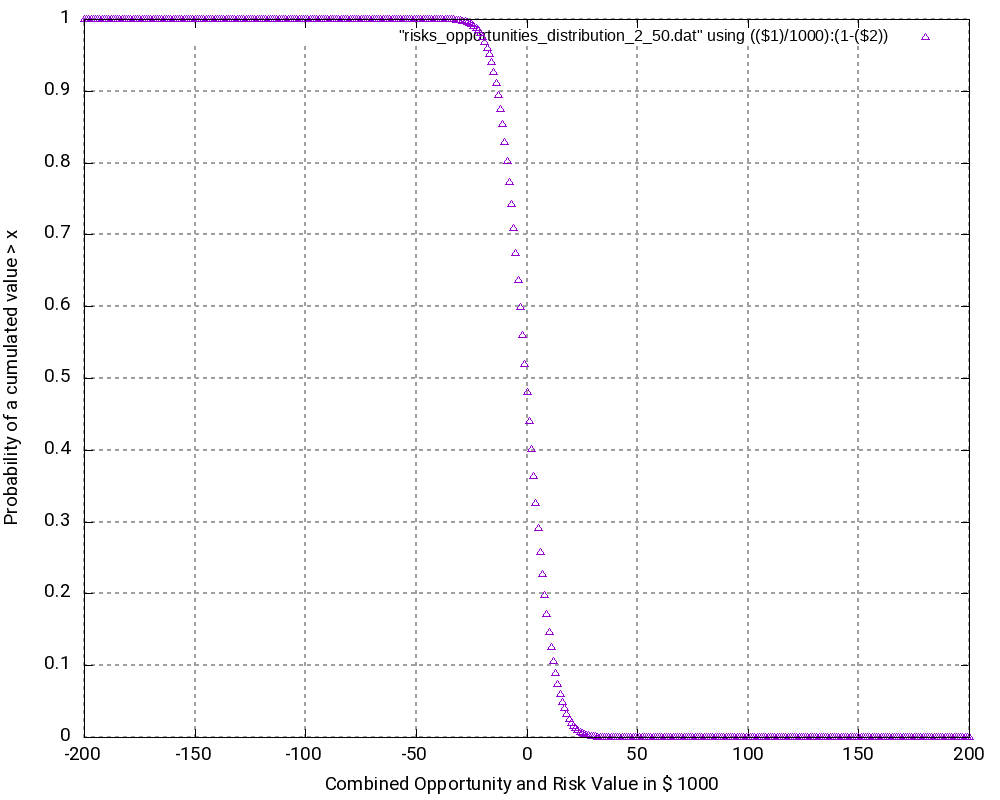

The file Risks_Opportunities.xlsx contains the input vector and the output of the convolution as well as some graphic visualizations in Excel. However, for large output values, I do not recommend to process or visualize them in Excel, but instead, I recommend to use gnuplot for the visualization. The images below show the probability density distribution as well as (1 – probability distribution) as graphs generated with gnuplot.

What is the purpose of the (1 – Probability distribution) graph? The purpose is to answer the question: “What is the probability that the damage value is higher as x?”

Let’s look what the probability of a damage value of more than 1,000,000 € is? In order to do so, we locate “1000” on the x-axis and go vertically up until we reach the graph. Then, we branch to the left and read the corresponding value on the y-axis. In our case, that would be something like 36%. This means that there is a probability of 36% that thus project experiences a damage of more than 1,000,000 €. This is quite a notable risk, something which one would probably not guess by just looking at the expected damage value of 1,026,000 € listed in the Excel table.

And even damage values of more than 2,000,000 € can still occur with some 10% of probability.

The graph would always start relatively on the top left and finish on the bottom right. Note that the graph does not start at 1.0 because there is a small chance that the most negative value (no risks, but the maximum of the opportunities realized) happens. However, the graph reaches the x-axis at the most positive value. Therefore, it is important asking the question “What is the probability that the damage value is higher than x?” rather than asking the question “What is the probability that the damage value is than x or higher?” The second question is incorrect although the difference might be minimal when many risks and opportunities are used as input.

Example 2: Many binary risks and opportunities of the same value

This example is not a real one, but it shall help us to understand the theory behind the algorithm. In this example, we have 200 binary risks and 200 binary opportunities with a damage or benefit value of 1,000 € and a probability of occurrence of 50%.

# Risks have positive values indicating the damage values.

# Opportunities have negative values indicating the benefit values.

# START

0 0.50

1000 0.50

# STOP

# START

0 0.50

-1000 0.50

# STOP

...From this, we can already conclude that the outcomes are in the range [-200,000 €; +200,000 €] corresponding to the rare events “no risk materializes, but all opportunities materialize” respectively “no opportunity materializes, but all risks materialize”. As the example is simple in nature, we can also conclude immediately that the possibility of such an extreme outcome is

p = 0.5(200+200) = 3.87259*10-121

and that is really a very low probability. We might also guess that the outcome value zero would have a relatively high probability as we can expect this value to be reached by many different combinations of risks and opportunities.

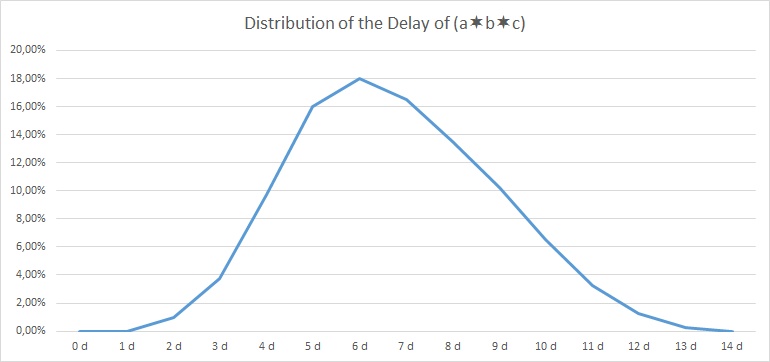

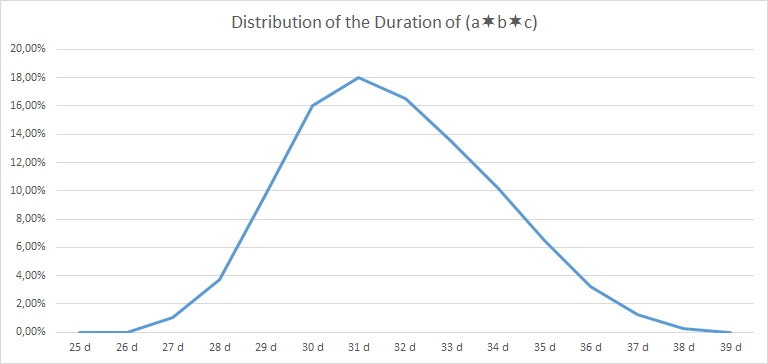

When we look at the resulting probability density curve and the resulting probability distribution, we can see a bell-shaped curve in the probability density and the resulting S curve as the integration of the bell-shaped curve. While the curve resembles a Gaussian distribution, this is not the case in reality as our possible outcomes are bound by a lower negative value and an upper positive value whereas a strict Gaussian distribution has a probability (and be it minimal only) for any value on the x-axis.

Let us have a closer look at some values in the output tables:

# Convolution Batch Processing Utility (only for demonstration purposes)

# The resulting probability density vector is of size: 401

# START

-200000 0.0000000000000000

-199000 0.0000000000000000

...

-2000 0.0390817747440756

-1000 0.0396709472276547

0 0.0398693019637929

1000 0.0396709472276547

2000 0.0390817747440756

...

199000 0.0000000000000000

200000 0.0000000000000000

# STOP

# The probability values are:

-200000 0.0000000000000000

-199000 0.0000000000000000

...

-2000 0.4403944017904490

-1000 0.4800653490181037

0 0.5199346509818966

1000 0.5596055982095512

2000 0.5986873729536268

...

199000 0.9999999999999996

200000 0.9999999999999996The following observations can be made:

- The most negative outcome (-200,000 €) and the most positive outcome (+200,000 €) can only be reached in one constellation and have, as calculated above, minimal probability of occurrence. Therefore, the probability density vector shows all zero in the probability.

- The outcome 0 has the highest probability of all possible outcomes (3.99%), and outcomes close to 0 have a similarly high probability. Hence, it is very probable that the overall outcome of a project with this (artificially constructed) risk and opportunity pattern is around 0.

- It may astonish at first that in the probability distribution (not the probability density), the value 0 is attributed to a probability of 51.99% rather than to 50.00%. However, we must keep in mind that the values in the probability distribution answer the question: “What is the probability that the outcome is > x?” and not “… ≥ x?” That makes the difference.

- At the upper end of the probability distribution (+200,000 €), we would expect the probability to be 1.0 rather than a value below that. However, here, the limited resolution of even double variables in C result in the fact that errors in the precision of arithmetic results add up. This might be improved by using long double as probability variable.

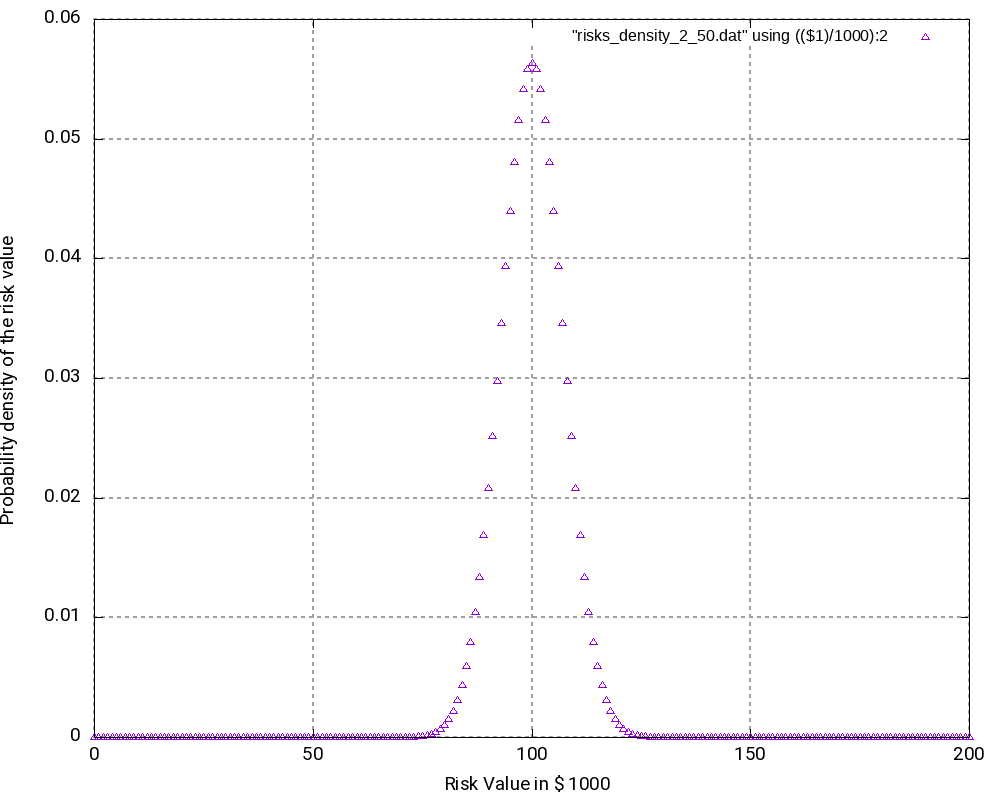

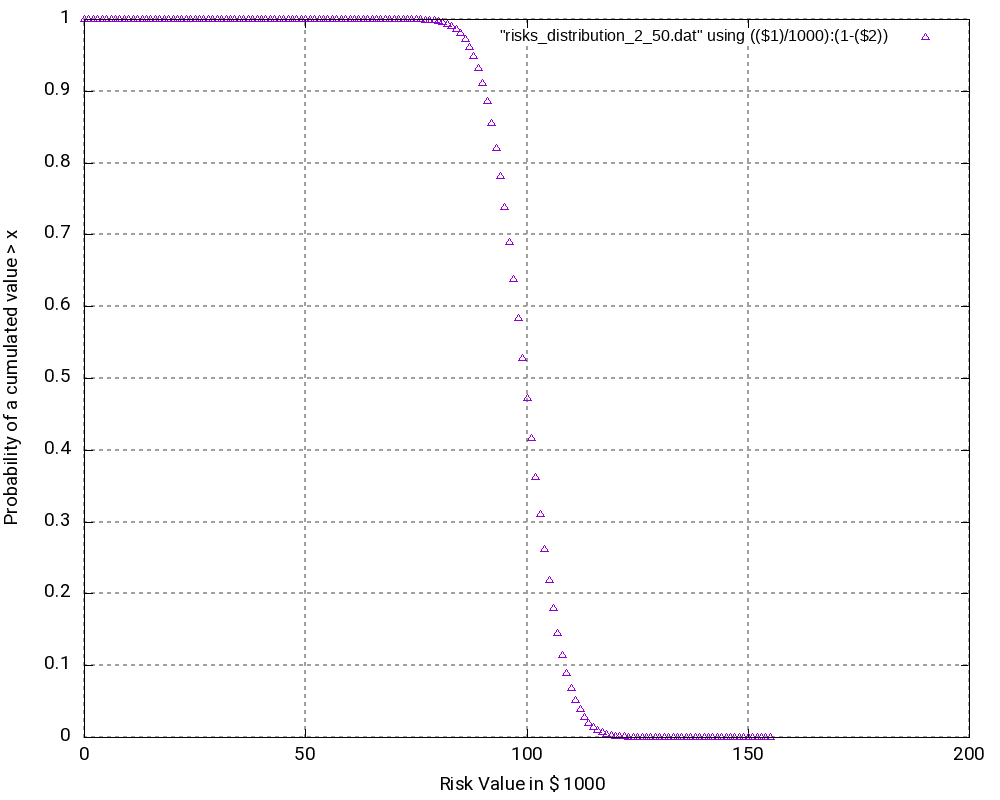

Example 3

The third example is similar to the second one, but it only contains risks, that is, we leave away all opportunities from the second example. Consequently, outcomes (damages) lie in the interval [0; +200,000 €]. The outcomes at the edges of the interval have the lowest probability of occurrence, in this case:

p = 0.5200 = 6.22302*10-61

While this probability of occurrence is much higher than the one for the edge values in Example 2, it is still very low.

As we can see (and this could be expected somewhat), the most probably outcome now is a damage of +100,000 €, again the value in the middle of the interval which now has a probability of occurrence of 5.63% (higher than in the second example). While the curves in the second and in the third example look very similar, the curves are “steeper” in the third example.

# Convolution Batch Processing Utility (only for demonstration purposes)

# The resulting probability density vector is of size: 201

# START

0 0.0000000000000000

1000 0.0000000000000000

...

99000 0.0557905732764915

100000 0.0563484790092564

101000 0.0557905732764915

...

199000 0.0000000000000000

200000 0.0000000000000000

# STOP

# The probability values are:

0 0.0000000000000000

1000 0.0000000000000000

...

99000 0.4718257604953718

100000 0.5281742395046283

101000 0.5839648127811198

...

199000 1.0000000000000002

200000 1.0000000000000002Conclusion

This blog post shows that with the help of the algorithm faltung4.c, it has become possible to computer probability densities and probability distributions of combined risks and opportunities and answer valid and important questions with respect to the project risk and the monetary reserves (aka “management reserve”) that should be attributed to a project with a certain portfolio of risks and opportunities.

Outlook

The algorithm may be enlarged so that it can accommodate start and finish dates of risks and opportunities. In this case, probability densities and probability distributions can be computed for any point in time that changes the portfolio of risks and opportunities due to the start or finish of a risk or opportunity. In this case, the graphs will become 3-dimensional and look somewhat like a “mountain area”. This would have relevance in the sense that some projects might start heavily on the risky side and opportunities might only become available later. For such projects, there is a real chance that materialized risks result in a large cash outflow which later is then partially compensated by materialized opportunities.

Sources

- [1] = Balancing project risks and opportunities

- [2] = Project opportunity – risk sink or risk source?

Files

- Risks_Opportunities.xlsx contains the example table as well as the result tables of the analysis, graphs and some scripting commands.

- faltung4.c contains the C program code that was used for the analysis.

- Example 1:

- risks_opportunities_input.dat is the input table.

- risks_opportunities_output.dat is the output table which contains the probability density and the probability distribution.

- risks_opportunities_density.dat is the output table containing the probability density only.

- risks_opportunities_density.png is a graphic visualization of the probability density with gnuplot.

- risks_opportunities_distribution.dat is the output table containing the probability distribution only.

- risks_opportunities_distribution.png is a graphic visualization of (1 – probability distribution) with gnuplot.

- Example 2:

- risks_opportunities_input_2_50.dat is the input table.

- risks_opportunities_output_2_50.dat is the output table which contains the probability density and the probability distribution.

- risks_opportunities_density_2_50.dat is the output table containing the probability density only.

- risks_opportunities_density_2_50.png is a graphic visualization of the probability density with gnuplot.

- risks_opportunities_distribution_2_50.dat is the output table containing the probability distribution only.

- risks_opportunities_distribution_2_50.png is a graphic visualization of (1 – probability distribution) with gnuplot.

- Example 3:

- risks_input_2_50.dat is the input table.

- risks_output_2_50.dat is the output table which contains the probability density and the probability distribution.

- risks_density_2_50.dat is the output table containing the probability density only.

- risks_density_2_50.png is a graphic visualization of the probability density with gnuplot.

- risks_distribution_2_50.dat is the output table containing the probability distribution only.

- risks_distribution_2_50.png is a graphic visualization of (1 – probability distribution) with gnuplot.

Usage

Scripts and sequences indicating how to use the algorithm faltung4.c can be found in the table Risks_Opportunities.xlsx on the tab Commands and Scripts. Please follow these important points:

- The input file which represents n-ary risks and n-ary opportunities as well as their probabilities of occurrence, has to follow a certain structure which is hard-coded in the algorithm faltung4.c. A risk or opportunity is always enclosed in the tags # START and # STOP.

- Lines starting with “#” are ignored as are empty lines.

- The probabilities of occurrence of an n-ary risk or an n-ary opportunity must sum up to 1.0.

- The C code can be compiled with a standard C compiler, e.g., gcc, with the sequence:

gcc -o faltung4.exe faltung4.c- The input file is computed and transformed into an output file with the sequence:

cat input_file.dat | ./faltung4.exe > output_file.dat- The output file consists of two parts:

- a resulting probability density which has the same structure as the input file (with the tags # START and # STOP)

- a resulting probability distribution

- In order to post-process the output, e.g., with gnuplot, you can help yourself with standard Unix tools like head, tail, wc, cat, etc.

- The output file is structured in a way so that it can be easily used for visualizations with gnuplot.

Disclaimer

- The program code and the examples are for demonstration purposes only.

- The program shall not be used in production environments.

- While the program code has been tested, it might still contain errors.

- Due to rounding errors with float variables, you might experience errors depending on your input probability values when reading the input vector.

- The program code has not been optimized for speed.

- The program code has not been written with cybersecurity aspects in mind.

- This method of risk and opportunity assessment does not consider that risks and opportunities in projects might have different life times during the project, but assumes a view “over the whole project”. In the extreme case, for example, that risks are centered at the beginning and opportunities are centered versus the end of a project, it might well be possible that the expected damage/benefit of the overall project is significantly exceeded or significantly subceeded.

DICOM Experiments with dcmtk and TLS

Executive Summary

In this small article, I would like to point out how we can use the command line tools from the dcmtk toolkit in order to play around with DICOM images, setting up a DICOM recipient and how we integrate TLS (encryption) in the whole setup.

The dcmtk toolkit is a powerful set of command line tools which enable us to play around with DICOM, program test environments, transform images between DICOM and other common formats (like JPEG or PNG) and simulate a simple PACS or a worklist server. It does not feature all tools that you would wish to have (e.g., dealing with MP4 videos), but it’s powerful enough to start with.

As always, I recommend that you execute all scripts on a properly installed Linux machine. (Windows will also do it, but it’s… uh!) Fortunately, the dcmtk toolkit is available for most Linuxes.

Sending DICOM images

Let’s start with a simple setup. We need a folder structure like this:

gabriel@caipirinha:~> tree /reserve/playground/ /reserve/playground/ ├── images │ ├── IM000001 │ ├── IM000002 │ ├── IM000003 │ ├── IM000004 │ ├── IM000005 │ ├── IM000006 │ ├── IM000007 │ ├── IM000008 │ └── IM000009 └── pacs

The folder images will contain some sample DICOM images. Either you have some from your own medical examinations, or you download some demo pictures from the web. The folder pacs will serve as recipient of our transmitted DICOM images.

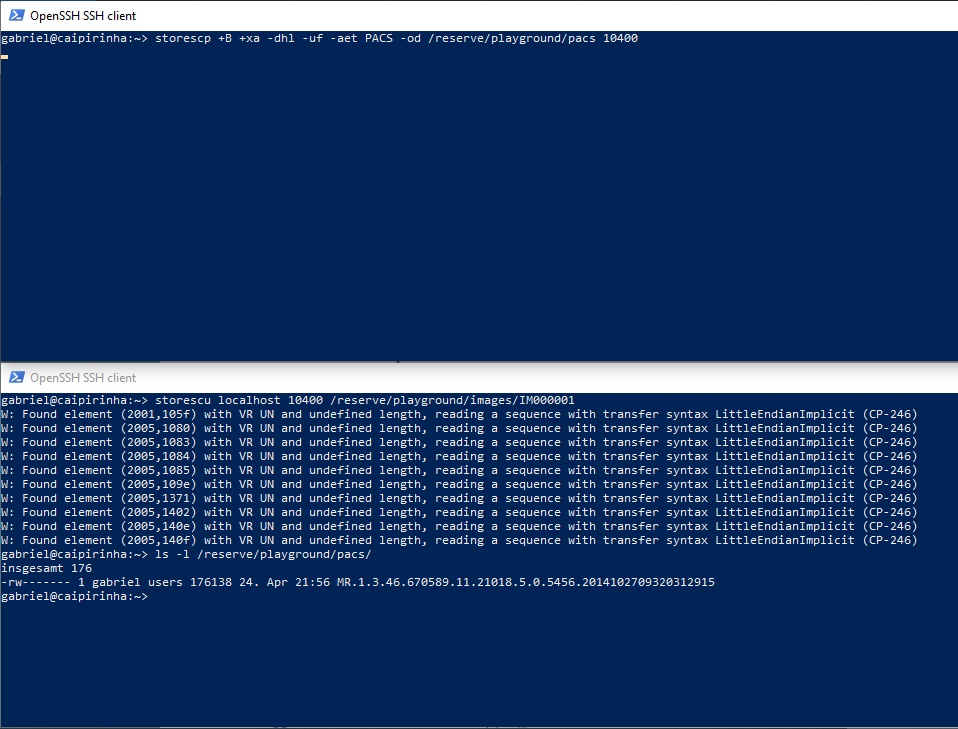

We furthermore need two (bash) shells open, and in the first shell, we start a storescp instance:

storescp +B +xa -dhl -uf -aet PACS -od /reserve/playground/pacs 10400

This command enables a recipient of DICOM images; it will be our “mini” PACS, and it will just store all the data it receives in the folder /reserve/playground/pacs.

The storescp command has a range of options which you might explore using “man storescp” on your Linux machine. Here, we use the options:

- +B: write data exactly as read. Actually, I use this option as it seems to write directly on disk. On a weaker Raspberry Pi I did have issues with large files until I started to use this option. So: recommended.

- +xa: accept all supported transfer syntaxes. That’s what you expect from a test system.

- -dhl: disable hostname lookup. In our test environment, we do not want to complicate things by using DNS and reverse DNS lookups.

- -uf: generate filename from instance UID (default). This means that you will get files names from the instance UID. They are not exactly “telling” filenames, but they refer to some instance UID that comes from your other test systems or from a worklist or from whereever.

- -aet: set my AE title. This is the AE title, an important identifier in the DICOM world. In reality, this would probably be a name referring to the respective hospital owning the PACS or to the manufacturer of the PACS.

You can also see that the instance is listening on TCP port 10400. This is not the standardized port for DICOM operation; in fact, in reality you would use port 104. But as we want to test around not using the root account, we will not use privileged ports.

In the second shell, we transmit a DICOM image by invoking a storescu command:

storescu localhost 10400 /reserve/playground/images/IM000001

This will transfer the DICOM image IM000001 to the storescp and ultimately store it in the folder /reserve/playground/pacs. The whole operation looks like in the image below. Do not get bothered by the messages of the storescu command; it just evidences that even commercially taken DICOM images do not always fulfil the specs.

Sending DICOM images with TLS

Now, let’s switch one gear higher and use TLS encryption for the transfer of our DICOM image. We need some preparation though, and for that, we need to download a script named dcmtk_ca.pl. You can get that at Github, for example (here). Save it and make it executable. Of course, you need a Perl interpreter, but as you work on a well installed Linux machine, it is simply available.

We first need to create a (self-generated) root certificate and subsequently two key pairs. On this blog post, you can find the commands for that, and those are:

./dcmtk_ca.pl newca /reserve/playground/myca

mkdir /reserve/playground/mycert

./dcmtk_ca.pl mkcert -des no /reserve/playground/myca/ /reserve/playground/mycert/cert1 /reserve/playground/mycert/key1

./dcmtk_ca.pl mkcert -des no /reserve/playground/myca/ /reserve/playground/mycert/cert2 /reserve/playground/mycert/key2

During the course of the execution of these commands, you will be asked several questions which refer to attributes used in X.509 certificates, like “organizational unit”, … The values that you give do not matter here in our test environment. But for certificates used in production environment, those attributes should be populated with meaningful values, of course.

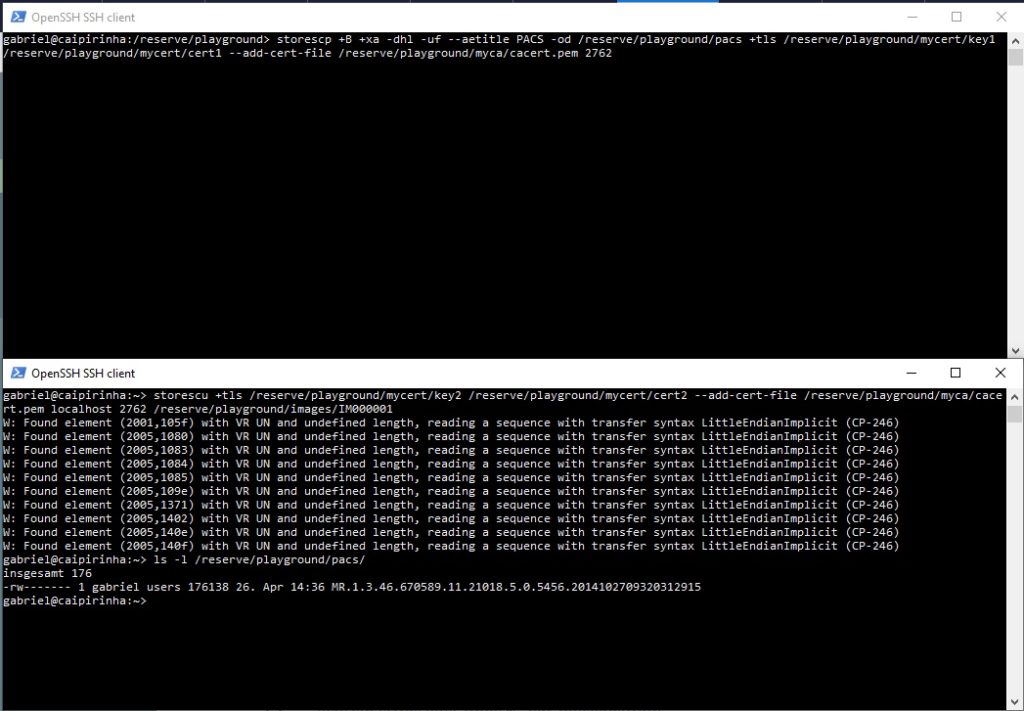

The command sequence above has created our root certificate in the file /reserve/playground/myca/cacert.pem, and two key pairs in the folder /reserve/playground/mycert/. We will now repeat the transmission of a DICOM image as shown in the previous chapter, however, using a TLS connection. Hence, in our first shell, we will issue the command:

storescp +B +xa -dhl -uf --aetitle PACS -od /reserve/playground/pacs +tls /reserve/playground/mycert/key1 /reserve/playground/mycert/cert1 --add-cert-file /reserve/playground/myca/cacert.pem 2762

which will be our “mini” PACS again, and in the second shell we issue the command:

storescu +tls /reserve/playground/mycert/key2 /reserve/playground/mycert/cert2 --add-cert-file /reserve/playground/myca/cacert.pem localhost 2762 /reserve/playground/images/IM000001

You can see that for this example, I have used TCP port 2762 which is also the recommendation from NEMA.

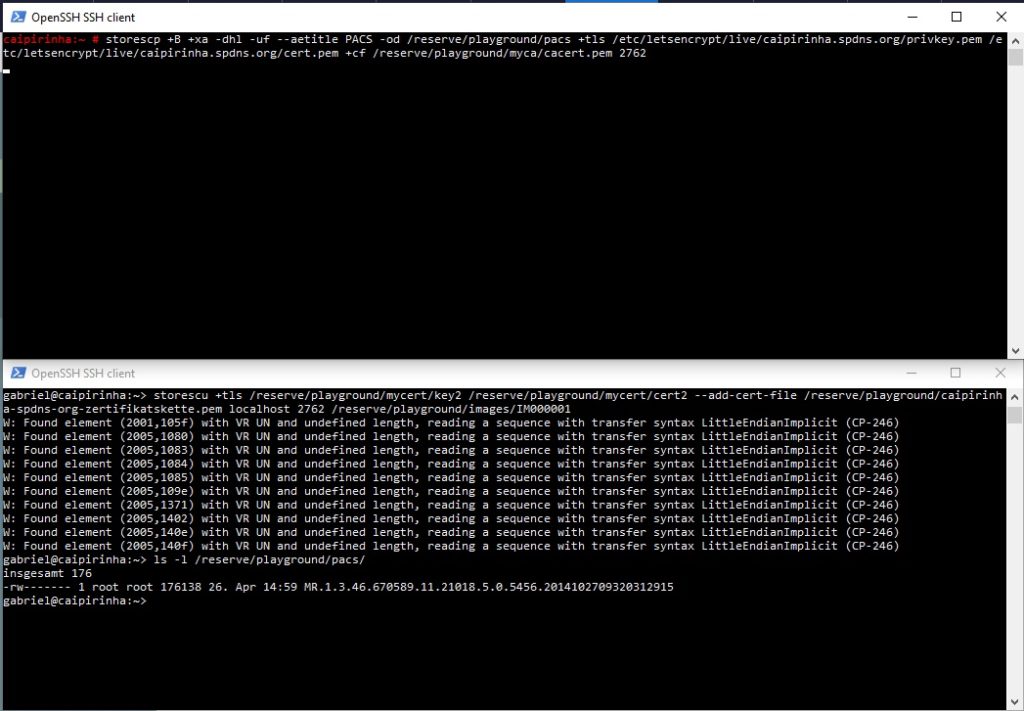

In this example, we have used a self-generated root certificate (cacert.pem) and derived two key pairs from this. But in real life, you might have an existing certificate that shall be used. My web server (caipirinha.spdns.org) for example, has a real certificate (thanks to the Let’s Encrypt initiative), and now, we want to use this certificate for the storescp sequence simulating our “mini” PACS. In order to do so, I will start the storescp instance as root user (because on my machine, only root can read the server key). On a production system, this is exactly what you shall not do; rather than that, you will have to find a way for you to make the server key available for the user which shall run the PACS, and this user should not be a normal user. But for our test here, this is OK. So, in that case, the storescp command would be:

storescp +B +xa -dhl -uf --aetitle PACS -od /reserve/playground/pacs +tls /etc/letsencrypt/live/caipirinha.spdns.org/privkey.pem /etc/letsencrypt/live/caipirinha.spdns.org/cert.pem +cf /reserve/playground/myca/cacert.pem 2762

The corresponding storescu command is:

storescu +tls /reserve/playground/mycert/key2 /reserve/playground/mycert/cert2 --add-cert-file /reserve/playground/caipirinha-spdns-org-zertifikatskette.pem localhost 2762 /reserve/playground/images/IM000001

You will realize here that in this example, the storescp instance has the root certificate of the storescu instance (which is the self-generated root certificate) whereas the storescu instance has the root ceritifcate chain of the storescp instance (which I extracted using the Firefox browser and accessing my own page https://caipirinha.spdns.org/). The reason for this crossover mentioning of the root certificates is simple:

- The storescp instance needs the root certificate (chain) of the storescu instance in order to verify whether the certificate that the storescu instance presents is valid.

- The storescu instance needs to the root certificate (chain) of the storescp instance in order to verify whether the certificate that the storescp instance presents is valid.

This might put a problem for hospital installations. Very rarely would you be able to add self-generated root certificates to your PACS, and therefore, the recommendation is that, when using TLS in your hospital environment, the probably best approach is that all certificates (key pairs) are generated from the very same certificate authority that was used to issue the key pair for the PACS. How certificate and key information is set up in various DICOM-capable devices depend on the device. In one device I came across, all related certificates and keys could be saved on the hard disk where I created a folder D:\Zertifikate and subsequently entered the related information in a JSON object for the DICOM configuration. That looked like this:

{

...

"DefaultSendOptions": {

"sendExecutable": "storescu-tls.exe",

...

},

"args": [

"--log-level",

"info",

...

"+tls",

"D:/Zertifikate/key",

"D:/Zertifikate/cert",

"+cf",

"D:/Zertifikate/caipirinha-ca-chain.pem"

],

...

}In this example, I still used a self-created certificate and key for the DICOM-enabled device that shall talk to the PACS, but the PACS itself uses a real certificate. You can see that the DICOM-enabled device also uses the storescu command in order to transmit DICOM data. This is not unusual, as most device manufacturers would use the dcmtk toolkit rather than programming DICOM routines from scratch.

I hope that this small article can help you to better understand the two related commands storescp and storescu with the focus on TLS encryption during the communication.

Links

Key Words

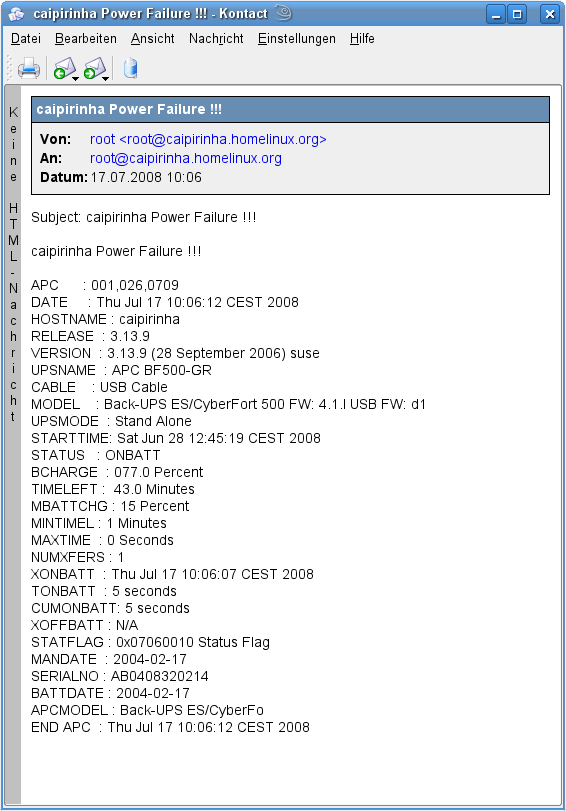

USV

Konzept

Die Stabilität eines Servers hängt auch von der Versorgungssicherheit des Stromnetzes ab. Hier drohen folgende Gefahren:

- Stromausfall

- Spannungsschwankungen

- Blitzschlag bzw. plötzliche Überspannung

Diese Ursachen können plötzlichen Systemabsturz oder gar Hardware-Schäden zur Folge haben und müssen daher vermieden werden. Glücklicherweise wird man mit solchen Problemen in Deutschland weniger geplagt als in weniger entwickelten Ländern, wo solche Probleme im wörtlichen Sinne an der Tagesordnung sind.

Um dennoch Risiken zu minimieren, ist der Caipirinha-Server nicht direkt ans Stromnetz angeschlossen, sondern er wird über eine “unterbrechungsfreie Stromversorgung” (USV) betrieben. Die Wahl fiel auf eine kleine SoHo-Version der Herstellers APC, in diesem Fall das Modell “BE700-GR“, weil diese preiswert zu bekommen war und über den USB-Anschluß mit dem Server verbunden werden kann. Sie bietet außerdem Blitzschutz (im üblichen Rahmen) für sie Stromversorgung und für eine durchgeschleifte Datenleitung, was hier für die DSL-Leitung benutzt wird.

Am Standort des Caipirinha-Servers ist daher nicht nur der Server selbst an die USV angeschlossen, sondern auch der WLAN-Router und die Telefone, so dass bei einem kurzfristigen Stromausfall keine Beeinträchtigung des WLANs oder des Telefonnetzes oder des Serverbetriebs auftritt.

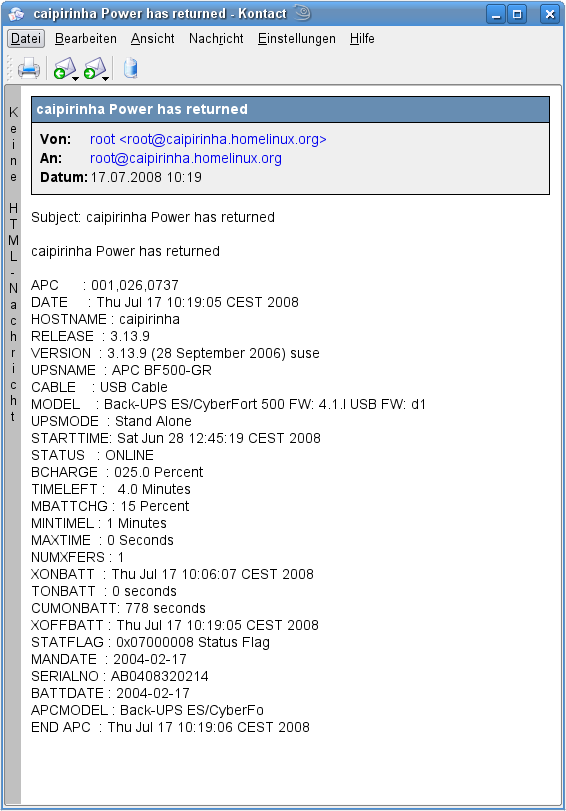

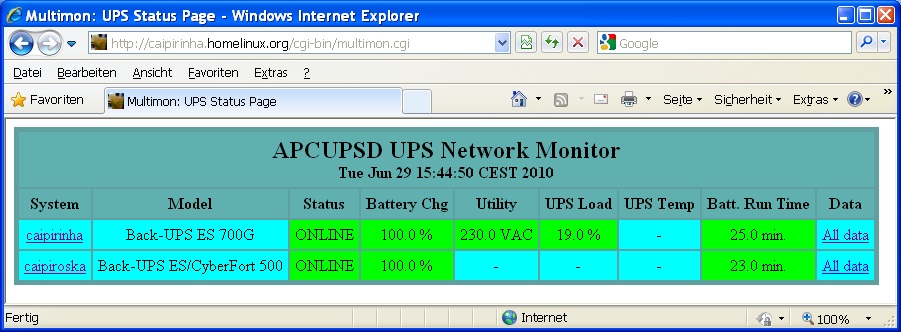

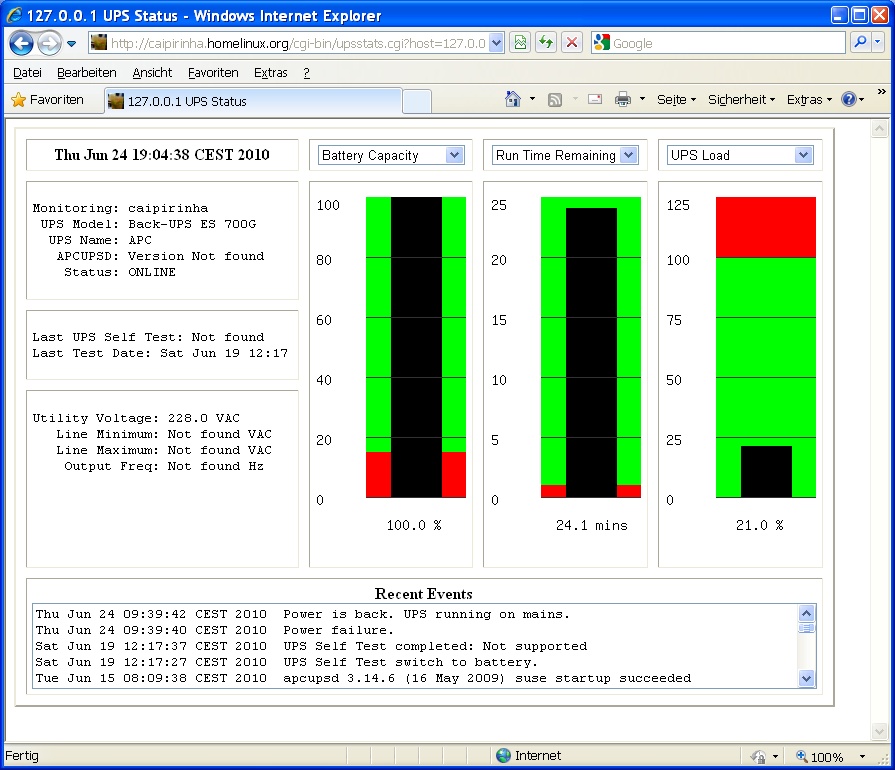

Einrichtung

Bei openSuSE-Linux gibt es glücklicherweise gleich ein ganzes Paket zur Kommunikation des Servers mit einer USV von APC. Dazu muss man das Paket apcupsd installieren. Nach der Installation befinden sich auf dem Server mehrere Programme, von denen der gleichnamige Dämon (apcupsd) das wichtigste ist. Dieser Dämon überwacht den Zustand der USV und kann in Abhängigkeit von gezielt diesem verschiedene Aktionen auslösen. Die zentrale Konfigurationsdatei ist /etc/apcupsd/apcupsd.conf. Die Default-Datei ist ausreichend kommentiert, so dass man sich gut darin zurechtfinden kann. Auf [1] gibt es außerdem eine sehr ausführliche und gut verständliche Dokumentation. Hier ist die aktuelle Konfiguration des Caipirinha-Servers abgedruckt: