Author: Gabriel Rüeck

Protected: Road Trip nach Portugal (II)

Learnings from Dynamic Electricity Pricing

Executive Summary

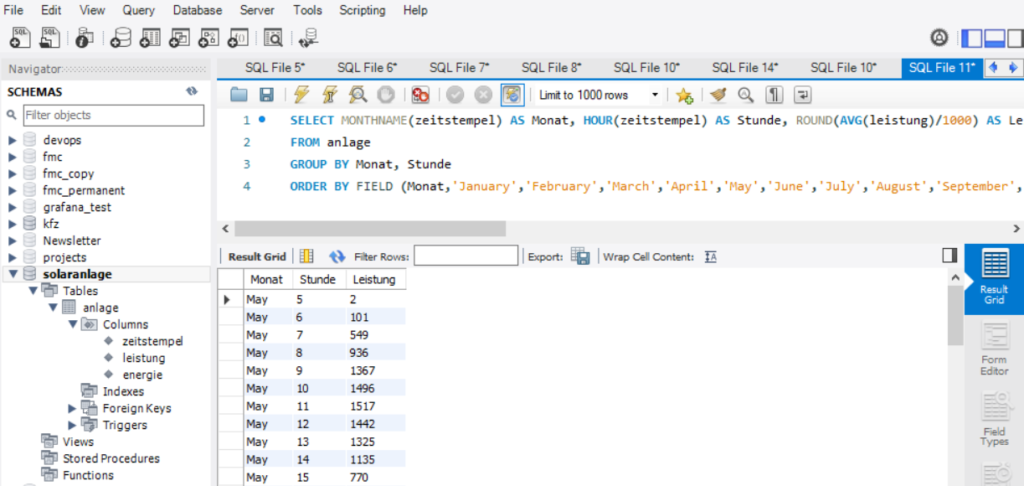

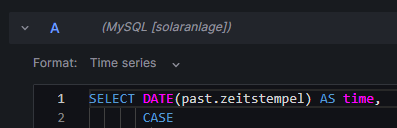

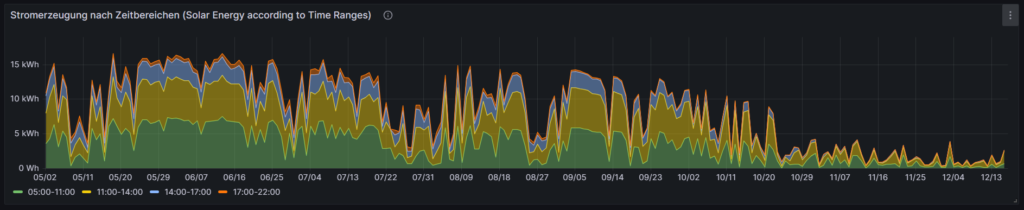

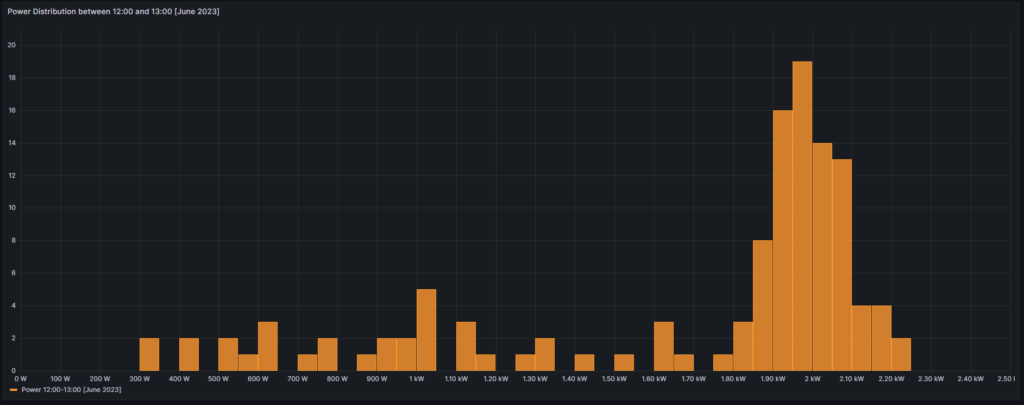

Unlike previous articles, I chose to split this new blog post into two parts. In the first part (Findings), I will elaborate on my findings as a consumer with dynamic electricity prices (day-ahead market) in connection with a small solar electricity generation unit. We will look at various visualizations that help to understand the impact and to gain some more insight in how dynamic electricity prices can be useful. In the second part (Annex: Technical Details), technically interested folks will find the respective queries and sample data with which they can replicate the findings or even do their own examination with the sample data. As in previous articles, Grafana is used in connection with a MariaDB database.

Background

On 1st of March 2024, I switched from a traditional electricity provider to one with dynamic day-ahead pricing, in my case, Tibber. I wanted to try this contractual model and see if I could successfully manage to shift chunks of high electricity consumption such as:

- … loading the battery-electric vehicle (BEV) or the plug-in hybrid car (PHEV)

- … washing clothes

- … drying clothes in the electric dryer

to those times of the day when the electricity price is lower. I also wanted to see if that makes economic sense for me. And, after all, it is fun to play around with data and gain new insights.

As my electricity supplier, I had chosen Tibber because they were the first one I got to know and they offer a device called Pulse which can connect a digital electricity meter to their infrastructure for metering and billing purposes. Furthermore, they do have an API [1] which allows me to read out my own data; that was very important for me. I understand that meanwhile, there are several providers Tibber that have similar models and comparable features.

Findings

Price and consumption patterns

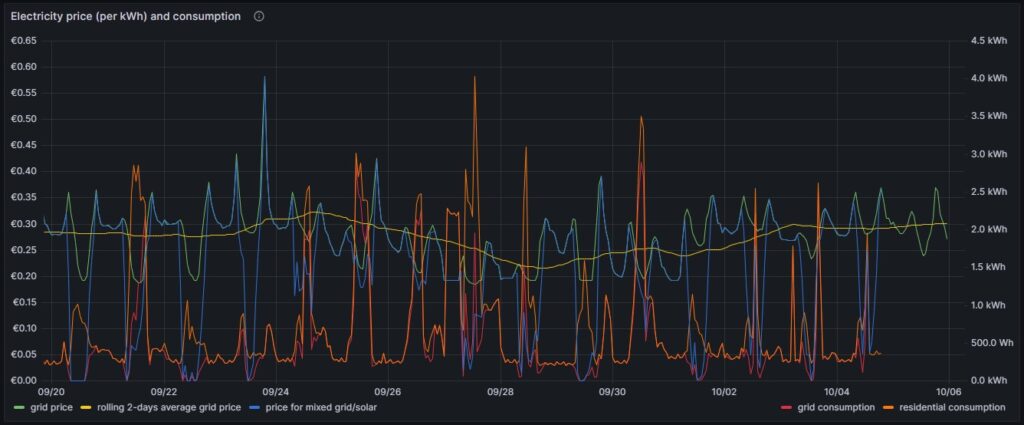

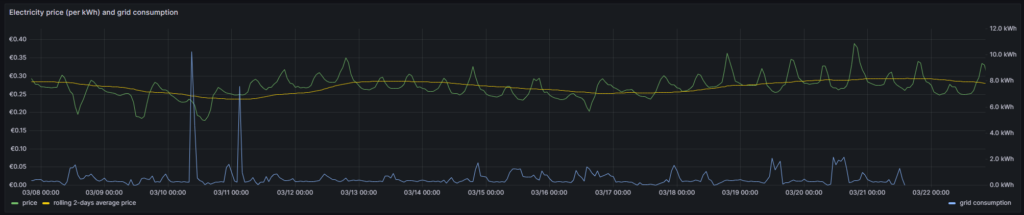

The graph below shows five curves and is an upgraded version of the respective graph in [6] visualizing data over two weeks:

As in [6], the green curve is the hourly price of the day-ahead market. It is well recognizable that the price has peaks in the evening and in the morning at breakfast time when residential consumption is high, but little solar energy is available in Germany. The yellow curve is a two-days floating average and shows that the average price is not below a fixed rate electricity contract, an important point that we shall discuss later. The orange curve is the consumption of my house; the higher peaks indicate times when I charged one of the cars using an ICCB (one phase, current: 10 A). The red curve shows the consumption of the grid. During the day, when there is sunshine, the red curve lies below the orange curve as a part of the overall consumed electricity comes from the solar panels. At nighttime, the red curve will exactly lie on the orange curve as there is no solar electricity generation. The blue curve shows the average electricity price per kWh based on a mixed calculation of the grid price and zero for my own solar electricity generation. One might argue whether zero is an adequate assumption as also solar panels cost money, but as I do have them installed already, I consider them to be sunk cost now. The blue curve show an interesting behavior: When my consumption is low, the blue curve shows a small average price. When the consumption of the house is less than the power that is generated by the solar panels, then the blue curve is flat zero. When, however, I consume a lot of power, then, the blue curve approaches the green curve. At nighttime when there is no solar energy generation, the blue curve lies identical with the green curve.

My goal is to consume more electricity in the times when either the green curve points to a low electricity price or when there is enough electricity generated by the solar panels so that my average price that I pay (blue curve) is reasonable low. This also explains why I mostly the ICCB (one phase, 10 A) to charge the cars as then, I still can get a good average price (although charging takes a lot more time then). I think that by looking at the curves, I have adapted my consumption pattern well to the varying electricity price.

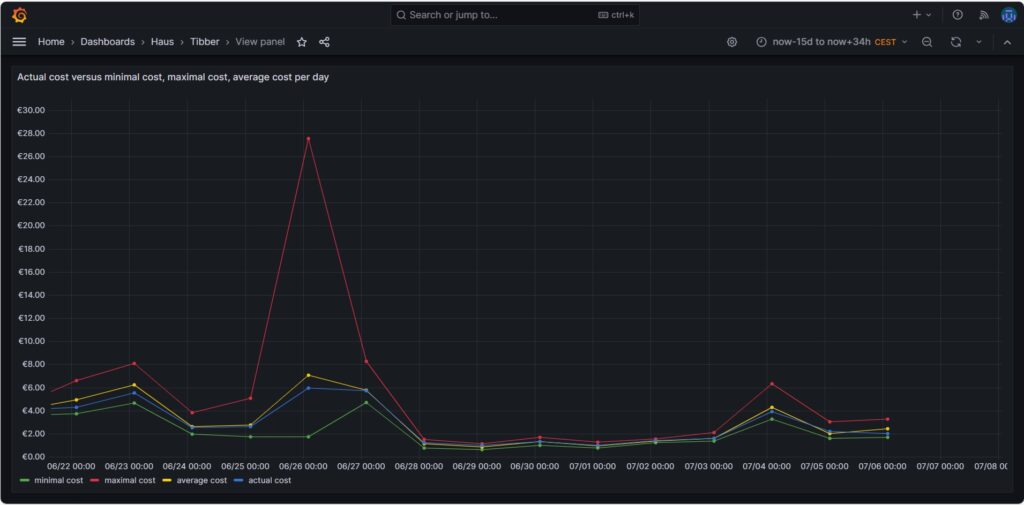

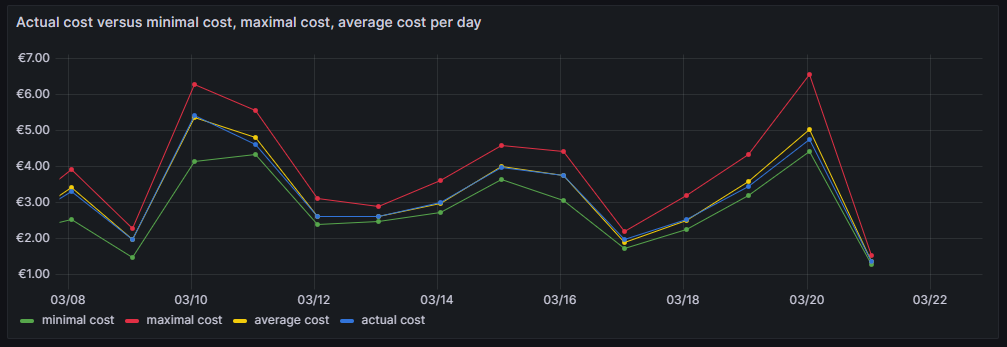

Actual cost, minimal cost, maximal cost, average cost per day

The graph above shows four curves. The green curve is the cost that I would have incurred if I had bought all the electricity of the respective day in the hour of the cheapest electricity price. This would only be possible if I had a battery that could bridge the remaining 23 hours of the day (and probably some hours more as the cheapest hour of the following day is not necessarily 00:00-01:00). The red curve is the cost that I would have incurred if I had bought all the electricity of the respective day in the hour of the most expensive electricity price. The yellow curve is the average cost of the respective day, the multiplication of the average price per kWh of that day by my consumed energy. The blue curve is the real cost that I have paid. If the blue curve is between the yellow curve and the green curve, then this is very good, and I have succeeded in shifting my consumption versus the hours with cheaper electricity. Without a battery, it is almost impossible to come very close to the green line.

The graph above shows one peculiarity as on 2024-06-26, there were some hours with an extremely high price, but that was due to a communication error that decoupled the auction in Germany from the rest of Europe [7], clearly (and hopefully) a one-time event.

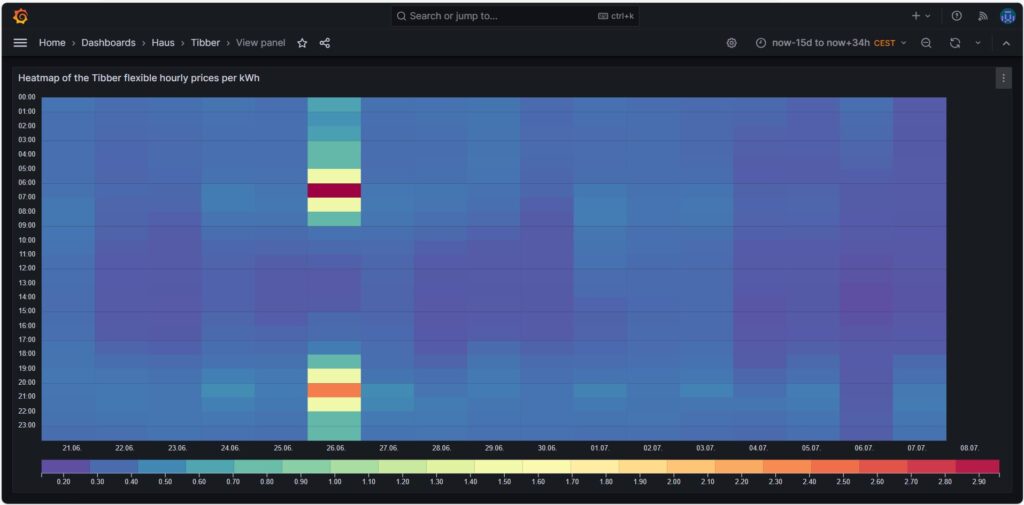

Price heatmap of the hourly prices per kWh

The one-time event with unusually high prices [7] is well visible in the price heatmap that was already introduced and explained in [6]. The one-time event overshadows all price fluctuations in the rest of the week.

Cost and amount of purchased electricity from grid with price indication

The first graph shown below was already introduced and explained in [6] and shows the cost of purchased electricity from the grid in 24 rectangles, whereby each rectangle represents an hour. The order of the rectangles starts with the most expensive hour at the top and ends with the least expensive hour at the bottom. The larger the rectangle, the more money has been spent in the respective hour. I already explained in [6] that the goal should be – if electricity has to be purchased from the grid at all – the purchases ideally should happen in times when the price is low. In the graph those are the rectangles with green or at maximum yellow color. The rectangles with orange and red color indicate purchases during times of a high electricity price. In reality, one will not be able to avoid purchases at times of a high price completely. My experience is that in summer, I let the air conditioning systems run also in the evening hours when the electricity price is higher, just to keep the house at reasonable temperatures inside. Similarly, in spring and autumn, I opt for leaving the central heating switched off and try to heat the rooms in which I am usually with the air conditioning systems (in heating mode) as in spring and autumn, the difference in temperature between outside and inside is not too high, and the air conditioning systems will have a high efficiency.

Then, for 13-Oct (10/13), one can see that in fact I bought substantial electricity at high prices. The reason for that was that I returned home at night and had to re-charge the car for the next day. So, one cannot always avoid purchasing electricity at high prices.

The next chart offers another view on the same topic. Rather than looking at the cost of the purchased electricity, we look at the amount of purchased electricity. This offers an interesting insight. Except for 13-Oct, the amount of electricity that is purchased at hours with a high price, is less than 5 kWh. This means that with an energy-storage system (ESS), it should be possible to charge a battery of around 5 kWh during times of a lower electricity price and to discharge the battery and supply the house during times of a high electricity price so that ideally, there would be no or only minimally sized orange and red rectangles. Of course, this only makes sense if the low prices and the high prices per day differ substantially as the ESS will have losses of 10%…20%. And in fact, this is exactly an idea that I am planning to try out within the next months (so stay tuned).

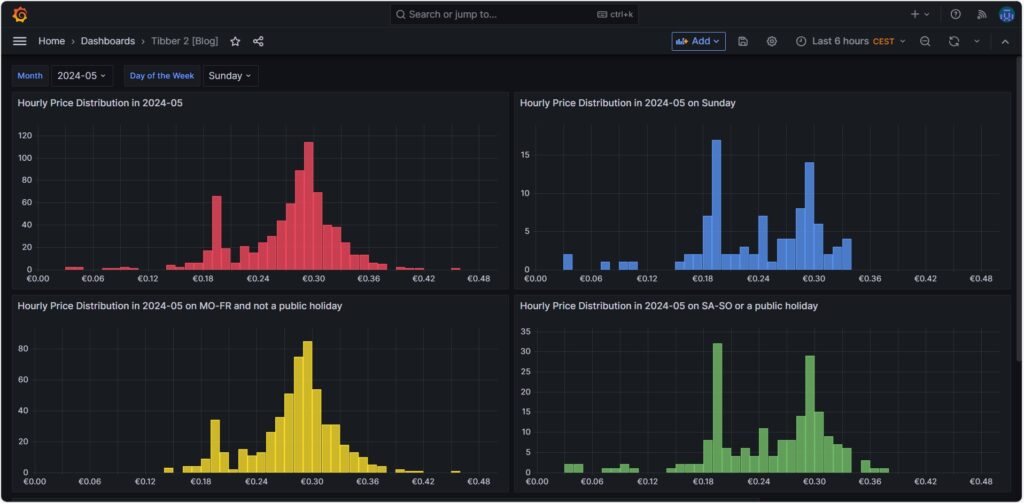

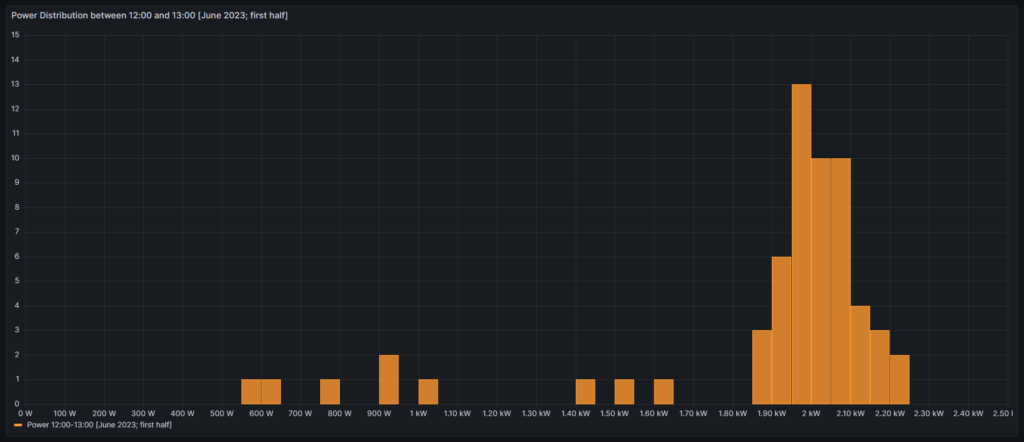

Hourly price distribution

Another finding which was interesting for me was that the price of electricity is not necessarily cheaper on the weekend (at least not in May 2024). One might assume that because industry consumption is low on the weekend, the price of electricity is lower. And that is true to some parts, as the lowest prices that occur within a whole month tend to happen on the weekend (green histogram). We can also see a peak at around 19 ¢/kWh in the green histogram which is much lower in the yellow histogram. However, there are also many hours with prices that are similar to the prices that we have during the week (yellow histogram).

Monthly hourly price curves

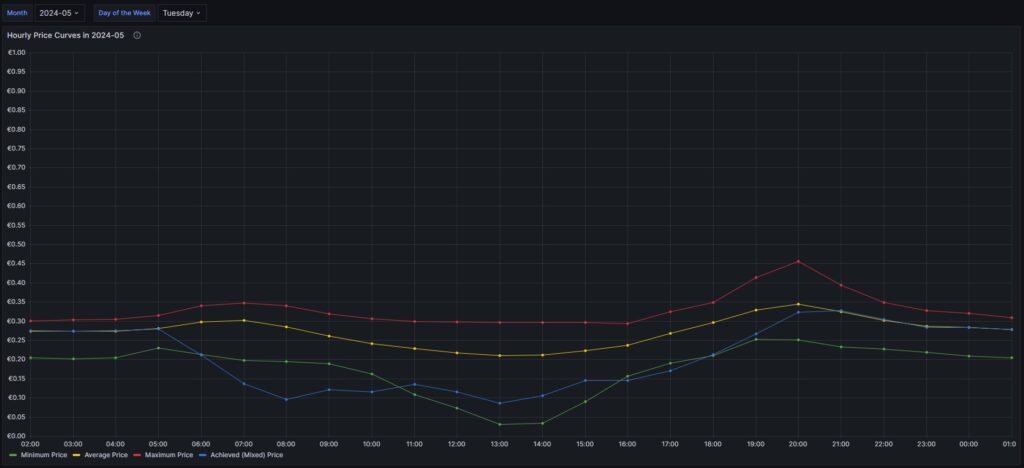

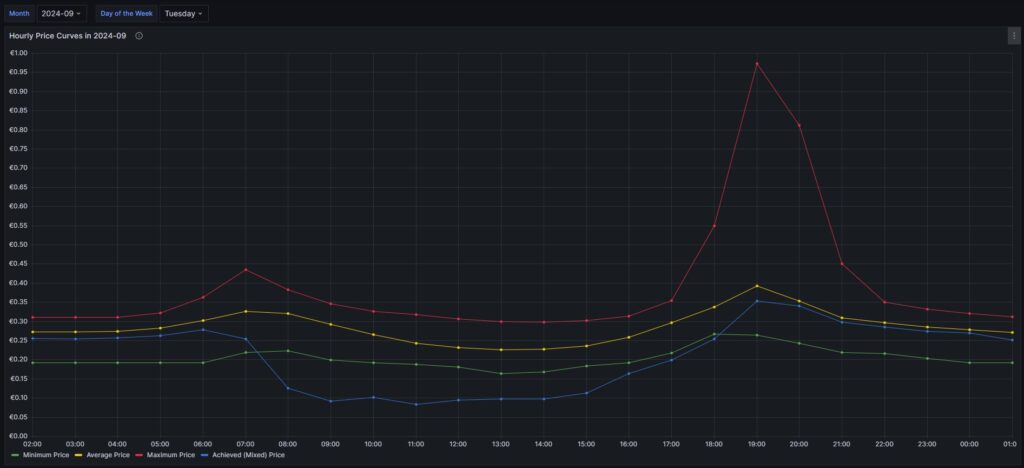

The following three graphs show the hourly price curves over the hour of the day per month; the selected Day of the Week is irrelevant for this graph as only the variable Month is used.

The green curve shows the minimum hourly price per kWh that occurred in the selected month. The red curve shows the maximum hourly price per kWh that occurred in the selected month. The yellow curve shows the average hourly price per kWh that occurred in the selected month. The blue curve shows the average hourly price per kWh that I have experienced in the selected month, calculating my own solar electricity generation as “free of cost” (like the blue curve of the graph in chapter Price and consumption patterns).

There are a couple of interesting findings that can easily be seen in the curves:

- Prices tend to be higher in the early morning (06:00-08:00) and in the evening (18:00-21:00). The evening price peak is higher than the one in the morning.

- The price gap in the morning, but especially in the evening hours between the minimum and the maximum hourly price seems to increase from May to September. I do not yet know if this is because of the season, or if the market has become more volatile in general.

- In my personal situation (the solar modules face East), I am not bothered by the price peak in the morning, as I seem to have already quite a good power generation (blue curve descending in the morning). That is, however, different in the evening; then, I am personally affected by the price peak.

For me, this is an indication that it might make sense to install a battery and charge it during the day and discharge it especially in the evening hours (18:00-21:00) when the price peaks.

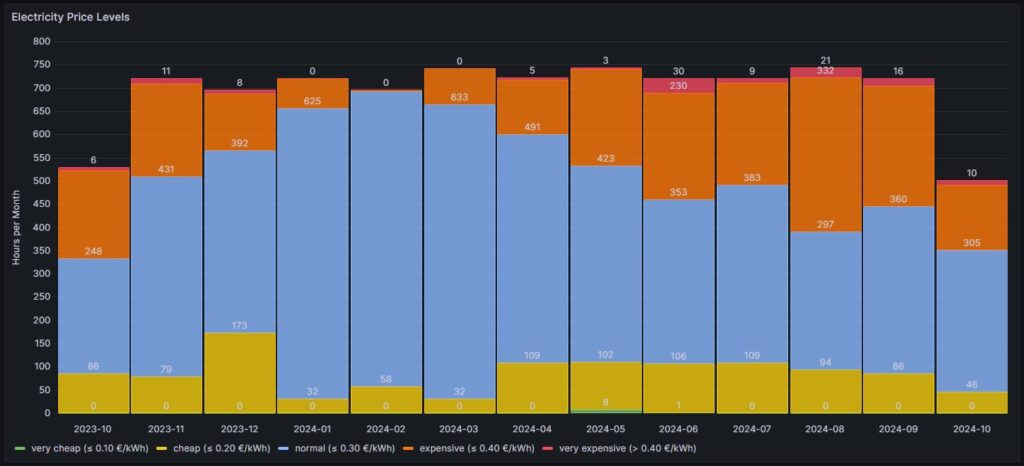

Electricity price levels

In order to look into the hourly prices from a different perspective, I have grouped the hourly prices into five categories:

- Price per kWh ≤10 ¢/kWh (green block), labelled as very cheap

- Price per kWh >10 ¢/kWh and ≤20 ¢/kWh (yellow block), labelled as cheap

- Price per kWh >20 ¢/kWh and ≤30 ¢/kWh (blue block), labelled as normal

- Price per kWh >30 ¢/kWh and ≤40 ¢/kWh (orange block), labelled as expensive

- Price per kWh >40 ¢/kWh (red block), labelled as very expensive

For each month (except for Oct 2023, Oct 2024) the sum of the blocks in different colors are the hours of the respective month; depending on the number of days per month, the number of hours vary, of course. Larger blocks correspond to more hours in the respective price category. Do not pay attention to the numbers listed in the graph in white color as some numbers are missing. I will give a numerical statistic below the graph.

| Month | very cheap (≤ 10 ¢/kWh) | cheap (≤ 20 ¢/kWh) | normal (≤ 30 ¢/kWh) | expensive (≤ 40 ¢/kWh) | very expensive (> 40 ¢/kWh) |

| 2023-10 | 0 | 86 | 248 | 189 | 6 |

| 2023-11 | 0 | 79 | 431 | 199 | 11 |

| 2023-12 | 0 | 173 | 392 | 123 | 8 |

| 2024-01 | 0 | 32 | 625 | 63 | 0 |

| 2024-02 | 0 | 58 | 635 | 3 | 0 |

| 2024-03 | 0 | 32 | 633 | 78 | 0 |

| 2024-04 | 0 | 109 | 491 | 117 | 5 |

| 2024-05 | 8 | 102 | 423 | 208 | 3 |

| 2024-06 | 1 | 106 | 353 | 230 | 30 |

| 2024-07 | 0 | 109 | 383 | 219 | 9 |

| 2024-08 | 0 | 94 | 297 | 332 | 21 |

| 2024-09 | 0 | 86 | 360 | 258 | 16 |

| 2024-10 | 0 | 46 | 305 | 141 | 10 |

Before I had generated this graph and the ones of the previous chapter, my initial assumption had been that in summer, the prices would go down compared to winter. And to some part, this is true. There is a higher number of hours in the category “cheap” (yellow block). However, the category “expensive” (orange block) increases even more in summer. And even the category “very expensive” (red block) becomes noticeable whereas the category “very cheap” (green block) does not manifest itself, not even in summer. I do not have an explanation for this; my assumption though is that maybe in winter, some fossil power stations are active that are switched off in summer and that therefore, the price is much more susceptible to the change in the electricity offer over the day. I suspect that during the day, there is mostly enough solar electricity generation, and in the evening, gas power stations need to be started that then determine the price level and lead to increased prices. However, I do not have any statistics underpinning this assumption.

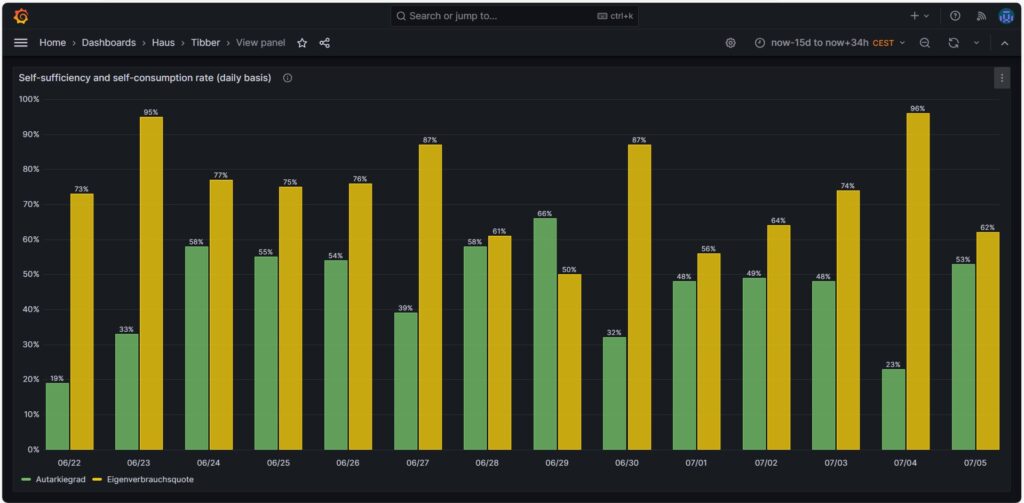

Self-sufficiency (Autarkie) and sef-consumption (Eigenverbrauch)

I am not much of a friend of looking at the values of self-sufficiency (de: Autarkie) and self-consumption (de: Eigenverbrauch), and this is for the following reasons:

- If you do not live in a place where an electricity grid is not available or where the grid is very unreliable, it does not make sense to strive for complete self-sufficiency. It is still economically best if you use your electricity during the day and consume electricity from the grid at night. Similarly, you will probably need to buy electricity from the grid in winter, at least in Central or Northern Europe as in winter, the output from your own solar electricity generation is usually insufficient to power the consumption of your house.

- The value for self-consumption varies strongly with your consumption pattern and with the solar intensity on the respective day. The only conclusion that you can draw from observing the self-consumption values over a longer time frame is:

- If your values are constantly low, then you probably have spent into too large of a solar system.

- If your values are constantly high or 100%, then it might make sense to add some more solar panels to your system.

Nevertheless, for the sake of completion, here are some example values from July of this year.

Conclusion

- The field of dynamic electricity pricing is technically very interesting and certainly helps to sharpen awareness for the need to consume electricity precisely then, when there is a lot of it available.

- Economically, having a dynamic electricity price can make sense under certain conditions. I side with [8] when I say:

- For a household without an electric car or another large electricity consumer, it does not make sense to use dynamic electricity pricing. The consumption of the washing machine, the dryer, and the dishwasher are not large enough to really draw a benefit.

- For a household with an electric car that has a large battery (e.g., some 80+ kWh, unfortunately not my use case), it makes sense then when you have the flexibility to charge the car during times with a low electricity price.

Annex: Technical Details

Preconditions

In order to use the approach described below, you should:

- … have access to a Linux machine or account

- … have a MySQL or MariaDB database server installed, configured, up and running

- … have the package Grafana [2] installed, configured, up and running

- … have the package Node-RED [3] installed, configured, up and running

- … have access to the data of your own electricity consumption and pricing information of your supplier or use the dataset linked below in this blog

- … have some basic knowledge of how to operate in a Linux environment and some basic understanding of shell scripts

- … have read and understood the previous blog post [6], especially the part on how to connect Grafana to the MySQL or MariaDB database server

The Database

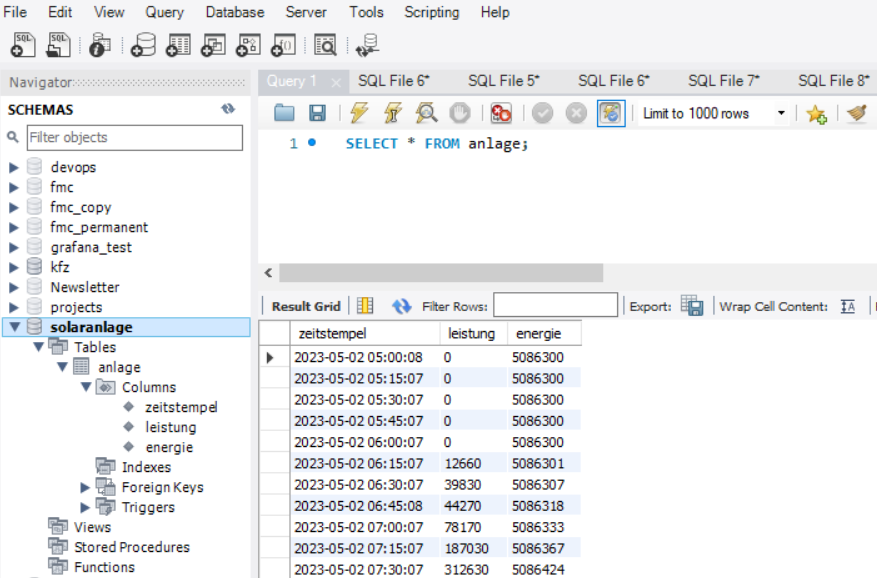

The base for the following visualizations is a fully populated MariaDB database with the following structure:

# Datenbank für Analysen mit Tibber

# V2.0; 2024-09-12, Gabriel Rüeck <gabriel@rueeck.de>, <gabriel@caipirinha.spdns.org>

# Delete existing databases

REVOKE ALL ON tibber.* FROM 'gabriel';

DROP DATABASE tibber;

# Create a new database

CREATE DATABASE tibber DEFAULT CHARSET utf8mb4 COLLATE utf8mb4_general_ci;

GRANT ALL ON tibber.* TO 'gabriel';

USE tibber;

SET default_storage_engine=Aria;

CREATE TABLE preise (uid INT UNSIGNED NOT NULL PRIMARY KEY AUTO_INCREMENT,\

zeitstempel DATETIME NOT NULL UNIQUE,\

preis DECIMAL(5,4),\

niveau ENUM('VERY_CHEAP','CHEAP','NORMAL','EXPENSIVE','VERY_EXPENSIVE'));

CREATE TABLE verbrauch (uid INT UNSIGNED NOT NULL PRIMARY KEY AUTO_INCREMENT,\

zeitstempel DATETIME NOT NULL UNIQUE,\

energie DECIMAL(5,3),\

kosten DECIMAL(5,4));

CREATE TABLE zaehler (uid INT UNSIGNED NOT NULL PRIMARY KEY AUTO_INCREMENT,\

zeitstempel TIMESTAMP NOT NULL DEFAULT UTC_TIMESTAMP,\

bezug DECIMAL(9,3) DEFAULT NULL,\

erzeugung DECIMAL(9,3) DEFAULT NULL,\

einspeisung DECIMAL(9,3) DEFAULT NULL);

Data Acquisition

Data for the MariaDB database is acquired with two different methods.

The tables preise and verbrauch are populated with the following bash script. You will need a valid API token of your Tibber account [4]; in this script, you need to replace _API_TOKEN with your personal API token ([4]) and _HOME_ID with your personal Home ID ([1]). The script further assumes that the user gabriel can login to the MySQL database without further authentication; this can be achieved by writing the MySQL login information in the file ~/.my.cnf.

#!/bin/bash

#

# Dieses Skript liest Daten für die Tibber-Datenbank und speichert das Ergebnis in einer MySQL-Datenbank ab.

# Das Skript wird einmal pro Tag aufgerufen.

#

# V2.0; 2024-09-12, Gabriel Rüeck <gabriel@rueeck.de>, <gabriel@caipirinha.spdns.org>

#

# CONSTANTS

declare -r MYSQL_DATABASE='tibber'

declare -r MYSQL_SERVER='localhost'

declare -r MYSQL_USER='gabriel'

declare -r TIBBER_API_TOKEN='_API_TOKEN'

declare -r TIBBER_API_URL='https://api.tibber.com/v1-beta/gql'

declare -r TIBBER_HOME_ID='_HOME_ID'

# VARIABLES

# PROGRAM

# Read price information for tomorrow

curl -s -S -H "Authorization: Bearer ${TIBBER_API_TOKEN}" -H "Content-Type: application/json" -X POST -d '{ "query": "{viewer {home (id: \"'"${TIBBER_HOME_ID}"'\") {currentSubscription {priceInfo {tomorrow {total startsAt level }}}}}}" }' "${TIBBER_API_URL}" | jq -r '.data.viewer.home.currentSubscription.priceInfo.tomorrow[] | .total, .startsAt, .level' | while read cost; do

read LINE

read level

timestamp=$(echo "${LINE%%+*}" | tr 'T' ' ')

# Determine timezone offset and store the UTC datetime in the database

offset="${LINE:23}"

mysql --default-character-set=utf8mb4 -B -N -r -D "${MYSQL_DATABASE}" -h ${MYSQL_SERVER} -u ${MYSQL_USER} -e "INSERT INTO preise (zeitstempel,preis,niveau) VALUES (DATE_SUB(\"${timestamp}\",INTERVAL \"${offset}\" HOUR_MINUTE),${cost},\"${level}\");"

done

# Read consumption information from the past 24 hours

curl -s -S -H "Authorization: Bearer ${TIBBER_API_TOKEN}" -H "Content-Type: application/json" -X POST -d '{ "query": "{viewer {home (id: \"'"${TIBBER_HOME_ID}"'\") {consumption (resolution: HOURLY, last: 24) {nodes {from to cost consumption}}}}}" }' "${TIBBER_API_URL}" | jq -r '.data.viewer.home.consumption.nodes[] | .from, .consumption, .cost' | while read LINE; do

read consumption

read cost

timestamp=$(echo "${LINE%%+*}" | tr 'T' ' ')

# Determine timezone offset and store the UTC datetime in the database

offset="${LINE:23}"

mysql --default-character-set=utf8mb4 -B -N -r -D "${MYSQL_DATABASE}" -h ${MYSQL_SERVER} -u ${MYSQL_USER} -e "INSERT INTO verbrauch (zeitstempel,energie,kosten) VALUES (DATE_SUB(\"${timestamp}\",INTERVAL \"${offset}\" HOUR_MINUTE),${consumption},${cost});"

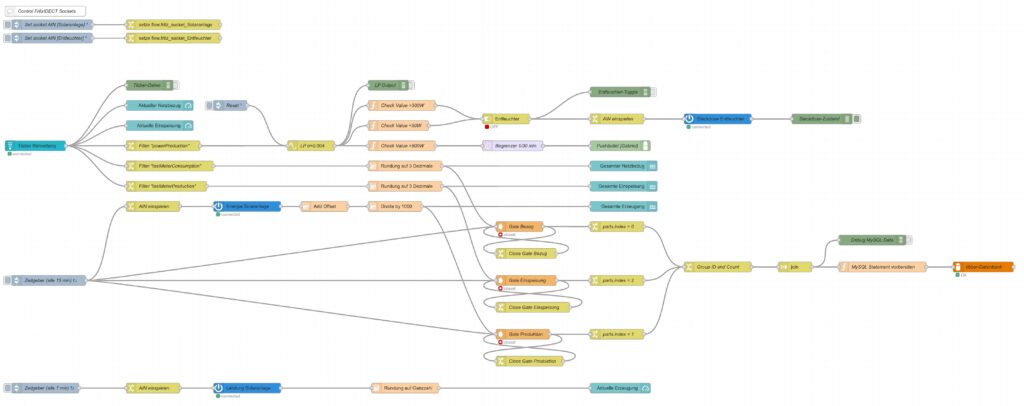

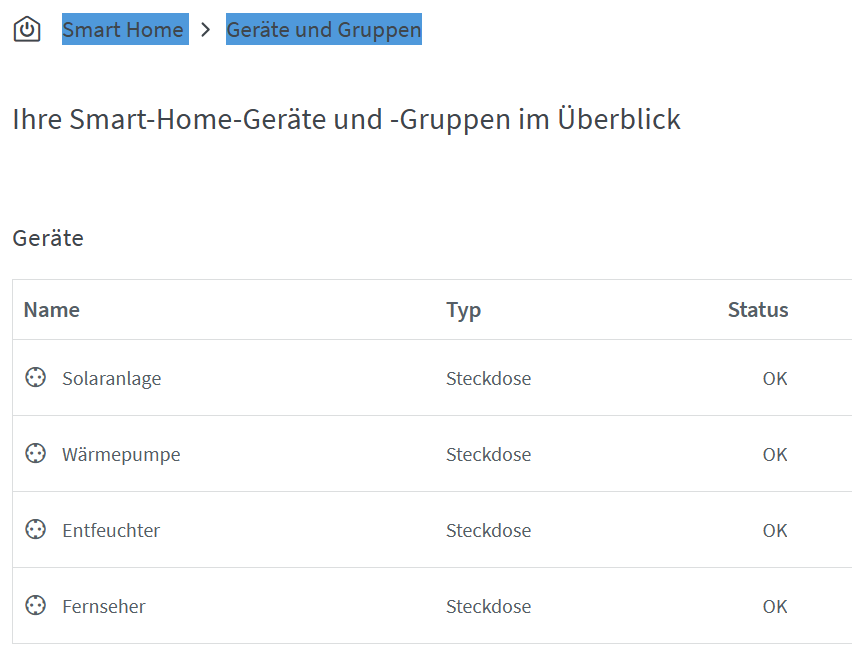

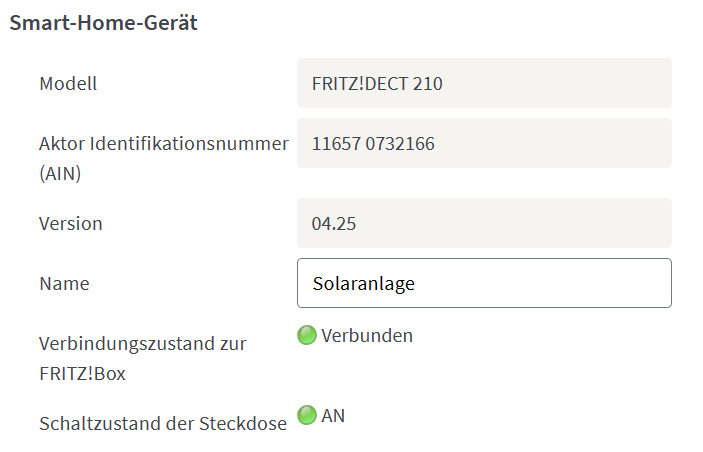

doneThe table zaehler is populated via a Node-RED flow that reads data from the Tibber API [4] as well as from the AVM socket FRITZ!DECT 210 and stores new values for the columns bezug, erzeugung, einspeisung every 15 minutes. I used a Node-RED flow for this because otherwise, I would have to program code for the access of the Tibber API [4] and include code [5] for the AVM socket FRITZ!DECT 210. However, I do plan to enlarge the functionality later with an Energy Storage System (ESS) and a battery, and so Node-RED seemed suitable to me. The flow is shown here; it contains some additional nodes apart from the now needed functionality, and it is still in development:

I have decided not to publish the JSON code here as I am not yet entirely familiar with Node-RED [3] and would not know how to remove my personal tokens from the JSON code.

Queries for the Graphs

Price and Consumption Patterns

The graph is based on four queries that correspond to the green and yellow, to the red, to the orange and to the blue curve:

SELECT zeitstempel,

preis AS 'grid price',

AVG(preis) OVER (ORDER BY zeitstempel ROWS BETWEEN 47 PRECEDING AND CURRENT ROW) as 'rolling 2-days average grid price'

FROM preise;

SELECT zeitstempel, energie AS 'grid consumption' FROM verbrauch;

SELECT alt.zeitstempel,

(neu.bezug-alt.bezug+neu.erzeugung-alt.erzeugung-neu.einspeisung+alt.einspeisung) AS 'residential consumption'

FROM zaehler AS neu JOIN zaehler AS alt ON neu.uid=(alt.uid+4)

WHERE MINUTE(alt.zeitstempel)=0;

SELECT alt.zeitstempel,

ROUND(preise.preis*(neu.bezug-alt.bezug)/(neu.bezug-alt.bezug+neu.erzeugung-alt.erzeugung-neu.einspeisung+alt.einspeisung),4) AS 'price for mixed grid/solar'

FROM zaehler AS neu

JOIN zaehler AS alt ON neu.uid=(alt.uid+4)

LEFT JOIN preise ON DATE_FORMAT(preise.zeitstempel, "%Y%m%d%H%i")=DATE_FORMAT(alt.zeitstempel, "%Y%m%d%H%i")

WHERE MINUTE(alt.zeitstempel)=0

AND preise.zeitstempel>=DATE_SUB(CURRENT_DATE(),INTERVAL 30 DAY);Actual cost, minimal cost, maximal cost, average cost per day

This graph uses only one query:

SELECT DATE(preise.zeitstempel) AS 'date',

MIN(preise.preis)*SUM(verbrauch.energie) AS 'minimal cost',

MAX(preise.preis)*SUM(verbrauch.energie) AS 'maximal cost',

AVG(preise.preis)*SUM(verbrauch.energie) AS 'average cost',

SUM(verbrauch.kosten) AS 'actual cost'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

GROUP BY DATE(preise.zeitstempel);Cost and amount of purchased electricity from grid with price indication

The first graph (Cost of purchased electricity from grid with price indication) uses the query:

SELECT DATE_FORMAT(preise.zeitstempel,'%m/%d') AS 'Datum',

ROW_NUMBER() OVER (PARTITION BY DATE(preise.zeitstempel) ORDER BY preise.preis ASC) AS 'Row',

verbrauch.kosten AS 'Kosten'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>DATE_ADD(CURDATE(), INTERVAL -15 DAY)

ORDER BY DATE(preise.zeitstempel) ASC, preise.preis ASC;The second graph (Amount of purchased electricity from grid with price indication) uses the query:

SELECT DATE_FORMAT(preise.zeitstempel,'%m/%d') AS 'Datum',

ROW_NUMBER() OVER (PARTITION BY DATE(preise.zeitstempel) ORDER BY preise.preis ASC) AS 'Row',

verbrauch.energie AS 'Energie'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>DATE_ADD(CURDATE(), INTERVAL -15 DAY)

ORDER BY DATE(preise.zeitstempel) ASC, preise.preis ASC;Hourly price distribution

The red histogram uses the query:

SELECT preis FROM preise WHERE DATE_FORMAT(zeitstempel,'%Y-%m')='${Month}';The blue histogram uses the query:

SELECT preis FROM preise

WHERE DATE_FORMAT(zeitstempel,'%Y-%m')='${Month}'

AND DAYNAME(zeitstempel)='${Weekday}';The yellow histogram uses the query:

SELECT preis FROM preise

WHERE DATE_FORMAT(zeitstempel,'%Y-%m')='${Month}'

AND WEEKDAY(zeitstempel)<5

AND DATE(zeitstempel) NOT IN (SELECT datum FROM aux_feiertage);The green histogram uses the query:

SELECT preis FROM preise

WHERE DATE_FORMAT(zeitstempel,'%Y-%m')='${Month}'

AND WEEKDAY(zeitstempel)>=5

OR DATE(zeitstempel) IN (SELECT datum FROM aux_feiertage WHERE DATE_FORMAT(zeitstempel,'%Y-%m')='${Month}');The tables use these variables on the respective Grafana dasboard as well as the additional helper table aux_feiertage (indicating national holidays) in the MariaDB database.

The variable Month on the Grafana dasboard has the query:

SELECT DISTINCT(DATE_FORMAT(zeitstempel,'%Y-%m')) FROM preise;The variable Weekday on the Grafana dasboard has the query:

SELECT aux_wochentage.wochentag AS Weekday

FROM aux_wochentage JOIN aux_sprachen ON aux_sprachen.id_sprache=aux_wochentage.id_sprache

WHERE aux_sprachen.sprachcode='en'

ORDER BY aux_wochentage.id_tag ASC;The last query also uses the tables aux_wochentage and aux_sprachen in order to offer a multi-lingual interface (not really necessary for the functionality). The tables are:

CREATE TABLE aux_wochentage (id_tag TINYINT UNSIGNED NOT NULL,\

id_sprache TINYINT UNSIGNED NOT NULL,\

wochentag VARCHAR(30) NOT NULL);

CREATE TABLE aux_sprachen (id_sprache TINYINT UNSIGNED NOT NULL PRIMARY KEY,\

sprachcode CHAR(2),\

sprache VARCHAR(30));

CREATE TABLE aux_feiertage (uid SMALLINT UNSIGNED NOT NULL PRIMARY KEY AUTO_INCREMENT,\

datum DATE NOT NULL,\

beschreibung VARCHAR(50));

INSERT INTO aux_sprachen VALUES (0,'en','English'),\

(1,'de','Deutsch'),\

(2,'pt','Português'),\

(3,'zh','中文');

INSERT INTO aux_wochentage VALUES (0,0,'Monday'),\

(1,0,'Tuesday'),\

(2,0,'Wednesday'),\

(3,0,'Thursday'),\

(4,0,'Friday'),\

(5,0,'Saturday'),\

(6,0,'Sunday'),\

(0,1,'Montag'),\

(1,1,'Dienstag'),\

(2,1,'Mittwoch'),\

(3,1,'Donnerstag'),\

(4,1,'Freitag'),\

(5,1,'Samstag'),\

(6,1,'Sonntag'),\

(0,2,'segunda-feira'),\

(1,2,'terça-feira'),\

(2,2,'quarta-feira'),\

(3,2,'quinta-feira'),\

(4,2,'sexta-feira'),\

(5,2,'sábado'),\

(6,2,'domingo'),\

(0,3,'星期一'),\

(1,3,'星期二'),\

(2,3,'星期三'),\

(3,3,'星期四'),\

(4,3,'星期五'),\

(5,3,'星期六'),\

(6,3,'星期日');

INSERT INTO aux_feiertage (datum, beschreibung) VALUES ('2023-01-01','Neujahr'),\

('2023-01-06','Heilige Drei Könige'),\

('2023-04-07','Karfreitag'),\

('2023-04-10','Ostermontag'),\

('2023-05-01','Tag der Arbeit'),\

('2023-05-18','Christi Himmelfahrt'),\

('2023-05-29','Pfingstmontag'),\

('2023-06-08','Fronleichnam'),\

('2023-08-15','Mariä Himmelfahrt'),\

('2023-10-03','Tag der Deutschen Einheit'),\

('2023-11-01','Allerheiligen'),\

('2023-12-25','1. Weihnachtstag'),\

('2023-12-26','2. Weihnachtstag'),\

('2024-01-01','Neujahr'),\

('2024-01-06','Heilige Drei Könige'),\

('2024-03-29','Karfreitag'),\

('2024-04-01','Ostermontag'),\

('2024-05-01','Tag der Arbeit'),\

('2024-05-09','Christi Himmelfahrt'),\

('2024-05-20','Pfingstmontag'),\

('2024-05-30','Fronleichnam'),\

('2024-08-15','Mariä Himmelfahrt'),\

('2024-10-03','Tag der Deutschen Einheit'),\

('2024-11-01','Allerheiligen'),\

('2024-12-25','1. Weihnachtstag'),\

('2024-12-26','2. Weihnachtstag'),\

('2025-01-01','Neujahr'),\

('2025-01-06','Heilige Drei Könige'),\

('2025-04-18','Karfreitag'),\

('2025-04-21','Ostermontag'),\

('2025-05-01','Tag der Arbeit'),\

('2025-05-29','Christi Himmelfahrt'),\

('2025-06-09','Pfingstmontag'),\

('2025-06-19','Fronleichnam'),\

('2025-08-15','Mariä Himmelfahrt'),\

('2025-10-03','Tag der Deutschen Einheit'),\

('2025-11-01','Allerheiligen'),\

('2025-12-25','1. Weihnachtstag'),\

('2025-12-26','2. Weihnachtstag');Monthly hourly price curves

This graph uses only one query:

SELECT preise.zeitstempel AS 'time',

MIN(preise.preis) AS 'Minimum Price',

ROUND(AVG(preise.preis),4) AS 'Average Price',

MAX(preise.preis) AS 'Maximum Price',

ROUND((SUM(verbrauch.kosten)/(SUM(neu.bezug)-SUM(alt.bezug)+SUM(neu.erzeugung)-SUM(alt.erzeugung)-SUM(neu.einspeisung)+SUM(alt.einspeisung))),4) AS 'Achieved (Mixed) Price'

FROM preise

INNER JOIN verbrauch ON preise.zeitstempel=verbrauch.zeitstempel

LEFT JOIN zaehler AS alt ON DATE_FORMAT(alt.zeitstempel,"%Y%m%d%H%i")=DATE_FORMAT(preise.zeitstempel,"%Y%m%d%H%i")

INNER JOIN zaehler AS neu ON neu.uid=(alt.uid+4)

WHERE DATE_FORMAT(preise.zeitstempel,'%Y-%m')='${Month}'

GROUP BY TIME(preise.zeitstempel);It is important to keep in mind that the results from the query show the timespan of the first day of the month (and only the first day) between 00:00 UTC and 23:59 UTC; hence the time window on the Grafana dashboard has to be chosen accordingly (e.g. from 2024-07-01 02:00:00 to 2024-07-02 01:00:00 in the timezone CEST).

Electricity price levels

This graph uses only one query:

SELECT DATE_FORMAT(zeitstempel,'%Y-%m') AS 'Month',

COUNT(CASE WHEN preis<=0.1 THEN preis END) AS 'very cheap (≤ 0.10 €/kWh)',

COUNT(CASE WHEN preis>0.1 AND preis<=0.2 THEN preis END) AS 'cheap (≤ 0.20 €/kWh)',

COUNT(CASE WHEN preis>0.2 AND preis<=0.3 THEN preis END) AS 'normal (≤ 0.30 €/kWh)',

COUNT(CASE WHEN preis>0.3 AND preis<=0.4 THEN preis END) AS 'expensive (≤ 0.40 €/kWh)',

COUNT(CASE WHEN preis>0.4 THEN preis END) AS 'very expensive (> 0.40 €/kWh)'

FROM preise

GROUP BY Month;Self-sufficiency (Autarkie) and sef-consumption (Eigenverbrauch)

This graph uses only one query:

SELECT DATE_FORMAT(alt.zeitstempel,'%m/%d') AS 'Datum',

ROUND((neu.erzeugung-alt.erzeugung-neu.einspeisung+alt.einspeisung)*100/(neu.bezug-alt.bezug+neu.erzeugung-alt.erzeugung-neu.einspeisung+alt.einspeisung)) AS 'Autarkiegrad',

ROUND((neu.erzeugung-alt.erzeugung-neu.einspeisung+alt.einspeisung)*100/(neu.erzeugung-alt.erzeugung)) AS 'Eigenverbrauchsquote'

FROM zaehler AS neu

JOIN zaehler AS alt ON neu.uid=(alt.uid+96)

WHERE HOUR(alt.zeitstempel)=0

AND MINUTE(alt.zeitstempel)=0

AND alt.zeitstempel>=DATE_SUB(CURRENT_DATE(),INTERVAL 14 DAY);

Files

The following dataset was used for the graphs:

Sources

- [1] = Tibber Developer

- [2] = Download Grafana | Grafana Labs

- [3] = Node-RED

- [4] = Tibber Developer: Communicating with the API

- [5] = Smarthome: AVM-Steckdosen per Skript auslesen

- [6] = Grafana Visualizations (Part 2)

- [7] = Strom: heute Extrempreise für Kunden im EPEX SPOT – ISPEX

- [8] = Dynamischer Stromtarif – Warum fallen ALLE darauf rein?

Disclaimer

- Program codes and examples are for demonstration purposes only.

- Program codes are not recommended be used in production environments without further enhancements in terms of speed, failure-tolerance or cyber-security.

- While program codes have been tested, they might still contain errors.

- I am neither affiliated nor linked to companies named in this blog post.

Grafana Visualizations (Part 2)

Executive Summary

In this article, we use Grafana in order to examine real-world data of electricity consumption stored in a MariaDB database. As dynamic pricing (day-ahead market) is used, we also try to investigate how well I have fared so far with dynamic pricing.

Background

On 1st of March 2024, I switched from a traditional electricity provider to one with dynamic day-ahead pricing, in my case, Tibber. I wanted to try this contractual model and see if I could successfully manage to shift chunks of high electricity consumption such as:

- … loading the battery-electric vehicle (BEV) or the plug-in hybrid car (PHEV)

- … washing clothes

- … drying clothes in the electric dryer

to those times of the day when the electricity price is lower. I also wanted to see if that makes economic sense for me. And, after all, it is fun to play around with data and gain new insights.

As my electricity supplier, I had chosen Tibber because they were the first one I got to know and they offer a device called Pulse which can connect a digital electricity meter to their infrastructure for metering and billing purposes. Furthermore, they do have an API [1] which allows me to read out my own data; that was very important for me. I understand that meanwhile, there are several providers Tibber that have similar models and comparable features.

In my opinion, dynamic electricity prices will play an important role in the future. As we generate ever more energy from renewables (solar and wind power), there are times when a lot of electricity is generated or when we even see over-production, and there are times when less electricity will be produced (“dunkelflaute”). Dynamic prices are an excellent tool to motivate people to shift a part of their consumption pattern to times with a large offer in electricity supply (cheap price). An easy-to-understand example is washing clothes during the day in summer (rather than in the evening) when there is a large supply of solar energy; then, the price will experience a local minimum between lunch time and dinner time.

Preconditions

In order to use the approach described here, you should:

- … have access to a Linux machine or account

- … have a MySQL or MariaDB database server installed, configured, up and running

- … have a populated MySQL or MariaDB database like in our example to which you have access

- … have the package Grafana [2] installed, configured, up and running

- … have access to the data of your own electricity consumption and pricing information of your supplier or use the dataset linked below in this blog

- … have some understanding of day-ahead pricing in the electricity market [3]

- … have some basic knowledge of how to operate in a Linux environment and some basic understanding of shell scripts

Description and Usage

The Database

The base for the following visualizations is a fully populated MariaDB database with the following structure:

# Datenbank für Analysen mit Tibber

# V1.1; 2023-10-19, Gabriel Rüeck <gabriel@rueeck.de>, <gabriel@caipirinha.spdns.org>

# Delete existing databases

REVOKE ALL ON tibber.* FROM 'gabriel';

DROP DATABASE tibber;

# Create a new database

CREATE DATABASE tibber DEFAULT CHARSET utf8mb4 COLLATE utf8mb4_general_ci;

GRANT ALL ON tibber.* TO 'gabriel';

USE tibber;

SET default_storage_engine=Aria;

CREATE TABLE preise (zeitstempel DATETIME NOT NULL,\

preis DECIMAL(5,4) NOT NULL,\

niveau ENUM('VERY_CHEAP','CHEAP','NORMAL','EXPENSIVE','VERY_EXPENSIVE'));

CREATE TABLE verbrauch (zeitstempel DATETIME NOT NULL,\

energie DECIMAL(5,3) NOT NULL,\

kosten DECIMAL(5,4) NOT NULL);The section Files at the end of this blog post provides real-world sample data which you can use to populate the database and do your own calculations and graphs. The database contains two tables. The one named preise contains the day-ahead prices and some price level tag which is determined by Tibber themselves according to [1]. The second tables named verbrauch contains the electrical energy I have consumed, and the cost associated with the consumption. zeitstempel in both tables indicates the date and the hour when the respective 1-hour-block with the respective electricity price or the electricity consumption starts. Consequently, the day is divided in 24 blocks of 1 hour. Data from the tables might look like this:

MariaDB [tibber]> SELECT * FROM preise WHERE DATE(zeitstempel)='2024-03-18';

+---------------------+--------+-----------+

| zeitstempel | preis | niveau |

+---------------------+--------+-----------+

| 2024-03-18 00:00:00 | 0.2658 | NORMAL |

| 2024-03-18 01:00:00 | 0.2575 | NORMAL |

| 2024-03-18 02:00:00 | 0.2588 | NORMAL |

| 2024-03-18 03:00:00 | 0.2601 | NORMAL |

| 2024-03-18 04:00:00 | 0.2661 | NORMAL |

| 2024-03-18 05:00:00 | 0.2737 | NORMAL |

| 2024-03-18 06:00:00 | 0.2922 | NORMAL |

| 2024-03-18 07:00:00 | 0.3059 | EXPENSIVE |

| 2024-03-18 08:00:00 | 0.3019 | EXPENSIVE |

| 2024-03-18 09:00:00 | 0.2880 | NORMAL |

| 2024-03-18 10:00:00 | 0.2761 | NORMAL |

| 2024-03-18 11:00:00 | 0.2688 | NORMAL |

| 2024-03-18 12:00:00 | 0.2700 | NORMAL |

| 2024-03-18 13:00:00 | 0.2707 | NORMAL |

| 2024-03-18 14:00:00 | 0.2715 | NORMAL |

| 2024-03-18 15:00:00 | 0.2768 | NORMAL |

| 2024-03-18 16:00:00 | 0.2834 | NORMAL |

| 2024-03-18 17:00:00 | 0.3176 | EXPENSIVE |

| 2024-03-18 18:00:00 | 0.3629 | EXPENSIVE |

| 2024-03-18 19:00:00 | 0.3400 | EXPENSIVE |

| 2024-03-18 20:00:00 | 0.3129 | EXPENSIVE |

| 2024-03-18 21:00:00 | 0.2861 | NORMAL |

| 2024-03-18 22:00:00 | 0.2827 | NORMAL |

| 2024-03-18 23:00:00 | 0.2781 | NORMAL |

+---------------------+--------+-----------+

24 rows in set (0,002 sec)

MariaDB [tibber]> SELECT * FROM verbrauch WHERE DATE(zeitstempel)='2024-03-18';

+---------------------+---------+--------+

| zeitstempel | energie | kosten |

+---------------------+---------+--------+

| 2024-03-18 00:00:00 | 0.554 | 0.1472 |

| 2024-03-18 01:00:00 | 0.280 | 0.0721 |

| 2024-03-18 02:00:00 | 0.312 | 0.0808 |

| 2024-03-18 03:00:00 | 0.307 | 0.0799 |

| 2024-03-18 04:00:00 | 0.282 | 0.0750 |

| 2024-03-18 05:00:00 | 0.315 | 0.0862 |

| 2024-03-18 06:00:00 | 0.377 | 0.1102 |

| 2024-03-18 07:00:00 | 0.368 | 0.1126 |

| 2024-03-18 08:00:00 | 0.275 | 0.0830 |

| 2024-03-18 09:00:00 | 0.793 | 0.2284 |

| 2024-03-18 10:00:00 | 1.041 | 0.2875 |

| 2024-03-18 11:00:00 | 0.453 | 0.1217 |

| 2024-03-18 12:00:00 | 0.362 | 0.0977 |

| 2024-03-18 13:00:00 | 0.005 | 0.0014 |

| 2024-03-18 14:00:00 | 0.027 | 0.0073 |

| 2024-03-18 15:00:00 | 0.144 | 0.0399 |

| 2024-03-18 16:00:00 | 0.248 | 0.0703 |

| 2024-03-18 17:00:00 | 0.363 | 0.1153 |

| 2024-03-18 18:00:00 | 0.381 | 0.1382 |

| 2024-03-18 19:00:00 | 0.360 | 0.1224 |

| 2024-03-18 20:00:00 | 0.354 | 0.1108 |

| 2024-03-18 21:00:00 | 0.382 | 0.1093 |

| 2024-03-18 22:00:00 | 0.373 | 0.1055 |

| 2024-03-18 23:00:00 | 0.417 | 0.1159 |

+---------------------+---------+--------+

24 rows in set (0,001 sec)

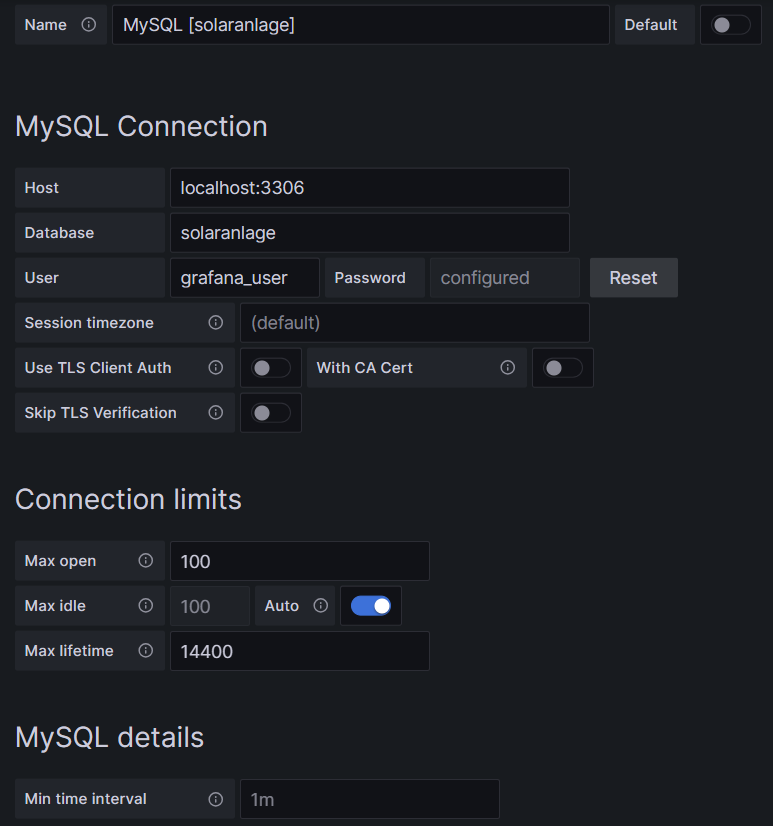

Connecting Grafana to the Database

Now we shall visualize the data in Grafana. Grafana is powerful and mighty visualization tool with which you can create state-of-the-art dashboards and professional visualizations. I must really laude the team behind Grafana for making such a powerful tool free for personal and other usage (for details to their licenses und usage models, see Licensing | Grafana Labs).

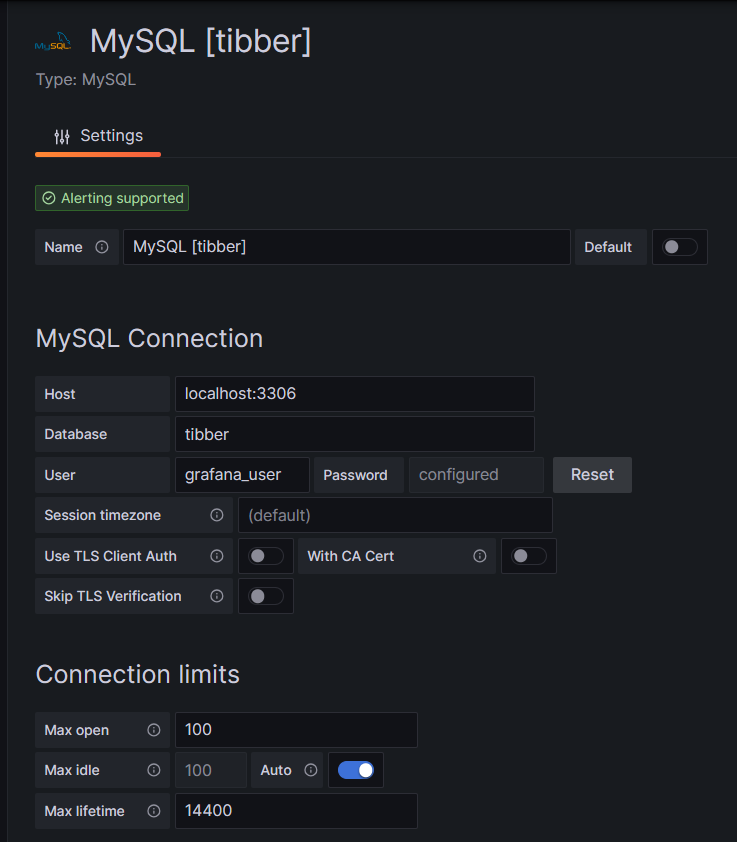

Before you can use data from a MySQL database in Grafana, you have to set up MySQL as a data source in Connections. Remember that MySQL is one of many possible data sources for Grafana and so, you have to walk through the jungle of offered data sources and find the MySQL connection and set up your data source accordingly. On my server, both Grafana and MariaDB run on the same machine, so there is no need for encryption, etc. My setup simply looks like this:

One step where I always stumble again is that in the entry mask for the connection setup, localhost:3306 is proposed in grey color as Host, but unless you type that in, too, Grafana will actually not use localhost:3306. So be sure to physically type that in.

Populating the Database

Tibber customers who have created an API token for themselves [4] can populate the database with the following bash script; in this script, you need to replace _API_TOKEN with your personal API token ( [4]) and _HOME_ID with your personal Home ID ([1]). The script further assumes that the user gabriel can login to the MySQL database without further authentication; this can be achieved by writing the MySQL login information in the file ~/.my.cnf.

#!/bin/bash

#

# Dieses Skript liest Daten für die Tibber-Datenbank und speichert das Ergebnis in einer MySQL-Datenbank ab.

# Das Skript wird einmal pro Tag aufgerufen.

#

# V1.3; 2024-03-24, Gabriel Rüeck <gabriel@rueeck.de>, <gabriel@caipirinha.spdns.org>

#

# CONSTANTS

declare -r MYSQL_DATABASE='tibber'

declare -r MYSQL_SERVER='localhost'

declare -r MYSQL_USER='gabriel'

declare -r TIBBER_API_TOKEN='_API_TOKEN'

declare -r TIBBER_API_URL='https://api.tibber.com/v1-beta/gql'

declare -r TIBBER_HOME_ID='_HOME_ID'

# VARIABLES

# PROGRAM

# Read price information for tomorrow

curl -s -S -H "Authorization: Bearer ${TIBBER_API_TOKEN}" -H "Content-Type: application/json" -X POST -d '{ "query": "{viewer {home (id: \"'"${TIBBER_HOME_ID}"'\") {currentSubscription {priceInfo {tomorrow {total startsAt level }}}}}}" }' "${TIBBER_API_URL}" | jq -r '.data.viewer.home.currentSubscription.priceInfo.tomorrow[] | .total, .startsAt, .level' | while read cost; do

read LINE

read level

timestamp=$(echo "${LINE%%+*}" | tr 'T' ' ')

# Determine timezone offset and store the UTC datetime in the database

offset="${LINE:23}"

mysql --default-character-set=utf8mb4 -B -N -r -D "${MYSQL_DATABASE}" -h ${MYSQL_SERVER} -u ${MYSQL_USER} -e "INSERT INTO preise (zeitstempel,preis,niveau) VALUES (DATE_SUB(\"${timestamp}\",INTERVAL \"${offset}\" HOUR_MINUTE),${cost},\"${level}\");"

done

# Read consumption information from the past 24 hours

curl -s -S -H "Authorization: Bearer ${TIBBER_API_TOKEN}" -H "Content-Type: application/json" -X POST -d '{ "query": "{viewer {home (id: \"'"${TIBBER_HOME_ID}"'\") {consumption (resolution: HOURLY, last: 24) {nodes {from to cost consumption}}}}}" }' "${TIBBER_API_URL}" | jq -r '.data.viewer.home.consumption.nodes[] | .from, .consumption, .cost' | while read LINE; do

read consumption

read cost

timestamp=$(echo "${LINE%%+*}" | tr 'T' ' ')

# Determine timezone offset and store the UTC datetime in the database

offset="${LINE:23}"

mysql --default-character-set=utf8mb4 -B -N -r -D "${MYSQL_DATABASE}" -h ${MYSQL_SERVER} -u ${MYSQL_USER} -e "INSERT INTO verbrauch (zeitstempel,energie,kosten) VALUES (DATE_SUB(\"${timestamp}\",INTERVAL \"${offset}\" HOUR_MINUTE),${consumption},${cost});"

doneIn my case, I call this script with cron once per day, at 14:45 as Tibber releases the price information for the subsequent day only at 14:00. The script furthermore stores UTC timestamps in the database. Grafana will adjust them to the local time for graphs of the type Time series.

Easy Visualizations

Price level

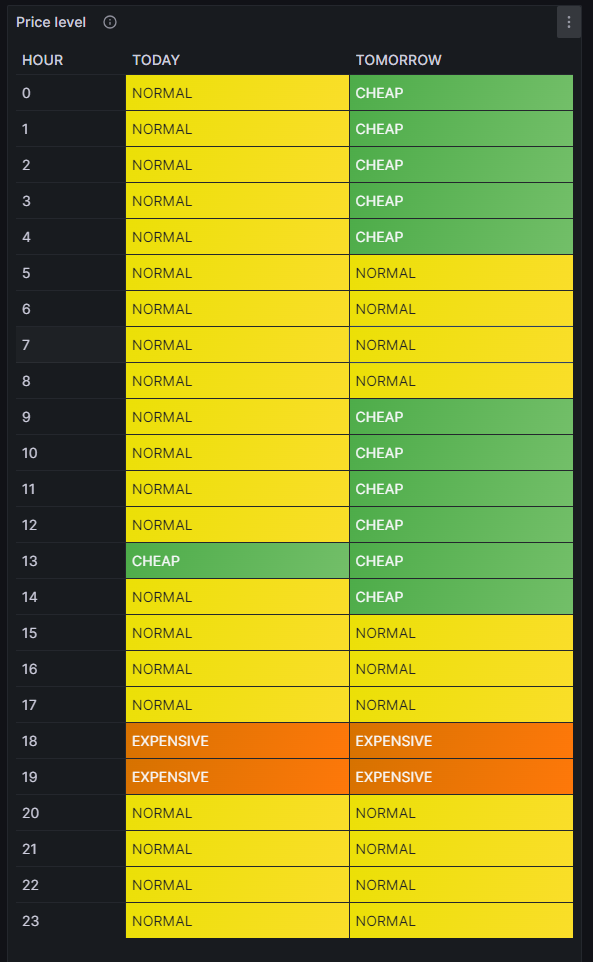

One of my first visualizations was a table that shows the price level tags for today and tomorrow. The idea behind is that I would look at the table once per day during breakfast and immediately identify the sweet spots on where I could charge the car, turn on the washing machine, etc.

For this table, we use the built-in Grafana visualization Table. We select the according database, and our MySQL query is:

SELECT IF(DATE(DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR))=CURDATE(),'TODAY','TOMORROW') AS Tag, HOUR(DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR)) AS Stunde, niveau

FROM preise

WHERE DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR)>=CURDATE()

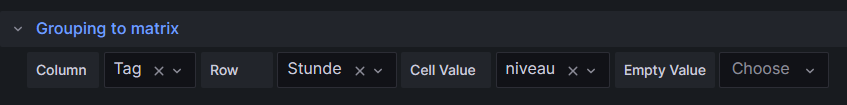

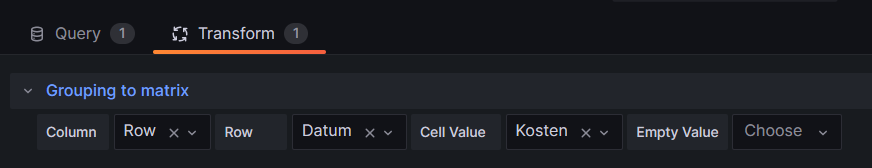

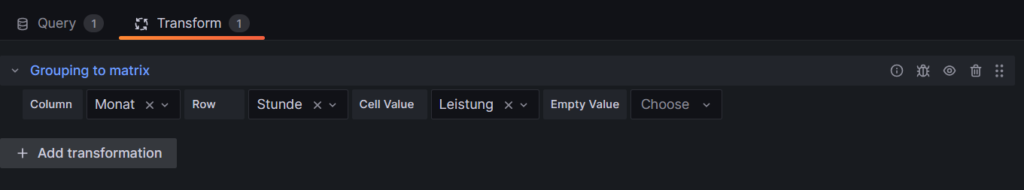

Group BY Tag, Stunde;The query above lists one price level per line. However, in order to get the nice visualization shown above, we need to transpose the table with respect to the months, and therefore, we must select the built-in transformation Grouping to Matrix and enter our column names according to the image below:

Now, in order to get a beautiful visualization, we need to adjust some of the panel options, and those are:

- Cell options → Cell type: Set to Auto

- Standard options → Color scheme: Set to Single color

and we need to define some Value mappings:

- NORMAL → Select yellow color

- EXPENSIVE → Select orange color

- VERY_EXPENSIVE → Select red color

- CHEAP → Select green color

- VERY_CHEAP → Select blue color

and we need to define some Overrides:

- Override 1 → Fields with name: Select TODAY

- Override 1 → Cell options → Cell type: Set to Colored background

- Override 2 → Fields with name: Select TOMORROW

- Override 2 → Cell options → Cell type: Set to Colored background

- Override 3 → Fields with type: Select Stunde\Tag

- Override 3 → Column width → Set to 110

- Override 3 → Standard options → Display name: Type HOUR (That is only necessary because I initially did my query with German column names)

In the MySQL query above, you can find the sequence

DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR)which actually transforms the UTC timestamp into the timestamp of the local time of the machine where the MySQL server resides. In my case, this is the same machine that I use for Grafana. The sub-sequence

HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP()))gives – in my case – back a 2 when we are in summer time as then we are at UTC+2h, and 1 when we are in winter time as then we are at UTC+1h. This sequence was necessary for me as the Grafana visualization Table does not do an automatic time conversion to the local time.

Electricity price (per kWh) and grid consumption

In the next visualization, we will look at a timeline of the electricity price per hour and our consumption pattern. This will look like this graph:

For this graph, we use the built-in Grafana visualization Time series. We select the according database, and we define two MySQL queries. The first one is named Price:

SELECT zeitstempel,

preis AS 'price',

AVG(preis) OVER (ORDER BY zeitstempel ROWS BETWEEN 47 PRECEDING AND CURRENT ROW) as 'rolling 2-days average price'

FROM preise;The first query has the peculiarity that we do not only retrieve the price for each 1-hour block, but that we also calculate a rolling 2-days average over the extracted data. The second query is named Consumption and retrieves our energy consumption:

SELECT zeitstempel, energie AS 'grid consumption' FROM verbrauch;Again, we need to adjust some of the panel options, and those are:

- Standard options → Unit: Select (Euro) €

- Standard options → Min: Set to 0

- Standard options → Decimals: Set to 2

and we need to define some Overrides:

- Override 1 → Fields with name: Select grid consumption

- Override 1 → Axis → Placement: Select Right

- Override 1 → Standard options → Unit: Select Kilowatt-hour (kWh)

- Override 1 → Standard options → Decimals: Set to 1

In this graph, we can already get an indication on how well we time our energy consumption. Ideally, the peaks (local maximums) in energy consumption (blue line) should coincide with the local minimums of the electricity price (green line). As you can see with the two peaks of the blue line (charging the BEV), I did not always match this sweet spot. There might be good reasons for consuming electricity also at hours of higher prices, and some examples are:

- You have to drive away at a certain hour, and you need to charge the BEV now.

- You have solar generation at certain times of the day which you intend to use and therefore consume electricity (like for charging a BEV) when the sun shines. While you then might not consume at the cheapest hour, you might ultimately make good use of the additional solar energy.

- You want to watch TV in the evening when the electricity price is typically high.

This graph visualizes the data according to the timezone of the dashboard, so there is no need to add an offset to the UTC timestamps in the database.

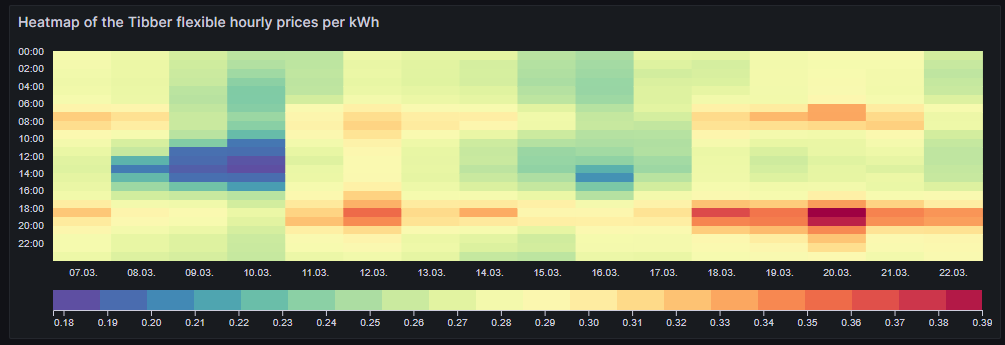

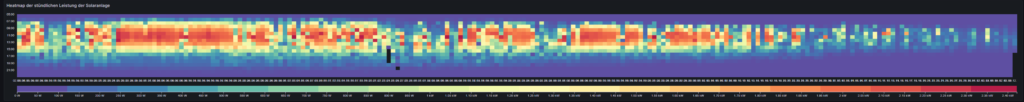

Heatmap of the Tibber flexible hourly prices per kWh

In the next visualization, we look at a heatmap of the hourly prices and therefore, we use the plug-in Grafana visualization Hourly heatmap.

The query is very simple:

SELECT UNIX_TIMESTAMP(DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR)) AS time, preis FROM preise;However, we need to adjust some of the panel options, and those are:

- Dimensions → Time: Select time

- Dimensions → Value: Select price

- Hourly heatmap → From: Set to 00:00

- Hourly heatmap → To: Set to 00:00

- Hourly heatmap → Group by: Select 60 minutes

- Hourly heatmap → Calculation: Select Sum

- Hourly heatmap → Color palette: Select Spectral

- Hourly heatmap → Invert color palette → Activate

- Legend → Show legend → Activate

- Legend → Gradient quality: Select Low

- Standard options → Unit: Select (Euro) €

- Standard options → Decimals: Set to 2

- Standard options → Color scheme: Select From thresholds (by value)

With the heatmap, we have an easy-to-understand visualization on when the prices are high and on when they are low and on whether there is a regular pattern that we can observe. In this case, we can identify that in the evening (at dinner or TV time), the electricity price often seems to be high. Hence, this is not a good time to switch on powerful electric consumers or to start charging a BEV.

Similar to the Grafana visualization Table, you can find the sequence

DATE_ADD(zeitstempel,INTERVAL HOUR(TIMEDIFF(SYSDATE(),UTC_TIMESTAMP())) HOUR)because the Grafana visualization Hourly heatmap also does not do an automatic time conversion to the local time.

Complex Visualizations

Actual cost versus minimal cost, maximal cost, average cost per day

This visualization shows the daily electricity cost that I have incurred (blue line) and the cost I would have incurred if I had purchased the whole amount of electricity on the respective day:

- … during the cheapest hour on that day (green line)

- … during the most expensive hour on that day (red line)

- … at an average price (average of all hours) on that day (yellow line)

The closer the blue line is to the green line, the better I have shifted my consumption pattern to the hours of cheap electricity. In real life, one will always have to purchase electricity also in hours of expensive electricity unless one switches off all devices in a household at certain hours. So, in real life, the blue line will never be the same as the green line. A blue line between the yellow and the green line already indicates that one does well. The graph also shows that my consumption varies substantially from day to day. The graph uses the built-in Grafana visualization Time series. The last data point must not be considered as the last day is only calculated until 14:00 local time.

For this graph, we join the tables preise and verbrauch in a MySQL query and group the result by day:

SELECT DATE(preise.zeitstempel) AS 'date',

MIN(preise.preis)*SUM(verbrauch.energie) AS 'minimal cost',

MAX(preise.preis)*SUM(verbrauch.energie) AS 'maximal cost',

AVG(preise.preis)*SUM(verbrauch.energie) AS 'average cost',

SUM(verbrauch.kosten) AS 'actual cost'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

GROUP BY DATE(preise.zeitstempel);The following panel options are recommended:

- Standard options → Unit: Select (Euro) €

- Standard options → Decimals: Set to 2

and the following Overrides are recommended:

- Override 1 → Fields with name: Select minimal cost

- Override 1 → Standard options → Color scheme: Select Single color, then select green

- Override 2 → Fields with name: Select maximal cost

- Override 2 → Standard options → Color scheme: Select Single color, then select red

- Override 3 → Fields with name: Select actual cost

- Override 3 → Standard options → Color scheme: Select Single color, then select blue

- Override 4 → Fields with name: Select average cost

- Override 4 → Standard options → Color scheme: Select Single color, then select yellow

Important: This graph adds up the data from a UTC day (not the local calendar day) and visualizes the data points according to the timezone of the dashboard.

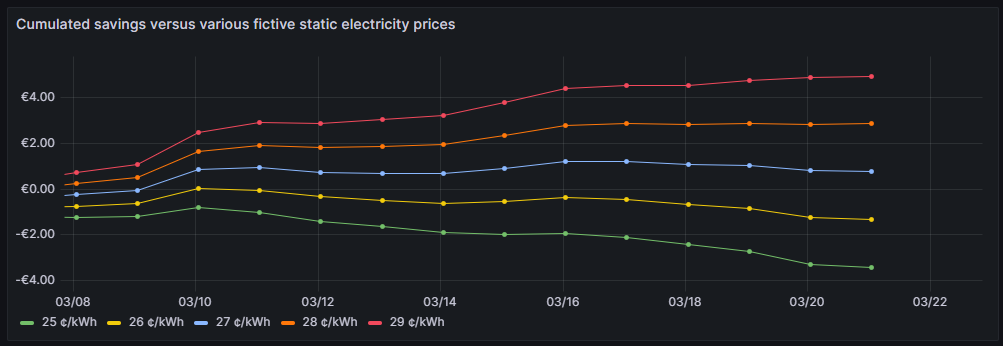

Cumulated savings versus various fictive static electricity prices

This visualization shows us in a cumulative manner how much money I have saved using dynamic pricing versus fictive electricity contracts with different static prices per kWh (traditional contracts). The graph uses the built-in Grafana visualization Time series. The last data point must not be considered as the last day is only calculated until 14:00 local time.

One can see that for traditional prices below 27 ¢/kWh, I would not have saved any money so far. 27 ¢/kWh is the price that I could get in a traditional electricity contract at the place where I live. However, it is too early yet to draw final conclusions. I intend to observe how the graphs advance when we get into summer as I expect that during summer, I will have more times at cheap electricity whereas in the subsequent winter, I will probably have more times at higher prices. The graph is done with this query:

SELECT DATE(preise.zeitstempel) AS 'Datum',

SUM(0.25*SUM(verbrauch.energie)-SUM(verbrauch.kosten)) OVER (ORDER BY DATE(preise.zeitstempel)) as '25 ¢/kWh',

SUM(0.26*SUM(verbrauch.energie)-SUM(verbrauch.kosten)) OVER (ORDER BY DATE(preise.zeitstempel)) as '26 ¢/kWh',

SUM(0.27*SUM(verbrauch.energie)-SUM(verbrauch.kosten)) OVER (ORDER BY DATE(preise.zeitstempel)) as '27 ¢/kWh',

SUM(0.28*SUM(verbrauch.energie)-SUM(verbrauch.kosten)) OVER (ORDER BY DATE(preise.zeitstempel)) as '28 ¢/kWh',

SUM(0.29*SUM(verbrauch.energie)-SUM(verbrauch.kosten)) OVER (ORDER BY DATE(preise.zeitstempel)) as '29 ¢/kWh'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

GROUP BY DATE(preise.zeitstempel);The following panel options are recommended:

- Standard options → Unit: Select (Euro) €

- Standard options → Decimals: Set to 2

Important: This graph adds up the data from a UTC day (not the local calendar day) and visualizes the data points according to the timezone of the dashboard.

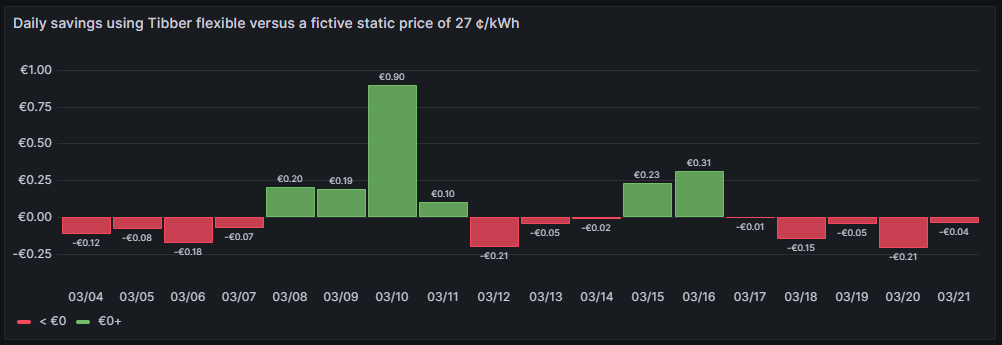

Daily savings using Tibber flexible versus a fictive static price of 27 ¢/kWh

This next visualization shows how much money I have saved (green) or lost (red) using dynamic pricing versus a fictive electricity contract with a price of 27 ¢/kWh that – as mentioned before – I could get in a traditional electricity contract at the place where I live. The graph uses the built-in Grafana visualization Bar chart. The last data point must not be considered as the last day is only calculated until 14:00 local time.

One can see that due to the nature of dynamic prices, I do not save money every day. It has been quite a mixed result so far. The graph is done with this query:

SELECT DATE_FORMAT(preise.zeitstempel,'%m/%d') AS 'Datum',

0.27*SUM(verbrauch.energie)-SUM(verbrauch.kosten) AS 'Daily cost vs. average'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

GROUP BY DATE(preise.zeitstempel);The following panel options are recommended:

- Bar chart → Color by field: Select Daily cost vs. average

- Standard options → Unit: Select (Euro) €

- Standard options → Decimals: Set to 2

- Standard options → Color scheme: Select From thresholds (by value)

- Thresholds → Enter 0, then select green

- Thresholds → Base: Select red

Important: This graph adds up the data from a UTC day (not the local calendar day).

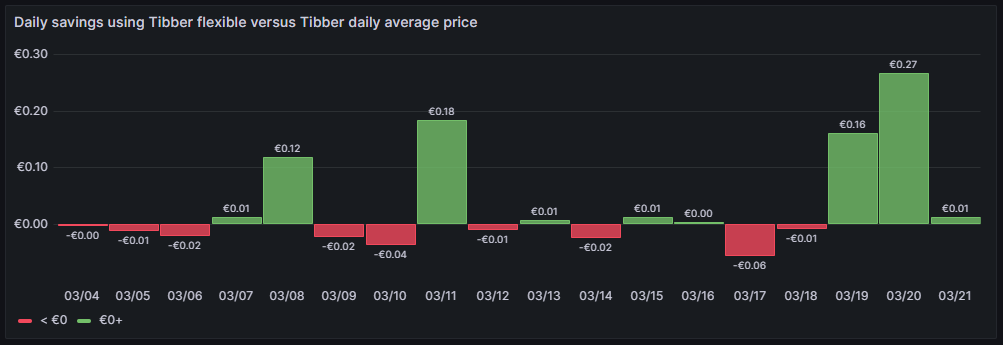

Daily savings using Tibber flexible versus Tibber daily average price

A similar visualization shows how much money I have saved (green) or lost (red) using dynamic pricing versus the average price per day, calculated on all dynamic prices of that day. This graph shows me if I am successful in making use of dynamic pricing (green) or not (red). The graph uses the built-in Grafana visualization Bar chart. The last data point must not be considered as the last day is only calculated until 14:00 local time.

So far, it seems that while I do have “good” and “bad” days, on on the good days, I seem to save more money compared to the Tibber average price. The large green bars are those where I charge the BEV, and before I charge the BEV, I really carefully consider the electricity price of today and tomorrow. This graph is done with this query:

SELECT DATE_FORMAT(preise.zeitstempel,'%m/%d') AS 'Datum',

AVG(preise.preis)*SUM(verbrauch.energie)-SUM(verbrauch.kosten) AS 'Daily cost vs. average'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

GROUP BY DATE(preise.zeitstempel);The following panel options are recommended:

- Bar chart → Color by field: Select Daily cost vs. average

- Standard options → Unit: Select (Euro) €

- Standard options → Decimals: Set to 2

- Standard options → Color scheme: Select From thresholds (by value)

- Thresholds → Enter 0, then select green

- Thresholds → Base: Select red

Important: This graph adds up the data from a UTC day (not the local calendar day).

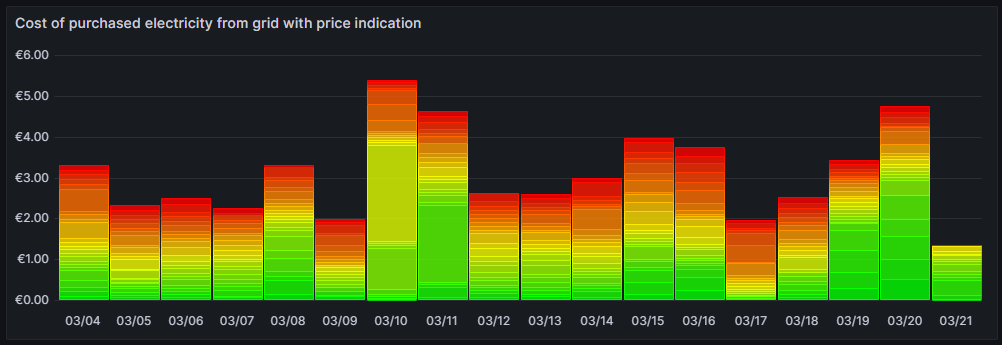

Cost of purchased electricity from grid with price indication

This is one of my favorite graphs as it contains a lot of information in one visualization. It shows the electricity cost per day, but also, how this cost is composed. Each day is a concatenation of 24 rectangles. The 24 rectangles represent the 24 hours of the day. Their color is different, ranging in shades from green to red. The green only rectangle (rgb: 0, 255, 0) is the cost that incurred at the cheapest hour of the day. The red only rectangle (rgb: 255, 0, 0) is the cost that incurred at the most expensive hour of the day. The shades in between ranging from green to red represent an ordered list of the hours from cheap to expensive. Large rectangles mean that a large part of the daily cost can be attributed to a consumption in that hour. Essentially, this means that the more green shades a day has, the more cost has incurred at hours of cheap electricity and the better I have used the dynamic pricing for me. The more red shades a day has, the more cost has incurred at hours of expensive electricity. A day with more red shades is not automatically a “bad” day. There might be reasons for a consumption at expensive hours, and some which are true in my case, are:

- I might cover the electricity demand at cheap hours by my solar panels so that during these hours, I do not have any grid consumption at all.

- I might deliberately decide to consume electricity at expensive hours during daylight because my solar panels cover a large part of the consumption at my home, maybe, because I charge the car and 50% of the electric energy comes from the solar panels anyway and I just buy the remaining 50% from the grid. While the grid consumption might be expensive, I anyway get 50% “for free” from the solar panels.

- I might deliberately decide to consume electricity at expensive hours because the ambient temperature outside the house is moderate or warm and I can heat the house with the air conditioner at high efficiency while I can switch off the central heating which runs by natural gas. In that case, even at high prices, I expect to have a better outcome overall because I might be able to switch off the central heating completely.

The graph uses the built-in Grafana visualization Bar chart. The last data point must not be considered as the last day is only calculated until 14:00 local time.

This graph uses this query:

SELECT DATE_FORMAT(preise.zeitstempel,'%m/%d') AS 'Datum',

ROW_NUMBER() OVER (PARTITION BY DATE(preise.zeitstempel) ORDER BY preise.preis ASC) AS 'Row',

verbrauch.kosten AS 'Kosten'

FROM preise JOIN verbrauch ON verbrauch.zeitstempel=preise.zeitstempel

WHERE DATE(preise.zeitstempel)>'2024-03-03'

ORDER BY DATE(preise.zeitstempel) ASC, preise.preis ASC;and the built-in transformation Grouping to Matrix:

The following panel options must be used:

- Standard options → Unit: Select (Euro) €

- Standard options → Min: Set to 0

- Standard options → Decimals: Set to 2

Additionally, we need to define exactly 24 Overrides:

- Override 1 → Fields with name: Select 1

- Override 1 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(0, 255, 0)

- Override 2 → Fields with name: Select 2

- Override 2 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(22, 255, 0)

- Override 3 → Fields with name: Select 3

- Override 3 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(44, 255, 0)

- Override 4 → Fields with name: Select 4

- Override 4 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(66, 255, 0)

- Override 5 → Fields with name: Select 5

- Override 5 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(89, 255, 0)

- Override 6 → Fields with name: Select 6

- Override 6 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(111, 255, 0)

- Override 7 → Fields with name: Select 7

- Override 7 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(133, 255, 0)

- Override 8 → Fields with name: Select 8

- Override 8 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(155, 255, 0)

- Override 9 → Fields with name: Select 9

- Override 9 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(177, 255, 0)

- Override 10 → Fields with name: Select 10

- Override 10 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(199, 255, 0)

- Override 11 → Fields with name: Select 11

- Override 11 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(222, 255, 0)

- Override 12 → Fields with name: Select 12

- Override 12 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(244, 255, 0)

- Override 13 → Fields with name: Select 13

- Override 13 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 244, 0)

- Override 14 → Fields with name: Select 14

- Override 14 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 222, 0)

- Override 15 → Fields with name: Select 15

- Override 15 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 200, 0)

- Override 16 → Fields with name: Select 16

- Override 16 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 177, 0)

- Override 17 → Fields with name: Select 17

- Override 17 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 155, 0)

- Override 18 → Fields with name: Select 18

- Override 18 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 133, 0)

- Override 19 → Fields with name: Select 19

- Override 19 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 110, 0)

- Override 20 → Fields with name: Select 20

- Override 20 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 89, 0)

- Override 21 → Fields with name: Select 21

- Override 21 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 66, 0)

- Override 22 → Fields with name: Select 22

- Override 22 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 44, 0)

- Override 23 → Fields with name: Select 23

- Override 23 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 22, 0)

- Override 24 → Fields with name: Select 24

- Override 24 → Standard options → Color scheme: Select Single color, then select Custom, then rgb(255, 0, 0)

Important: This graph adds up the data from a UTC day (not the local calendar day).

Conclusion

Even with a relatively simple dataset, we can create insightful visualizations with Grafana that can help us to interpret complex relationships. In my case, the visualizations shall help me to answer the questions:

- Do I fare well with dynamic pricing as compared to a traditional electricity contract with static prices?

- Am I able to shift consumption pattern of large energy chunks efficiently to those hours where electricity is cheaper?

As I mentioned, there might be reasons to buy electricity also at expensive hours. The fact that I have support of solar panels during the day might tilt the decision to consume electric energy aways from the cheapest hours to hours where a large part of that consumption is anyway covered by the solar panels. Or I might decide to heat with the air conditioners because the temperature difference between outside and inside of the house is small and the air conditioners can run with a high efficiency. In that case, I trade in natural gas consumption versus electricity consumption.

Outlook

It would be interesting to consider the energy that the solar panels generate “for free” (not really for free, but as they have been installed, they are already “sunk cost”) and visualize the resulting electricity cost from the mix of solar energy with energy consumed from the grid.

Likewise, it might be interesting as well as challenging to derive a good model that uses a battery and dynamic electricity prices as well as the energy from the solar panels to minimize cost of the energy consumption from the grid. How large should this battery be? When should it be charged from the grid and when should it return its energy to the consumers in the house?

Files

The following dataset was used for the graphs:

Sources

- [1] = Tibber Developer

- [2] = Download Grafana | Grafana Labs

- [3] = day-ahead market – an overview

- [4] = Tibber Developer: Communicating with the API

Disclaimer

- Program codes and examples are for demonstration purposes only.

- Program codes are not recommended be used in production environments without further enhancements in terms of speed, failure-tolerance or cyber-security.

- While program codes have been tested, they might still contain errors.

- I am in neither affiliated nor linked to companies named in this blog post.

Getting around Carrier-grade NAT

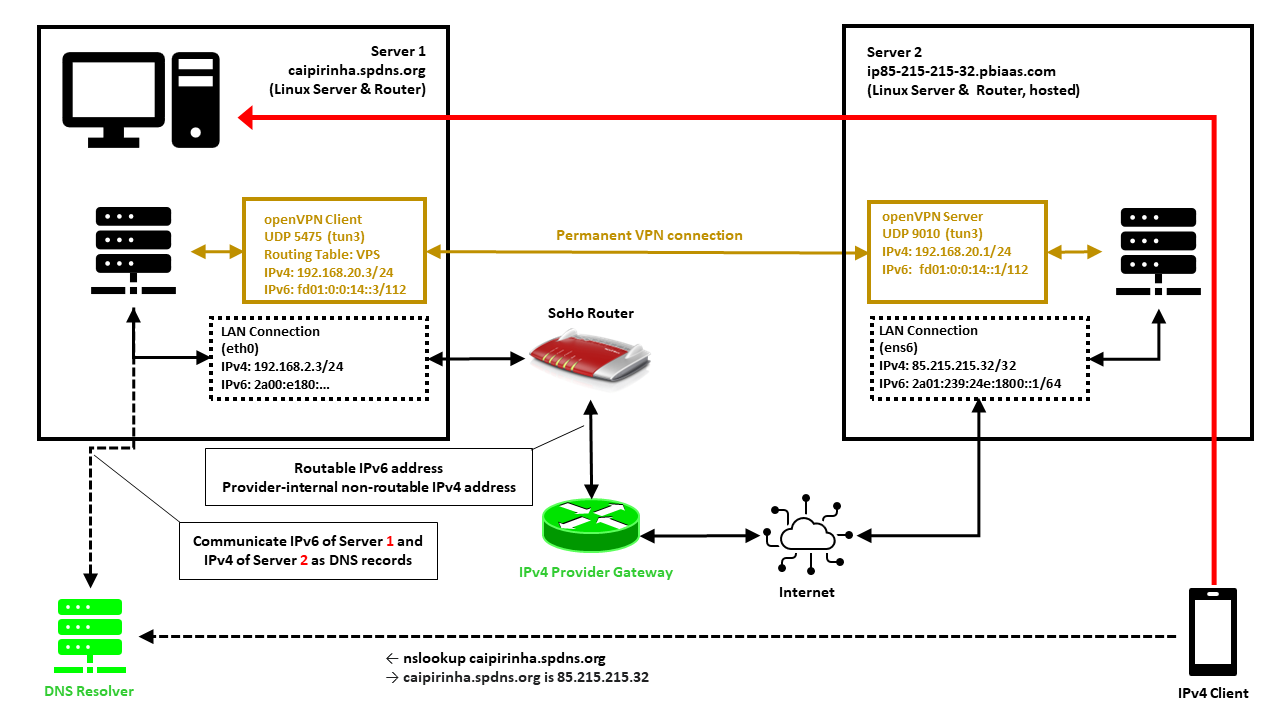

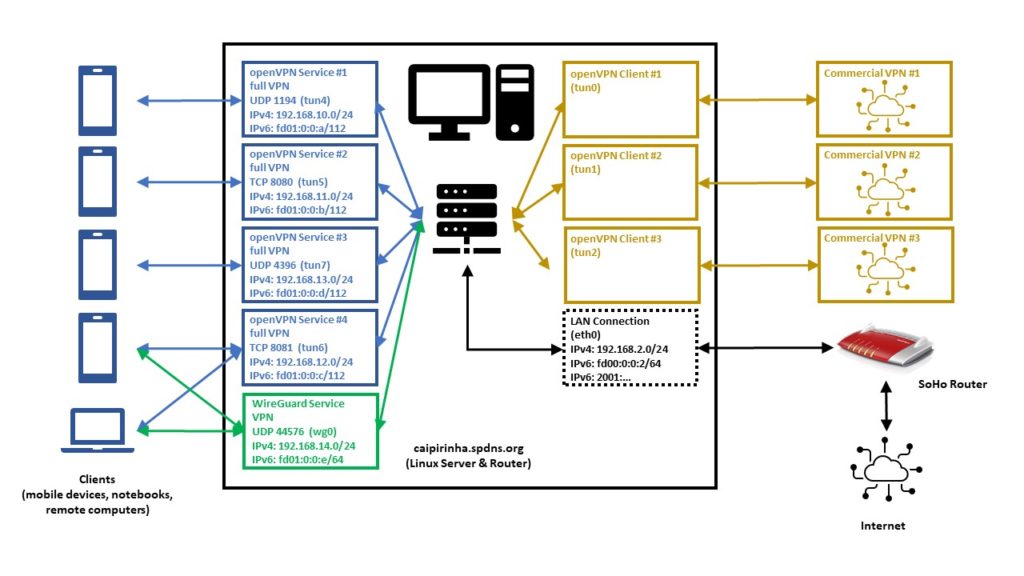

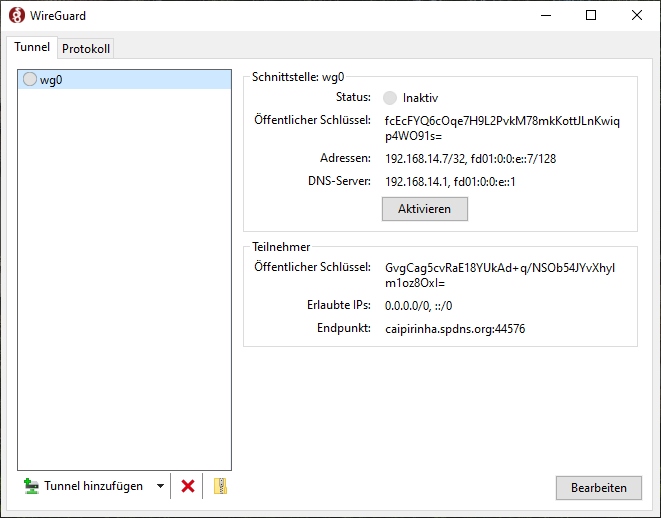

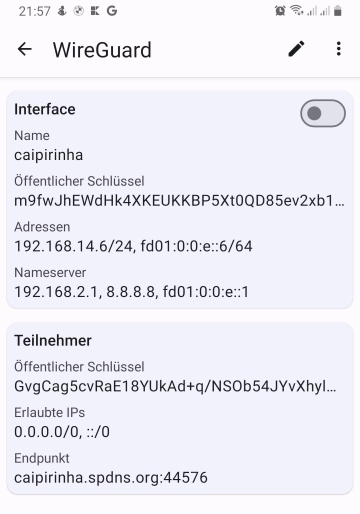

Executive Summary

This blog post explains how a small internet-based shared server (“vServer”, “VPS”) can be used to tunnel connections from the internet back to a SoHo-based router that does not have a publicly routable IPv4 internet address, maybe because the internet service provider (ISP) uses Carrier-grade NAT (CG-NAT) and only offers “real” IPv4 addresses at cost. As internet-based shared servers can be rented for small fees, the approach described below is a viable concept to overcome the limitations of CG-NAT connections which might only allow outgoing connections for IPv4 or even for both IPv4 and IPv6. This concept can even be used if the SoHo server is connected via the mobile network to the internet-based shared server.

Background

The implementation proved useful for me when I switched from my DSL ISP who happily had provided me with “real” (routable) IPv4 and IPv6 addresses to a new fiber-optics ISP that provides IPv6, but that uses CG-NAT on IPv4 so that no incoming IPv4 connections are possible from the internet. As I feared that my server at home would only be accessible from the internet via IPv6, I had to develop this counterstrategy.

Preconditions

In order to use the approach described here, you should:

- … have access to a Linux machine which is already properly configured for dual stack on its principal network interface (e.g., eth0)

- … additionally have access to a cloud-based Linux server which is already properly configured for dual stack on its principal network interface

- … have access to a DNS resolver where you can enter an IPv4 and an IPv6 addresses for your SoHo server so that your domain resolves properly

- … have the package openvpn installed on both machines (preferably from a repository of your Linux distribution)

- … know how to create client and server certificates for openvpn [1]

- … have knowledge of routing concepts, networks, some understanding of shell scripts and configuration files

- … know related system commands like sysctl

- … familiarize yourself with [2], [3], [4], [5]

Description and Usage

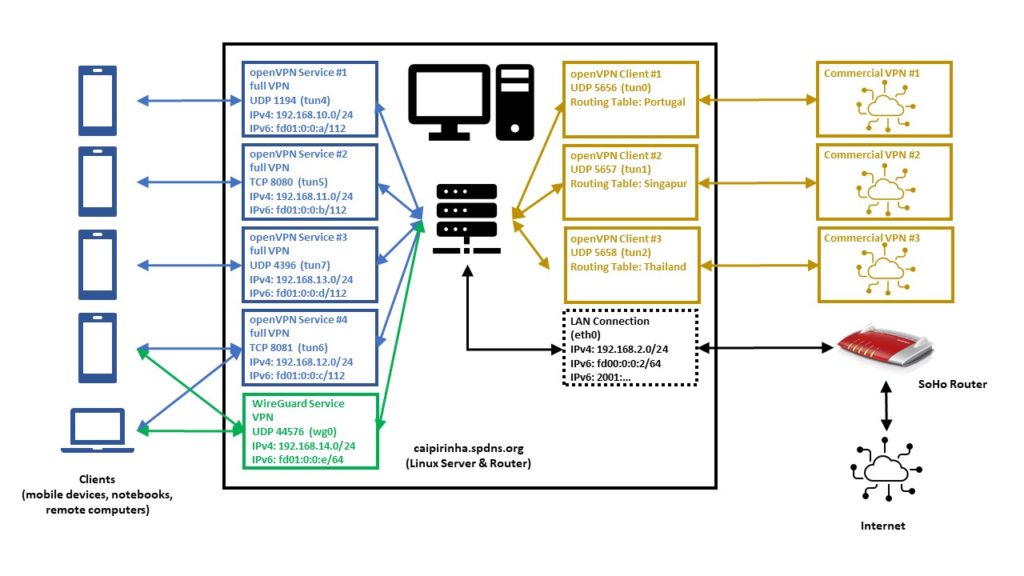

In this setup, we have a full-blown SoHo server (Server 1) which is hosting numerous services that we want to offer to the world. However, while the provider allocates an IPv6 /64 subnet, he does not offer an IPv4 address that would be reachable from the internet. Rather than that, he employs Carrier-grade NAT (CG-NAT) for IPv4. This is a typical setup for fiber-optics or cable providers or for mobile network providers. In some countries (which came late to the internet), IPv4 addresses are scarce in general, and so you might experience CG-NAT for all private internet connections.

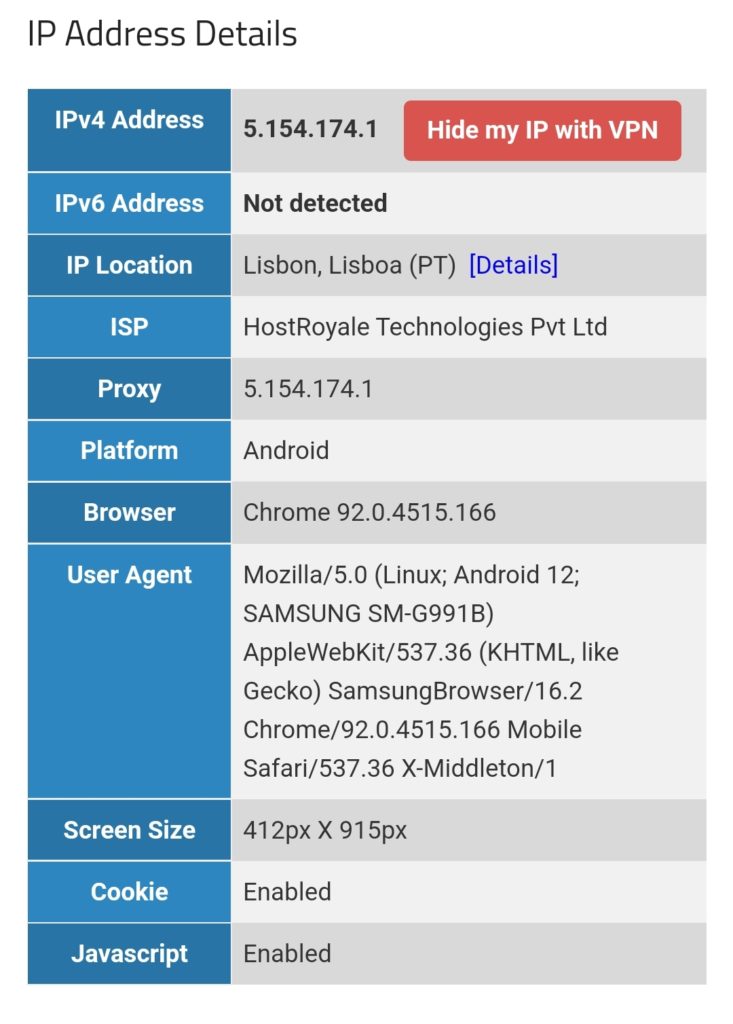

This is where Server 2 comes into play. Server 2 is a hosted shared server, it just needs a good internet connection and a fixed IPv4 address, but it does not need a lot of computational power. It will only be used to forward traffic to Server 1. In my case, I rented a small “VPS” server from Ionos as I personally found their offer compelling [6], but there are alternatives. My VPS has a dual-stack and a fixed IPv4 address and a fixed IPv6 /64 subnet allocated. The IPv4 address of the VPS is 85.215.215.32, and we will use this IPv4 address as entry address for our SoHo server (Server 1) caipirinha.spdns.org.

A new Routing Table

We want to separate the traffic that we receive and send out in return via the VPN network (192.168.20.0/24) from the regular traffic that enters and leaves the server via the network 192.168.2.0/24. Therefore, we set up a new routing table as described in [7] and name it “VPS”. In order to access it via its name, we modify /etc/iproute2/rt_tables:

#

# reserved values

#

255 local

254 main

253 default

0 unspec

#

# local

#

#1 inr.ruhep

...

201 VPS

...Setting up a permanent VPN

First, we need to set up a permanent VPN connection from Server 1 to Server 2. Server 1 will be the VPN client, and Server 2 will be the VPN server. I chose this direction because in that approach, Server 1 may even be connected to the internet via a mobile only connection CG-NAT both on IPv4 and IPv6. In my approach, I use the network 192.168.20.0/24 for the VPN connection; Server 1 gets the address 192.168.20.3 and Server 2 gets the address 192.168.20.1.

Server 2: The VPN Server

On Server 2, we set up a VPN server listening on port 9010 (UDP), using dev tun3. The configuration file is shown below. In my case, Server 2 is an Ubuntu-based server, and so it is recommended to adjust the settings in the configuration file /etc/default/openvpn which governs the behavior of openvpn connections on Ubuntu. I modified this configuration file so that only one openvpn service is started. This is done via the configuration option

AUTOSTART="server-9010"For the configuration of the server side, I meanwhile include the CA certificate, the server certificate and the private key in one configuration file. I find that more convenient, but it certainly may have disadvantages, The key message is that it is possible to do so. My own certificates and private keys have been substituted by “…” here, of course.

# Konfigurationsdatei für den openVPN-Server auf IONOS VPS (UDP:9010)

client-config-dir /etc/openvpn/server/conf-9010

crl-verify /etc/openvpn/server/crl.pem

dev tun3

dh /etc/openvpn/server/dh.pem

hand-window 90

ifconfig 192.168.20.1 255.255.255.0

ifconfig-pool 192.168.20.2 192.168.20.254 255.255.255.0

ifconfig-ipv6 fd01:0:0:14::1 2a01:239:24e:1800::1

ifconfig-ipv6-pool fd01:0:0:14::2/112

ifconfig-pool-persist /etc/openvpn/server/ip-pool-9010.txt

keepalive 20 80

log /var/log/openvpn/server-9010.log

mode server

persist-key

persist-tun

port 9010

proto udp6

reneg-sec 86400

script-security 2

status /var/run/openvpn/status-9010

tls-server

topology subnet

verb 1

writepid /var/run/openvpn/server-9010.pid

# Topologie des VPN und Default-Gateway

push "topology subnet"

push "tun-ipv6"

<ca>

-----BEGIN CERTIFICATE-----

...

-----END CERTIFICATE-----

</ca>

<cert>

-----BEGIN CERTIFICATE-----

...

-----END CERTIFICATE-----

</cert>

<key>

-----BEGIN PRIVATE KEY-----

...

-----END PRIVATE KEY-----

</key>We also must take care that our client always gets the IP address 192.168.20.3 as we originally envisioned. This is done with the client-specific configuration file /etc/openvpn/server/conf-9010/caipirinha_client:

# Spezielle Konfigurationsdatei für den Server caipirinha.spdns.org als Client

#

ifconfig-push 192.168.20.3 255.255.255.0

ifconfig-ipv6-push fd01:0:0:14::3/111 fd01:0:0:14::1The client-specific configuration file additionally also allocates the static IPv6 address fd01:0:0:14::3 to our client. Finally, the service can then be started with:

systemctl start openvpn@server-9010.serviceServer 1: The VPN Client

On Server 1, we set up a VPN client using dev tun3. The local port shall always be 5475 (arbitrarily chosen but fixed so that we can track the connection easily if necessary). Server 2 is addressed via its public IPv6 address (2a01:239:24e:1800::1), but we could also have used its public IPv4 address (85.215.215.32). I chose the IPv6 address because the IPv4 connection would run via the provider gateway, and that might slow down the connection or make it less reliable.

# Konfigurationsdatei für den openVPN-Client auf caipirinha.spdns.org zum IONOS-Server

client

dev tun3

explicit-exit-notify

hand-window 90

keepalive 10 60

log /var/log/openvpn_ionos_vpn.log

lport 5475

persist-key

persist-tun

proto udp

remote 2a01:239:24e:1800::1 9010

remote-cert-tls server

remote-random

reneg-sec 86400

route-nopull

script-security 2

status /var/run/openvpn/status_ionos_vpn

up /etc/openvpn/start_piavpn.sh

verb 1

<ca>

-----BEGIN CERTIFICATE-----

...

-----END CERTIFICATE-----

</ca>

<cert>

-----BEGIN CERTIFICATE-----

...

-----END CERTIFICATE-----

</cert>

<key>

-----BEGIN PRIVATE KEY-----

...

-----END PRIVATE KEY-----

</key>One peculiarity is the referenced script /etc/openvpn/start_piavpn.sh. At the start of the VPN connection, this script populates the routing table VPS:

#!/bin/bash

#

# This script requires the tool "ipcalc" which needs to be installed on the target system.

# Set the correct PATH environment

PATH='/sbin:/usr/sbin:/bin:/usr/bin'

VPN_DEV=$1

VPN_SRC=$4

VPN_MSK=$5

VPN_GW=$(ipcalc ${VPN_SRC}/${VPN_MSK} | sed -n 's/^HostMin:\s*\([0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\).*/\1/p')

VPN_NET=$(ipcalc ${VPN_SRC}/${VPN_MSK} | sed -n 's/^Network:\s*\([0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\.[0-9]\{1,3\}\/[0-9]\{1,2\}\).*/\1/p')

case "${VPN_DEV}" in

"tun0") ROUTING_TABLE='Portugal';;

"tun1") ROUTING_TABLE='Brasilien';;

"tun2") ROUTING_TABLE='Singapur';;

"tun3") ROUTING_TABLE='VPS';;

"tun8") ROUTING_TABLE='China';;

esac

...

ip route add ${VPN_NET} dev ${VPN_DEV} proto static scope link src ${VPN_SRC} table ${ROUTING_TABLE}

ip route replace default dev ${VPN_DEV} via ${VPN_GW} table ${ROUTING_TABLE}When the VPN connection is stopped, then the VPN network and the default route are automatically deleted from the routing table VPS as the VPN network collapses. While the VPN connection is up, we can view the routing table VPS with:

caipirinha:~ # ip route list table VPS

default via 192.168.20.1 dev tun3

192.168.20.0/24 dev tun3 proto static scope link src 192.168.20.3Finally, the client can be started with:

systemctl start openvpn@client_ionos_vps.serviceOf course, the actual name after “openvpn@” in this command depends on how you named the respective client configuration file.

Channeling the Traffic

Now, we must make sure that traffic that is received by Server 2 and that shall be forwarded to Server 1 is channeled in an appropriate way through the VPN connection. We need to execute some commands on both servers. [3], [4] explain how that can be achieved.

Server 2: Forward the traffic

We need to enable IPv4 routing and simply forward connections to those ports where we offer our service on Server 1. This is done by:

sysctl -w net.ipv4.ip_forward=1